Deep Learning 1: How we got here

The Analytical Engine, Symbolic AI, Perceptron, Birth of Backpropagation, Deep Learning, Modern AI

As a machine learning practitioner, you’ll be bombarded with questions like “Will AI take over the world?”, and other nonsense like that. The focus of this Deep Learning series is to make you skilled enough that you can separate the signal from the noise.

1 - The Analytical Engine

This is where the story of machines doing our thinking for us begins. In the 1640s, there was an extremely gifted mathematician named Blaise Pascal.

Yes, he’s the same guy that Pascal’s Triangle is named after.

He wanted to see if it was possible to build a mechanical calculator that could handle extremely repetitive arithmetic. It did addition and subtraction directly, and multiplication and division by repeating those. Effectively, the invention relied on a bunch of gears inside the machine that would handle things like “carry over” in the exact same way a human would!

Although the machine was scrappy, it did demonstrate something quite important: there are indeed tasks that humans do which a machine could do better and faster.

By the 1840s, an inventor named Charles Babbage was wondering whether, instead of just handling basic arithmetic, a machine could do more general things... like a loop, or a conditional expression (if statements). He laid the groundwork, but unfortunately, the machine was never built as it was too complex... Still, his design showed that, on paper, such a machine was indeed possible.

The name of his machine was the “Analytical Engine”

2 - Symbolic AI

In the 1950s, some computer science researchers wondered if you could get a computer to think in a similar way to a human mind. Out of this line of work came a concise definition of AI.

Here it is for you below:

The effort to automate intellectual tasks normally performed by humans

From the 1950s to the 1980s, the general consensus among researchers was that the correct way to implement artificial intelligence was to write a program with an extremely large set of hand-written rules for it to follow.

The thought being, if there are hundreds of thousands of rules for the program (code) to follow, it is complex enough to basically do anything a human can. At that point, the goal in the above definition of AI would be achieved.

This approach to AI is called “Symbolic AI”.

3 - The Perceptron

In the late 1950s, there was a researcher named Frank Rosenblatt who took his inspiration from biology. He proposed something called a perceptron. The idea is that a perceptron would basically simulate a neuron in a human brain. Every perceptron would have the following:

Input Layer: Accepts Features

Weights: Multiplies Inputs by learned values

Threshold Function: Adds up the weighted inputs and outputs 1 if the total crosses a threshold, otherwise 0

When this was tested, it turned out that a perceptron could learn linearly separable data no problem (data where a single straight line splits the two classes). Unfortunately, it could not learn data that isn’t linearly separable, the classic example being the XOR function, so interest in the perceptron & neural networks basically fell off a cliff in the 1970s. This period is known as the first “AI Winter”.
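To make the three parts above concrete, here is a minimal sketch of a perceptron in plain Python. The function names, the learning rate, and the tiny AND/XOR datasets are my own choices for illustration, not anything from the original research. It trains on AND (which a straight line can separate) and on XOR (which it can't).

```python
def predict(weights, bias, x):
    """Threshold function: output 1 if the weighted sum is positive, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule on a list of (inputs, label) pairs."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, label in samples:
            # error is +1, 0, or -1; weights only move on a wrong prediction
            error = label - predict(weights, bias, x)
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


def accuracy(samples, weights, bias):
    """Fraction of samples the trained perceptron classifies correctly."""
    hits = sum(predict(weights, bias, x) == label for x, label in samples)
    return hits / len(samples)


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_weights, and_bias = train_perceptron(AND_DATA)
xor_weights, xor_bias = train_perceptron(XOR_DATA)

print("AND accuracy:", accuracy(AND_DATA, and_weights, and_bias))
print("XOR accuracy:", accuracy(XOR_DATA, xor_weights, xor_bias))
```

AND gets learned perfectly, while XOR never reaches 100% no matter how many epochs you give it, because no single line splits its two classes.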

4 - Birth of Backpropagation

In the 1980s, researchers realized that by using the chain rule of calculus, you could work out how much each weight in a multi-layered neural network contributed to the error. The chain rule gets applied once per layer, so the calculation grows with the number of layers in your neural network, but... it was now actually possible to build a multi-layered neural network, and actually train it.

This is the birth of the backpropagation algorithm, which calculates how each weight in your neural network should be adjusted to reduce the error. If you really like maths, you can see the video below of a guy doing the entire chain rule calculation for a neural network:

There was a lot of excitement during this period, and people even realized that within the perceptron itself, you didn’t need to stick with a hard threshold, but instead, you could use smooth non-linear functions like the sigmoid or tanh (ReLU, the popular choice today, only caught on much later).
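To see what "using the chain rule" looks like in practice, here is a minimal sketch of backpropagation on the smallest network I could think of: one input, one sigmoid hidden unit, one linear output. The starting weights, the single training example, and the learning rate are all made up for illustration. A finite-difference helper is included only as a sanity check that the hand-derived gradients are right.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Two-layer network: x -> sigmoid(w1*x + b1) -> w2*h + b2."""
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)  # hidden activation
    y = w2 * h + b2           # network output
    return h, y


def loss_fn(params, x, target):
    """Squared error between the network output and the target."""
    _, y = forward(params, x)
    return (y - target) ** 2


def backward(params, x, target):
    """Gradients of the loss, applying the chain rule one layer at a time."""
    w1, b1, w2, b2 = params
    h, y = forward(params, x)
    d_y = 2.0 * (y - target)    # dL/dy
    d_w2 = d_y * h              # dL/dw2 = dL/dy * dy/dw2
    d_b2 = d_y                  # dL/db2
    d_h = d_y * w2              # pass the error back to the hidden layer
    d_z = d_h * h * (1.0 - h)   # through the sigmoid: dh/dz = h * (1 - h)
    d_w1 = d_z * x              # dL/dw1
    d_b1 = d_z                  # dL/db1
    return [d_w1, d_b1, d_w2, d_b2]


def numerical_gradient(params, x, target, eps=1e-6):
    """Finite-difference estimate, used only to sanity-check backward()."""
    grads = []
    for i in range(len(params)):
        up = list(params)
        down = list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((loss_fn(up, x, target) - loss_fn(down, x, target)) / (2 * eps))
    return grads


params = [0.5, 0.0, -0.3, 0.1]  # arbitrary starting weights (w1, b1, w2, b2)
x, target = 1.0, 1.0            # one made-up training example

initial_loss = loss_fn(params, x, target)
for _ in range(200):
    grads = backward(params, x, target)
    params = [p - 0.1 * g for p, g in zip(params, grads)]  # gradient descent step
final_loss = loss_fn(params, x, target)

print("loss before training:", initial_loss)
print("loss after training: ", final_loss)
```

A real network does exactly the same thing with vectors and matrices instead of single numbers, and with one extra chain rule step for every extra layer.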

Unfortunately, the hype quickly died down again when people realized that the hardware of the time (1980s) was trash, and you would have to wait an extremely long time just to see results.

For example, even a simple 3-layer neural network could take about a week to train (lol).

And, just like that, the hype for Neural Networks & AI died down, once again.

5 - Deep Learning

In the 1990s, there was a researcher by the name of Yann LeCun, and he worked out the idea (and the algorithm) for training a convolutional layer in a neural network. Effectively, a convolutional layer is like a small sliding window that moves over an image, and the backpropagation algorithm from above is used to learn which underlying patterns that window should pick up on.
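Here is a rough sketch of that sliding window in plain Python. The function name, the toy 4x4 image, and the hand-picked edge filter are all assumptions for illustration. In a real convolutional network the numbers in the kernel are not picked by hand; they are learned with backpropagation. (Strictly speaking this computes a cross-correlation, which is what deep learning libraries usually mean by "convolution".)

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            # Multiply the window under the kernel element by element, then sum.
            total = 0
            for i in range(k_rows):
                for j in range(k_cols):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Toy image: dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked filter that responds where brightness jumps from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The output lights up only in the middle column, which is exactly where the dark-to-bright edge sits in the image.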

The mathematics was indeed correct, and on point... But the hardware was trash, and so this was basically ignored by most computer scientists.

In the 2010s, researchers used newer hardware (GPUs) to give neural networks another try, more specifically to see if a Convolutional Neural Network like Yann’s could do a good job at image recognition. The results were absolutely phenomenal, and led to the birth of AlexNet in 2012 (a very popular convolutional neural network for computer vision).

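After AlexNet, people went back to several old neural network research papers and came across one from 1997 that described the Long Short-Term Memory (LSTM), which is a type of recurrent neural network. This revived interest in recurrent neural networks for sequence data like text and speech, and deep reinforcement learning took off around the same time.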

6 - Modern AI

In the mid-2010s, researchers started bolting something called an “attention mechanism” onto recurrent neural networks to help with tasks like translation. It stayed an add-on... Until 2017, when a team at Google basically said “why don’t we build a model out of attention alone, and see what happens”.

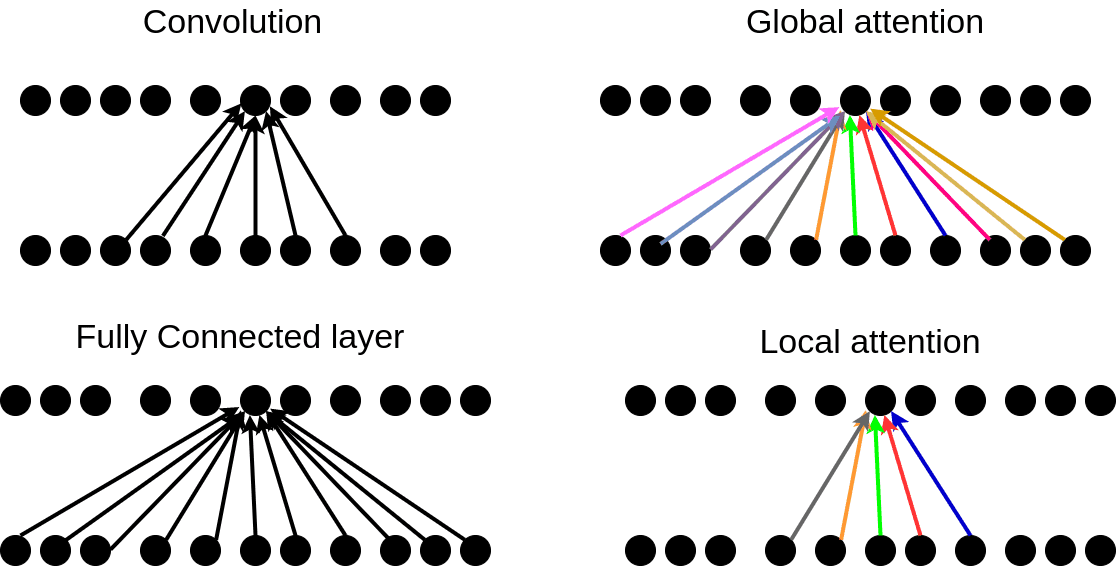

This basically led to a new architecture called the Transformer, which is built entirely around the attention mechanism. Instead of processing data sequentially (like Recurrent Neural Networks), the Transformer lets the model look at all parts of the input at once, making it much faster to train on modern hardware and better at understanding context.
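To give a feel for "looking at all parts of the input at once", here is a minimal sketch of scaled dot-product attention in plain Python. The function names and the three toy token vectors are assumptions for illustration. A real Transformer first passes each token through learned projections to get its queries, keys, and values; this sketch skips that and uses the raw vectors for all three.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this query against every key in the sequence (no sequential scan).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        mixed = [sum(w * v[d] for w, v in zip(weights, values))
                 for d in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy "token" vectors standing in for a short input sequence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)

for i, row in enumerate(weights):
    print("token", i, "attends with weights", [round(w, 3) for w in row])
```

Each row of weights says how much one token looks at every token in the sequence, and because no row depends on another, they can all be computed in parallel.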

This breakthrough led to the creation of Large Language Models (LLMs) like GPT-3 and the models behind ChatGPT.

Meanwhile, researchers messed around with CNNs (again) and discovered they could also be used for tasks like video processing, and object detection with mind-blowing accuracy.

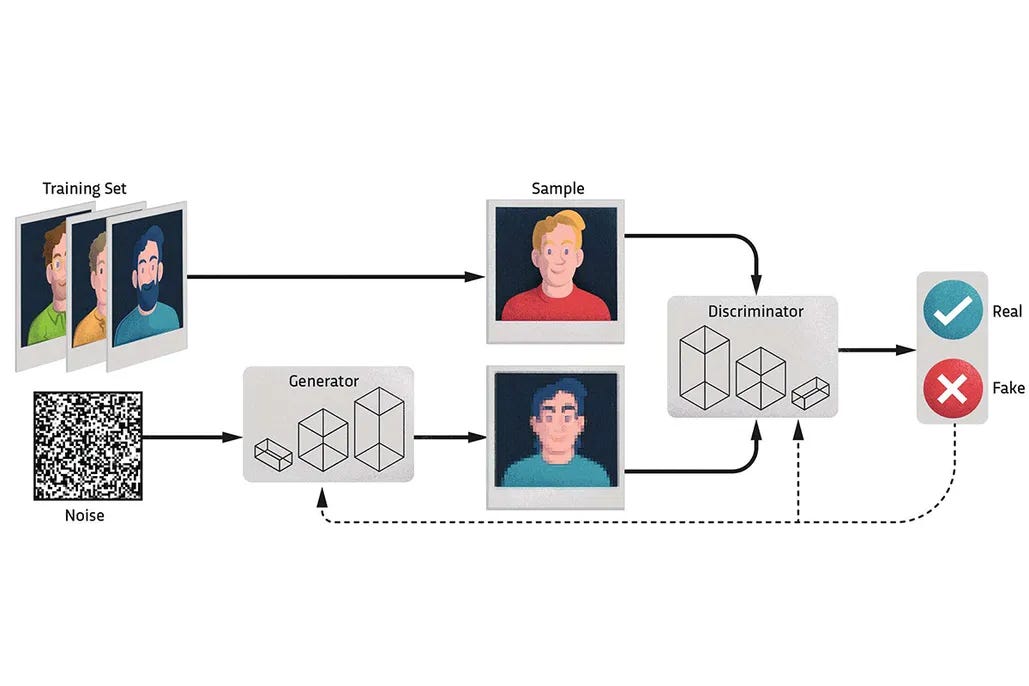

We also got the birth of generative models like GANs (Generative Adversarial Networks) and text-to-image models like DALL-E and Stable Diffusion. These generative models brought us into an era where AI could create. They can generate photorealistic images, design artwork, or even produce music, fundamentally changing creative industries.

And voila, that’s how we ended up here today.