Before we start, you should brush up on how classes and object instantiation work in Python. You can look at a brief overview here.

1 - Subclassing the model class

Picking up from where we left off in part 1, the last model-building pattern you should become familiar with is the most advanced one: model subclassing. The basic idea is that we use Python’s object-oriented programming (classes) to create our neural network.

Let’s start by rewriting our old neural network, which predicted a ticket’s priority and department, using this new methodology.

Here’s the old code from the last post:

```python
import numpy as np
from tensorflow import keras

vocab_size = 10000
num_tags = 100
num_departments = 4

# Inputs:
title = keras.Input(shape=(vocab_size,), name='title')
text_body = keras.Input(shape=(vocab_size,), name='text_body')
tags = keras.Input(shape=(num_tags,), name='tags')

# Merge the inputs and mix them in a shared hidden layer
features = keras.layers.Concatenate()([title, text_body, tags])
features = keras.layers.Dense(64, activation='relu')(features)

# Two output heads: a priority score and a department classifier
priority = keras.layers.Dense(1, activation='sigmoid', name='priority')(features)
department = keras.layers.Dense(
    num_departments, activation='softmax', name='department'
)(features)

model = keras.Model(inputs=[title, text_body, tags],
                    outputs=[priority, department])
```

Now, here’s what it looks like as a subclassed model:

```python
class CustomerTicketModel(keras.Model):

    def __init__(self, num_departments):
        super().__init__()
        # Layers are created once, in the constructor
        self.concat_layer = keras.layers.Concatenate()
        self.mixing_layer = keras.layers.Dense(64, activation='relu')
        self.priority_scorer = keras.layers.Dense(1, activation='sigmoid')
        self.department_classifier = keras.layers.Dense(
            num_departments, activation='softmax'
        )

    def call(self, inputs):
        # The forward pass: inputs arrive as a dict keyed by name
        title = inputs['title']
        text_body = inputs['text_body']
        tags = inputs['tags']
        features = self.concat_layer([title, text_body, tags])
        features = self.mixing_layer(features)
        priority = self.priority_scorer(features)
        department = self.department_classifier(features)
        return priority, department
```

I highly recommend spending a little time comparing the functional API version with the subclassed version. You’ll quickly see they are very similar: the layers are now created in __init__(), and the wiring that used to happen between keras.Input objects now happens inside call().

Once you’ve defined the model, you can instantiate it the same way you would instantiate any Python class:

```python
model = CustomerTicketModel(num_departments=4)
```

1.1 Compiling a Model subclass

You can compile and train a subclassed model exactly the same way as a Sequential or functional API model:

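```python
import numpy as np

# Random dummy data, for illustration only (shapes match the inputs above)
num_samples = 1280
title_data = np.random.randint(0, 2, size=(num_samples, 10000))
text_body_data = np.random.randint(0, 2, size=(num_samples, 10000))
tags_data = np.random.randint(0, 2, size=(num_samples, 100))
priority_data = np.random.random(size=(num_samples, 1))
department_data = np.eye(4)[np.random.randint(0, 4, size=num_samples)]  # one-hot

model = CustomerTicketModel(num_departments=4)
# One loss and one list of metrics per output, in the order call() returns them
model.compile(optimizer='rmsprop',
              loss=['mean_squared_error', 'categorical_crossentropy'],
              metrics=[['mean_absolute_error'], ['accuracy']])
model.fit({'title': title_data, 'text_body': text_body_data, 'tags': tags_data},
          [priority_data, department_data],
          epochs=1)
```

The model subclassing methodology is the most flexible way to build a neural network. It allows you to build models that cannot be expressed as a single, fixed directed acyclic graph of layers. For example, what if you wanted a model that reuses the same layer a different number of times depending on an argument you pass each time you call it? With the Sequential or functional API, the graph of layers is fixed once the model is built, so this is awkward at best.

In the subclassing methodology, you can just write an ordinary Python for loop inside call(), and voila, job’s done. That’s why model subclassing is considered both the most complex and the most versatile of the three methodologies.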
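Here is a minimal sketch of that idea, assuming TensorFlow’s bundled Keras (from tensorflow import keras). The class name RepeatedRefiner and its num_steps argument are hypothetical, made up for illustration: call() uses a plain Python for loop to apply one shared Dense layer as many times as the caller asks, so the amount of computation changes from call to call while the weights stay the same.

```python
import numpy as np
from tensorflow import keras


class RepeatedRefiner(keras.Model):
    """Hypothetical model that reuses one layer a variable number of times."""

    def __init__(self, units=16):
        super().__init__()
        self.project = keras.layers.Dense(units, activation="relu")
        # A single layer whose weights are shared across every loop iteration
        self.refine = keras.layers.Dense(units, activation="relu")
        self.head = keras.layers.Dense(1)

    def call(self, inputs, num_steps=3):
        x = self.project(inputs)
        # Ordinary Python control flow: the loop count can differ on every call
        for _ in range(num_steps):
            x = x + self.refine(x)  # residual update with the shared layer
        return self.head(x)


model = RepeatedRefiner()
x = np.random.random((4, 8)).astype("float32")
short_run = model(x, num_steps=1)
long_run = model(x, num_steps=10)
print(short_run.shape, long_run.shape)  # (4, 1) (4, 1)
print(len(model.trainable_weights))     # 6: same weights no matter how many steps
```

When you train a model like this with fit(), Keras calls it without the extra argument, so num_steps falls back to its default (3 here).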

Keep in mind that this versatility comes at a cost: with subclassed models, you are responsible for much more of the model’s logic, which means there is much more room for mistakes. Because of this, expect to make more errors and to spend more time debugging.

1.2 Mixing and Matching Different types of Keras models

Remember, in Keras we have 3 different ways of building models: Sequential, Functional, and model subclassing.

Just because you choose one of them doesn’t lock you out of the others. All models in the Keras API can work smoothly with each other, whether they are Sequential models, functional models, or subclassed models written from scratch.
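As a quick sketch of this interoperability, here is a functional model that uses a Sequential model and a small subclassed model as building blocks, calling each one exactly like a layer. It assumes TensorFlow’s bundled Keras; the names encoder, FeatureMixer, and combined, and the layer sizes, are hypothetical.

```python
import numpy as np
from tensorflow import keras


class FeatureMixer(keras.Model):
    """Hypothetical subclassed model used as a building block."""

    def __init__(self):
        super().__init__()
        self.dense = keras.layers.Dense(8, activation="relu")

    def call(self, inputs):
        return self.dense(inputs)


# A Sequential model used as a building block
encoder = keras.Sequential([
    keras.Input(shape=(32,)),
    keras.layers.Dense(16, activation="relu"),
])

# A functional model that calls both of them like layers
inputs = keras.Input(shape=(32,))
x = encoder(inputs)        # Sequential model inside a functional model
x = FeatureMixer()(x)      # subclassed model inside a functional model
outputs = keras.layers.Dense(1, activation="sigmoid")(x)
combined = keras.Model(inputs=inputs, outputs=outputs)

preds = combined(np.zeros((2, 32), dtype="float32"))
print(preds.shape)  # (2, 1)
```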

At the end of the day, they are all part of the same spectrum of workflows. Let’s make a subclassed model that includes a functional API model:

```python
from tensorflow import keras

# A small functional API model: 64 features in, one probability out
inputs = keras.Input(shape=(64,))
outputs = keras.layers.Dense(1, activation='sigmoid')(inputs)
binary_classifier = keras.Model(inputs=inputs, outputs=outputs)

class MyModel(keras.Model):

    def __init__(self, num_classes=2):
        super().__init__()
        self.dense = keras.layers.Dense(64, activation='relu')
        # The functional model is reused here as if it were a single layer
        self.classifier = binary_classifier

    def call(self, inputs):
        features = self.dense(inputs)
        return self.classifier(features)

model = MyModel()
```

1.3 Sequential, Functional, and Subclassing summary

The Sequential API is the most straightforward way to build a Keras model. It’s ideal for simple feedforward networks like MLPs, or straightforward CNN architectures, where the flow goes strictly from input to output without branching or merging.

When to use:

Use Sequential when your model architecture is strictly linear and does not include any layers with multiple inputs or outputs.

Advantages:

Simple and readable syntax.

Fast prototyping for simple models.

Disadvantages:

Not suitable for models with complex topologies.

Difficult to adapt or extend once the model becomes slightly non-linear.
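For reference, here is a minimal sketch of the kind of plain MLP that Sequential is designed for, assuming TensorFlow’s bundled Keras. The layer sizes (20 inputs, two hidden layers of 32 units, 3 output classes) are arbitrary choices for illustration.

```python
from tensorflow import keras

# A strictly linear stack: each layer feeds exactly one next layer
mlp = keras.Sequential([
    keras.Input(shape=(20,)),
    keras.layers.Dense(32, activation="relu"),
    keras.layers.Dense(32, activation="relu"),
    keras.layers.Dense(3, activation="softmax"),
])
mlp.summary()
print(mlp.count_params())  # 1827 = (20*32 + 32) + (32*32 + 32) + (32*3 + 3)
```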

The Functional API offers a more flexible way to define models. It is great when dealing with non-linear topologies like models with branches, skip connections, and multiple inputs or outputs.

When to use:

Use Functional when you need to build more complex architectures such as ResNet, Inception, multi-task learning models, or encoder-decoder architectures, where layers might need to share inputs or outputs, or where intermediate outputs are reused.

Advantages:

Layers are connected using tensors, providing transparency and inspectability.

Disadvantages:

Requires careful wiring of tensors to ensure correctness, which may be prone to errors in deeply branched architectures.
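As a small illustration of a non-linear topology, here is a sketch of a residual (skip) connection, the building block of ResNet-style models, assuming TensorFlow’s bundled Keras and arbitrary layer sizes. The Add layer merges a branch back into the main path, which a Sequential stack cannot express, and every connection is an explicit tensor you can inspect.

```python
import numpy as np
from tensorflow import keras

inputs = keras.Input(shape=(32,), name="features")
x = keras.layers.Dense(32, activation="relu")(inputs)
residual = x                                  # keep a reference for the skip connection
x = keras.layers.Dense(32, activation="relu")(x)
x = keras.layers.Add()([x, residual])         # the branch merges back into the main path
outputs = keras.layers.Dense(1, activation="sigmoid")(x)

model = keras.Model(inputs=inputs, outputs=outputs)
model.summary()  # the full layer graph is known before any data is seen
preds = model(np.zeros((2, 32), dtype="float32"))
```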

The Model Subclassing API gives you maximum control and flexibility, allowing you to subclass keras.Model and override the __init__() and call() methods. This makes it the go-to for research and advanced custom architectures.

When to use:

Use subclassing when you need dynamic behavior in your model’s forward pass, or when building a highly customized model architecture.

Advantages:

Complete freedom to define the model's forward pass.

Ideal for models requiring dynamic computation (e.g., RNNs with variable time steps, conditionally routed networks).

Disadvantages:

Limited automatic model inspection (i.e., no .summary() until the model has been built or called on data).

Harder debugging, since there is no static graph of layers to inspect or visualize.
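To make the .summary() point concrete, here is a minimal sketch, assuming TensorFlow’s bundled Keras; the class TinyModel is hypothetical. A subclassed model doesn’t know its input shape until it sees data, so its layers have no weights until the first call.

```python
import numpy as np
from tensorflow import keras


class TinyModel(keras.Model):
    """Hypothetical two-layer subclassed model."""

    def __init__(self):
        super().__init__()
        self.hidden = keras.layers.Dense(4, activation="relu")
        self.head = keras.layers.Dense(1)

    def call(self, inputs):
        return self.head(self.hidden(inputs))


model = TinyModel()
# No input shape is known yet, so the Dense layers haven't created any weights
n_weights_before = len(model.weights)
print(n_weights_before)  # 0

# The first call reveals the input shape (3 features) and builds every layer
model(np.zeros((1, 3), dtype="float32"))
print(model.count_params())  # 21 = (3*4 + 4) + (4*1 + 1)
model.summary()
```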

For us, we’ll primarily use the functional API, as it sits in the sweet spot between complexity and capability.

2 - Additional Keras Utilities

2.1 Metrics

Metrics are key to measuring the performance of your model, and in particular to comparing its performance on the training data with its performance on the test data.

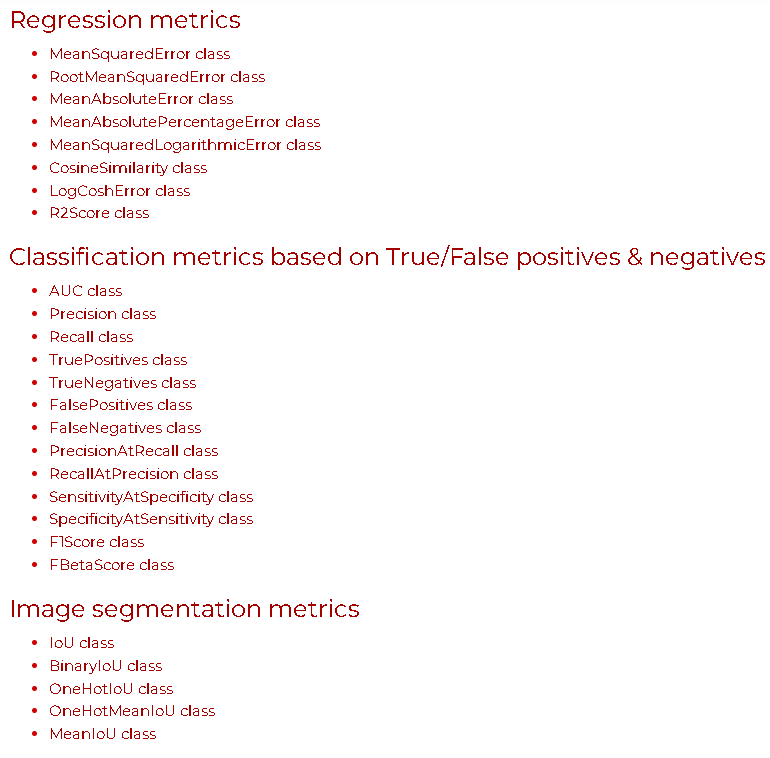

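Commonly used metrics for classification and regression are already part of the built-in keras.metrics module, and most of the time that’s what you will use. A few of the readily available ones are keras.metrics.BinaryAccuracy, keras.metrics.CategoricalAccuracy, keras.metrics.AUC, keras.metrics.Precision, keras.metrics.Recall, and keras.metrics.MeanAbsoluteError.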
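A built-in metric is a stateful object: you feed it batches with update_state() and read the running value with result(). Here is a minimal sketch, assuming TensorFlow’s bundled Keras, with made-up labels and predictions.

```python
from tensorflow import keras

# BinaryAccuracy thresholds predictions at 0.5 by default
accuracy = keras.metrics.BinaryAccuracy()
accuracy.update_state([1, 0, 1, 0], [0.9, 0.2, 0.4, 0.7])
accuracy_value = float(accuracy.result())
print(accuracy_value)  # 0.5: the first two predictions are right, the last two are wrong

# In practice, built-in metrics are usually handed to compile()
model = keras.Sequential([
    keras.Input(shape=(8,)),
    keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="rmsprop", loss="binary_crossentropy",
              metrics=[keras.metrics.BinaryAccuracy(), keras.metrics.AUC()])
```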

If you want to make your own, you can subclass the keras.metrics.Metric class, a similar idea to how we subclassed keras.Model.
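Here is a minimal sketch of a custom metric, assuming TensorFlow’s bundled Keras. The class name CustomRMSE is hypothetical, and Keras already ships a built-in keras.metrics.RootMeanSquaredError, so this is purely to show the pattern: create state in __init__() with add_weight(), accumulate it in update_state(), compute the value in result(), and clear it in reset_state().

```python
import tensorflow as tf
from tensorflow import keras


class CustomRMSE(keras.metrics.Metric):
    """Hypothetical metric: root mean squared error accumulated over batches."""

    def __init__(self, name="custom_rmse", **kwargs):
        super().__init__(name=name, **kwargs)
        # State: running sum of squared errors and number of values seen
        self.sum_sq_error = self.add_weight(name="sum_sq_error", initializer="zeros")
        self.num_values = self.add_weight(name="num_values", initializer="zeros")

    def update_state(self, y_true, y_pred, sample_weight=None):
        # sample_weight is accepted for API compatibility but ignored in this sketch
        y_true = tf.cast(y_true, tf.float32)
        y_pred = tf.cast(y_pred, tf.float32)
        self.sum_sq_error.assign_add(tf.reduce_sum(tf.square(y_true - y_pred)))
        self.num_values.assign_add(tf.cast(tf.size(y_true), tf.float32))

    def result(self):
        # divide_no_nan returns 0 instead of NaN before any data has been seen
        return tf.sqrt(tf.math.divide_no_nan(self.sum_sq_error, self.num_values))

    def reset_state(self):
        self.sum_sq_error.assign(0.0)
        self.num_values.assign(0.0)


rmse = CustomRMSE()
rmse.update_state([1.0, 2.0], [1.0, 4.0])  # squared errors: 0 and 4
rmse.update_state([3.0, 3.0], [5.0, 3.0])  # squared errors: 4 and 0
rmse_value = float(rmse.result())
print(rmse_value)  # sqrt(8 / 4), about 1.4142
```

Once defined, it can be passed to compile() just like a built-in metric, for example metrics=[CustomRMSE()].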

2.2 Callbacks

Launching a training run on a large dataset for hundreds of epochs using model.fit() is a bit like launching a paper airplane… After the initial push, you have absolutely zero control over what happens. But… what if there were a way to control it, even after it’s been launched?

That’s basically what callbacks are, but for neural networks.

A callback is an object that you pass to the model in .fit(), and that the model calls at various points during training. It has access to all the available data about the state of the model and its performance, and it can take actions such as:

interrupt training

save a model

load a different weight set

or do anything else you specify
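To see what “called at various points during training” means in practice, here is a minimal sketch of a custom callback, assuming TensorFlow’s bundled Keras. The class name LossHistory and the 1e-3 stopping threshold are hypothetical. Keras calls on_train_begin() once and on_epoch_end() after every epoch, passing the current metrics in logs, and self.model gives the callback access to the model being trained.

```python
import numpy as np
from tensorflow import keras


class LossHistory(keras.callbacks.Callback):
    """Hypothetical callback: records the training loss after every epoch."""

    def on_train_begin(self, logs=None):
        self.epoch_losses = []

    def on_epoch_end(self, epoch, logs=None):
        logs = logs or {}
        self.epoch_losses.append(logs.get("loss"))
        # A callback can also act on the model, e.g. interrupt training
        if logs.get("loss", float("inf")) < 1e-3:
            self.model.stop_training = True


# Random toy regression data, for illustration only
rng = np.random.default_rng(0)
x = rng.random((64, 4)).astype("float32")
y = rng.random((64, 1)).astype("float32")

model = keras.Sequential([keras.Input(shape=(4,)), keras.layers.Dense(1)])
model.compile(optimizer="rmsprop", loss="mse")
loss_history = LossHistory()
model.fit(x, y, epochs=5, batch_size=16, callbacks=[loss_history], verbose=0)
print(loss_history.epoch_losses)
```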

Here are a few ways that you can use callbacks:

Model checkpointing: Save the current state of the model at different points during training

Early stopping: Interrupt training when the validation loss is no longer improving

Dynamic parameter adjustment: Adjust values such as the optimizer’s learning rate during training

To use an out-of-the-box callback, look in the keras.callbacks module. It includes, among others, ModelCheckpoint, EarlyStopping, ReduceLROnPlateau, LearningRateScheduler, CSVLogger, and TensorBoard.
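Here is a minimal sketch that combines the three uses listed above (checkpointing, early stopping, and learning-rate adjustment) with built-in callbacks, assuming TensorFlow’s bundled Keras. The toy data, the tiny model, the patience values, and the checkpoint file name best_model.keras are all hypothetical; recent Keras versions expect a .keras extension when saving the whole model.

```python
import os
import tempfile

import numpy as np
from tensorflow import keras

# Random toy binary-classification data, for illustration only
rng = np.random.default_rng(0)
x = rng.random((200, 8)).astype("float32")
y = (x.sum(axis=1) > 4).astype("float32")

model = keras.Sequential([
    keras.Input(shape=(8,)),
    keras.layers.Dense(16, activation="relu"),
    keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="rmsprop", loss="binary_crossentropy", metrics=["accuracy"])

checkpoint_path = os.path.join(tempfile.mkdtemp(), "best_model.keras")
callbacks = [
    # Model checkpointing: keep only the best model so far, judged by val_loss
    keras.callbacks.ModelCheckpoint(checkpoint_path, monitor="val_loss",
                                    save_best_only=True),
    # Early stopping: stop once val_loss hasn't improved for 5 epochs
    keras.callbacks.EarlyStopping(monitor="val_loss", patience=5),
    # Learning-rate adjustment: halve it when val_loss stalls for 2 epochs
    keras.callbacks.ReduceLROnPlateau(monitor="val_loss", factor=0.5, patience=2),
]
history = model.fit(x, y, epochs=50, validation_split=0.2,
                    callbacks=callbacks, verbose=0)
print(len(history.history["loss"]), "epochs actually run")
```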

That’s all for now. In the next post, we’ll start using deep learning for computer vision (remember, we’ll primarily be using the functional API to build these models).