Intro to Deep Learning for NLP

a brief history and how to prep our data

In comp-sci, we refer to human languages like English, French, or German as “natural” languages to separate them from languages that were designed for machines (Assembly, LISP, XML).

Every machine language was designed; its starting point was a human engineer writing down a set of formal rules to describe what statements you could make in that language and what they meant. Rules came first, and people only started using the language once the rule set was complete.

With human language, it’s the reverse: usage comes first, and rules come later. Natural language was shaped by an evolutionary process, kinda like biological organisms, and that’s what makes it “natural”.

The grammar rules of English, for example, were typically formalized after the fact, and they are often ignored or broken by its users.

History of NLP in computing

Here is a brief history of how the approach to tackling NLP has changed over time.

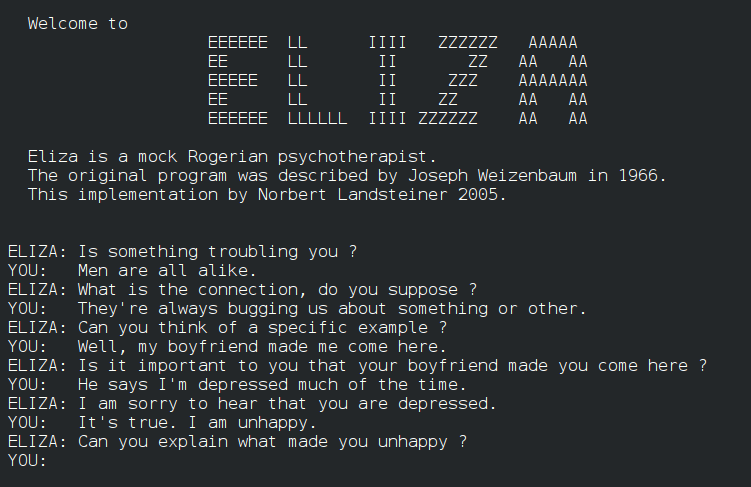

1950s–1970s: Hand-built rules dominate

Early NLP systems were written by linguists and programmers who encoded grammar by hand: tokenizers, morphological analyzers, part-of-speech rules, and context-free parsers. Machine translation projects in the 1950s–60s, expert systems in the 1970s, and grammar formalisms all followed this pattern. You wrote the rules first, then the machine applied them.

1960s: ELIZA shows the limits of patterns

Joseph Weizenbaum’s ELIZA mimicked a psychotherapist using simple pattern matching and scripted responses. It felt clever because humans fill in the gaps, but there was no understanding under the hood. ELIZA became the canonical example of how far you can get with templates and how quickly you hit a ceiling.

1980s: Hardware improves and the question changes

With more compute and storage available, engineers began to ask a different question: instead of hand-writing every rule, can the machine learn them from data on its own? In speech, this led to probabilistic models like Hidden Markov Models and n-gram language models trained on audio and text corpora. The idea was pragmatic: let statistics decide which sequence is most likely, rather than arguing about the “right” rule.
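To make the n-gram idea concrete, here is a minimal sketch of a bigram (n = 2) language model estimated by maximum likelihood: count how often each word follows another, turn the counts into conditional probabilities, and score a sentence by multiplying them. The toy corpus, the `<s>`/`</s>` boundary markers and the function names are assumptions made for illustration; real systems also apply smoothing so that unseen word pairs don't get zero probability.

```python
from collections import Counter, defaultdict

def train_bigram(sentences):
    """Count bigrams and turn them into maximum-likelihood estimates P(next | prev)."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        # <s> and </s> mark sentence boundaries (a common convention, assumed here)
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    probs = {}
    for prev, followers in counts.items():
        total = sum(followers.values())
        probs[prev] = {nxt: c / total for nxt, c in followers.items()}
    return probs

def sentence_probability(probs, sentence):
    """Multiply P(next | prev) along the sentence; an unseen bigram gives probability 0."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    p = 1.0
    for prev, nxt in zip(tokens, tokens[1:]):
        p *= probs.get(prev, {}).get(nxt, 0.0)
    return p

# Hypothetical toy corpus
corpus = ["the cat sat on the mat", "the dog sat on the rug", "the cat ate"]
model = train_bigram(corpus)

print(model["the"]["cat"])                                     # 0.4 (2 of the 5 words after "the")
print(sentence_probability(model, "the cat sat on the rug"))   # about 0.04
print(sentence_probability(model, "mat the on sat cat the"))   # 0.0
```

The scrambled sentence scores 0 even though every word appears in the corpus: the model prefers word orders it has actually seen, which is the “let statistics decide” idea in miniature.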


1990s: The statistical turn becomes mainstream

Faster CPUs tipped the balance toward data-driven methods. IBM’s speech recognition and machine translation (MT) groups popularized maximum-likelihood training, EM (expectation-maximization), and n-gram modeling; resources like the Penn Treebank made supervised learning practical.

Frederick Jelinek captured the mood with a sharp one-liner: “Every time I fire a linguist, the performance of the speech recognizer goes up.” In other words, if your rules disagreed with the data, the data usually won.

Preparing text data

Deep Learning models can only process numeric tensors; they cannot take raw text as input. Vectorizing text is the process of transforming text into numeric tensors. Text vectorization processes come in many shapes & forms, but they all generally tend to follow this template:

First, you standardize the text to make it easier to process, for example by converting it to lowercase or removing punctuation.

Next, you split the text into units (called tokens), such as characters, words, or groups of words. This process is called tokenization.

Finally, you convert each token into a numerical vector. This usually involves first indexing all tokens present in the data.
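The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production vectorizer: it assumes lowercasing plus removal of ASCII punctuation as the standardization rule, whitespace splitting as the tokenizer, and a vocabulary indexed in order of first appearance. The function names are hypothetical, and reserving index 0 for unknown tokens is a common convention rather than a requirement. The sketch stops at integer indices, i.e. the indexing part of the last step.

```python
import string

def standardize(text: str) -> str:
    """Step 1: lowercase and remove ASCII punctuation."""
    return text.lower().translate(str.maketrans("", "", string.punctuation))

def tokenize(text: str) -> list[str]:
    """Step 2: split on whitespace."""
    return text.split()

def build_vocabulary(texts: list[str]) -> dict[str, int]:
    """Index every token seen in the data; index 0 is reserved for unknown tokens."""
    vocab = {"[UNK]": 0}
    for text in texts:
        for token in tokenize(standardize(text)):
            if token not in vocab:
                vocab[token] = len(vocab)
    return vocab

def vectorize(text: str, vocab: dict[str, int]) -> list[int]:
    """Step 3 (indexing): map each token to its integer index; unknown tokens map to 0."""
    return [vocab.get(token, 0) for token in tokenize(standardize(text))]

vocab = build_vocabulary(["The cat sat on the mat.", "The dog ate."])
print(vectorize("The cat sat on the mat.", vocab))  # [1, 2, 3, 4, 1, 5]
print(vectorize("A cat ate!", vocab))               # [0, 2, 7]
```

Note how “the” gets the same index both times it appears, and how a word never seen while building the vocabulary (“a”) falls back to the reserved unknown index.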

Let’s walk through an example together to see how this process plays out:

Raw text: “The cat sat on the mat.”

After standardization, it would look something like this:

Standardized text: “the cat sat on the mat”

After tokenization, it would look something like this:

Tokens: “the”, “cat”, “sat”, “on”, “the”, “mat”

Let’s say our data contained plenty of words and each word had been assigned an integer index. After indexing, the tokens might look something like this:

Token indices: 3, 1, 4, 9, 3, 117

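And of course, for our deep learning model to actually read the data, we then turn each index into a vector. The simplest way is one-hot encoding: each token becomes a vector as long as the vocabulary, with a 1 at the token’s index and 0 everywhere else. If we stack those vectors side by side, one column per token, the rows for vocabulary indices 1 through 5 might look something like this:

0,1,0,0,0,0

0,0,0,0,0,0

1,0,0,0,1,0

0,0,1,0,0,0

0,0,0,0,0,0

Row 3 has a 1 in columns 1 and 5 because “the” (index 3) appears twice; the 1s for “on” (index 9) and “mat” (index 117) sit in rows further down.

And voila, that’s how you can take a sentence and turn it into usable data for our ML model to read.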
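Here is the one-hot step in plain Python, as a sketch. It assumes a vocabulary of 118 entries (the smallest size that fits index 117 from the example; a real vocabulary would usually be much larger) and reuses the token indices 3, 1, 4, 9, 3, 117. The helper name `one_hot` is hypothetical. Each token becomes one row; transposing the result gives the one-column-per-token layout, and its rows for indices 1 to 5 reproduce the matrix printed above.

```python
def one_hot(indices: list[int], vocab_size: int) -> list[list[int]]:
    """Return one row per token: a vocab_size-long vector with a single 1 at the token's index."""
    rows = []
    for index in indices:
        if not 0 <= index < vocab_size:
            raise ValueError(f"index {index} is outside a vocabulary of size {vocab_size}")
        row = [0] * vocab_size
        row[index] = 1
        rows.append(row)
    return rows

token_indices = [3, 1, 4, 9, 3, 117]              # "the cat sat on the mat"
encoded = one_hot(token_indices, vocab_size=118)  # assumed vocabulary size

print(len(encoded), len(encoded[0]))  # 6 118

# Transpose: one row per vocabulary index, one column per token
columns_view = [list(col) for col in zip(*encoded)]
for vocab_index in range(1, 6):
    print(columns_view[vocab_index])  # the five rows shown above
```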

Text Standardization

Let’s focus entirely on text standardization first. Consider these 2 sentences:

sunset came. i was staring at the Mexico sunset. Isnt nature dope af??????

Sunset came; I stared at the México sunset. Isn’t nature dope af?

The two sentences are very similar; in fact, they say basically the same thing. But if you were to convert them to byte strings, they would end up with very different representations. For example, “i” is not the same as “I”, and “e” is not the same as “é”.

Text standardization is a basic form of feature engineering that aims to erase encoding differences you don’t want your model to have to deal with. It’s not exclusive to ML either; you’d have to do much the same thing if you were building a search engine.

One of the simplest and most widely used standardization schemes is to “convert to lowercase and remove punctuation characters”. If we implement that, our sentences become:

sunset came i was staring at the mexico sunset isnt nature dope af

sunset came i stared at the méxico sunset isn’t nature dope af
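The lowercase-and-strip-punctuation scheme is a one-liner in Python. This sketch uses the standard library's `string.punctuation`, which is an assumption about what counts as punctuation: it contains only ASCII characters, so the curly apostrophe (U+2019) in “isn’t” is left alone. That is why “isn’t” survives in the second sentence above.

```python
import string

def standardize(text: str) -> str:
    """Lowercase, then delete every character in string.punctuation (ASCII only)."""
    return text.lower().translate(str.maketrans("", "", string.punctuation))

s1 = "sunset came. i was staring at the Mexico sunset. Isnt nature dope af??????"
s2 = "Sunset came; I stared at the M\u00e9xico sunset. Isn\u2019t nature dope af?"

print(standardize(s1))  # sunset came i was staring at the mexico sunset isnt nature dope af
print(standardize(s2))  # sunset came i stared at the méxico sunset isn’t nature dope af
```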

As you can see, they are starting to get closer. Another common transformation is to replace accented or other special characters with their plain English counterparts: è and é become e, î becomes i, and so on.
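One common way to do this in Python is Unicode normalization with the standard-library `unicodedata` module, sketched below: NFKD decomposes an accented letter into a base letter plus a combining accent mark, and we then drop the marks. Treat it as one approach among several; letters that have no decomposition, such as ø, pass through unchanged. The function name `strip_accents` is hypothetical.

```python
import unicodedata

def strip_accents(text: str) -> str:
    """Decompose characters (NFKD) so accents become separate combining marks, then drop the marks."""
    decomposed = unicodedata.normalize("NFKD", text)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))

print(strip_accents("m\u00e9xico"))             # mexico
print(strip_accents("\u00e8 \u00e9 \u00ee"))    # e e i
```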

Lastly, a much more advanced standardization pattern, rarely used in a machine learning context, is called “stemming”. It’s the process of converting variations of a term (such as different conjugated forms of a verb) into a single shared representation, like turning “caught” and “been caught” into “[catch]”.

When we apply stemming to our 2 sentences, they finally end up becoming the exact same sentence:

sunset came i [stare] at the mexico sunset isnt nature dope af
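Real stemmers, such as the Porter stemmer, are collections of suffix-stripping rules. The sketch below is a deliberately tiny, hypothetical rule set (not Porter's algorithm) that is just enough to collapse “staring” and “stared” to “stare” while leaving “started” as “start”. The input sentences assume lowercasing, punctuation removal and accent stripping were already applied. It works one word at a time, so the auxiliary “was” in the first sentence survives; making the two sentences fully identical, as above, also requires handling auxiliaries. Mapping irregular forms like “caught” to “catch” is beyond suffix rules and usually needs a dictionary-based lemmatizer.

```python
VOWELS = set("aeiou")

def toy_stem(word: str) -> str:
    """Hypothetical toy stemmer: strip '-ing' or '-ed', then restore a final 'e'
    when the stem ends consonant-vowel-consonant (e.g. 'star' -> 'stare')."""
    for suffix in ("ing", "ed"):
        stem = word[: -len(suffix)]
        if word.endswith(suffix) and len(stem) >= 3:
            last3 = stem[-3:]
            if (last3[0] not in VOWELS and last3[1] in VOWELS
                    and last3[2] not in VOWELS and last3[2] not in "wxy"):
                stem += "e"
            return stem
    return word

s1 = "sunset came i was staring at the mexico sunset isnt nature dope af"
s2 = "sunset came i stared at the mexico sunset isnt nature dope af"
stemmed1 = [toy_stem(w) for w in s1.split()]
stemmed2 = [toy_stem(w) for w in s2.split()]

print(toy_stem("staring"), toy_stem("stared"), toy_stem("started"))  # stare stare start
print(" ".join(stemmed1))  # sunset came i was stare at the mexico sunset isnt nature dope af
print(" ".join(stemmed2))  # sunset came i stare at the mexico sunset isnt nature dope af
```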

Text splitting (tokenization)

A “token” is just a unit of text your model will treat as one symbol: it could be a word, a subword fragment, a character, or even a byte. Choosing the right unit matters because it controls vocabulary size, how often you hit “unknown” tokens, and how much context your model sees at once.
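To see how the choice of unit changes the numbers, here is a small sketch that cuts one sentence three ways: by whitespace into words, into Unicode characters, and into UTF-8 bytes. The sentence is borrowed from the running example, and the point is the trend rather than the exact counts. Finer units give longer sequences (so less text fits in a fixed context), but a bounded vocabulary: a byte-level vocabulary can never exceed 256 entries, while a word vocabulary keeps growing with the corpus.

```python
sentence = "sunset came i stared at the m\u00e9xico sunset"

# Three ways to cut the same text into tokens
word_tokens = sentence.split()                 # word-level
char_tokens = list(sentence)                   # character-level (spaces included)
byte_tokens = list(sentence.encode("utf-8"))   # byte-level: integers 0-255

for name, tokens in [("words", word_tokens), ("chars", char_tokens), ("bytes", byte_tokens)]:
    print(f"{name}: {len(tokens)} tokens, {len(set(tokens))} distinct")

# "é" is one character but two UTF-8 bytes
print(len("m\u00e9xico"), len("m\u00e9xico".encode("utf-8")))  # 6 7
```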

Let’s reuse the two standardized sentences from before:

sunset came i was staring at the mexico sunset isnt nature dope af

sunset came i stared at the méxico sunset isn’t nature dope af

The simplest splitter: whitespace

If we split on spaces, we get word-like units:

["sunset","came","i","was","staring","at","the","mexico","sunset","isnt","nature","dope","af"]

["sunset","came","i","stared","at","the","méxico","sunset","isn’t","nature","dope","af"]

This is fast and easy to reason about. The downside is obvious: tiny spelling or accent differences create different tokens (“mexico” vs “méxico”, “isnt” vs “isn’t”), and you’ll see lots of rare words that the model has to memorize.
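A quick way to see that downside is to split both sentences with Python's built-in `str.split()` and list the tokens that don't match. Only “was staring” versus “stared” reflects a real difference in wording; the other mismatches are encoding noise.

```python
s1 = "sunset came i was staring at the mexico sunset isnt nature dope af"
s2 = "sunset came i stared at the m\u00e9xico sunset isn\u2019t nature dope af"

# str.split() with no argument splits on any run of whitespace
tokens1 = s1.split()
tokens2 = s2.split()

only_in_1 = sorted(set(tokens1) - set(tokens2))
only_in_2 = sorted(set(tokens2) - set(tokens1))
print(only_in_1)  # ['isnt', 'mexico', 'staring', 'was']
print(only_in_2)  # ['isn’t', 'méxico', 'stared']
```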

Punctuation-aware word tokenization

A small step up is to split off punctuation and normalize contractions in a consistent way. For example, you might map curly apostrophes to straight ones, then either split isn’t into isn + ' + t or keep isn’t as a single token, depending on your rules. The goal is to make “isn’t” and “isnt” line up, or at least get closer than before. This reduces accidental sparsity without throwing away signal.
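Both options can be sketched with Python's standard `re` module. The apostrophe mapping, the function names and the two regular expressions below are assumptions for illustration, not a standard tokenizer: the first splits every punctuation mark into its own token (isn + ' + t), while the second keeps word-internal apostrophes so a contraction stays a single token.

```python
import re

# Assumed normalization: map typographic single quotes to the ASCII apostrophe
APOSTROPHES = str.maketrans({"\u2019": "'", "\u2018": "'"})

def split_punct_tokenize(text: str) -> list[str]:
    """Runs of word characters become tokens; every other non-space character is its own token."""
    return re.findall(r"\w+|[^\w\s]", text.translate(APOSTROPHES))

def keep_contractions_tokenize(text: str) -> list[str]:
    """Like split_punct_tokenize, but apostrophes inside a word stay attached."""
    return re.findall(r"\w+(?:'\w+)*|[^\w\s]", text.translate(APOSTROPHES))

sentence = "isn\u2019t nature dope af?"
print(split_punct_tokenize(sentence))        # ['isn', "'", 't', 'nature', 'dope', 'af', '?']
print(keep_contractions_tokenize(sentence))  # ["isn't", 'nature', 'dope', 'af', '?']
```

Either way, the curly and straight apostrophe spellings now produce the same tokens, which is the kind of accidental sparsity this step is meant to remove.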

Voila! Now we know how to get our data ready. In the next post, we’ll run an actual NLP task with deep learning.
