Introduction To Stable Diffusion

Written by: BowTiedMourningDove. Focuses on Stable Diffusion, and how to perform fine tuning.

I was going to cover Neural Networks more, after the “How to Decensor Anime Girls” application of Convolutional Neural Networks. However, since I only work with Neural Networks here and there for niche tasks, I’d rather you guys learn from a guy who works with them on a daily basis, and has alpha you can deploy.

BowTiedMourningDove focuses primarily on Deep Learning.

Table of Contents

BowTiedMourningDove

Stable Diffusion

Fine Tuning

1 - BowTiedMourningDove

Hello! I am BowTiedMourningDove. I currently work in MLOps for a generative AI startup and aim to become a bona fide MLE as well. I handle the parts of the ML pipeline that focus on training neural networks. I also research AI trends and the academic literature.

As I scale into this role, the plan is for me to take ownership of the pipeline, and continue growing it. This pipeline is as follows:

Data Sourcing

Data Gathering

Data Wrangling

Dataset Buildout

Data Processing

Training

Model Upload (Repo construction)

Endpoint Creation

Deploying to Production

I did not study this at all in college (I made the mistake of studying the “social sciences”) or go to graduate school at all. I am self-taught in everything about computer science, and mathematical concepts related to AI/ML.

2 - Stable Diffusion

2.1 Latent Diffusion Model

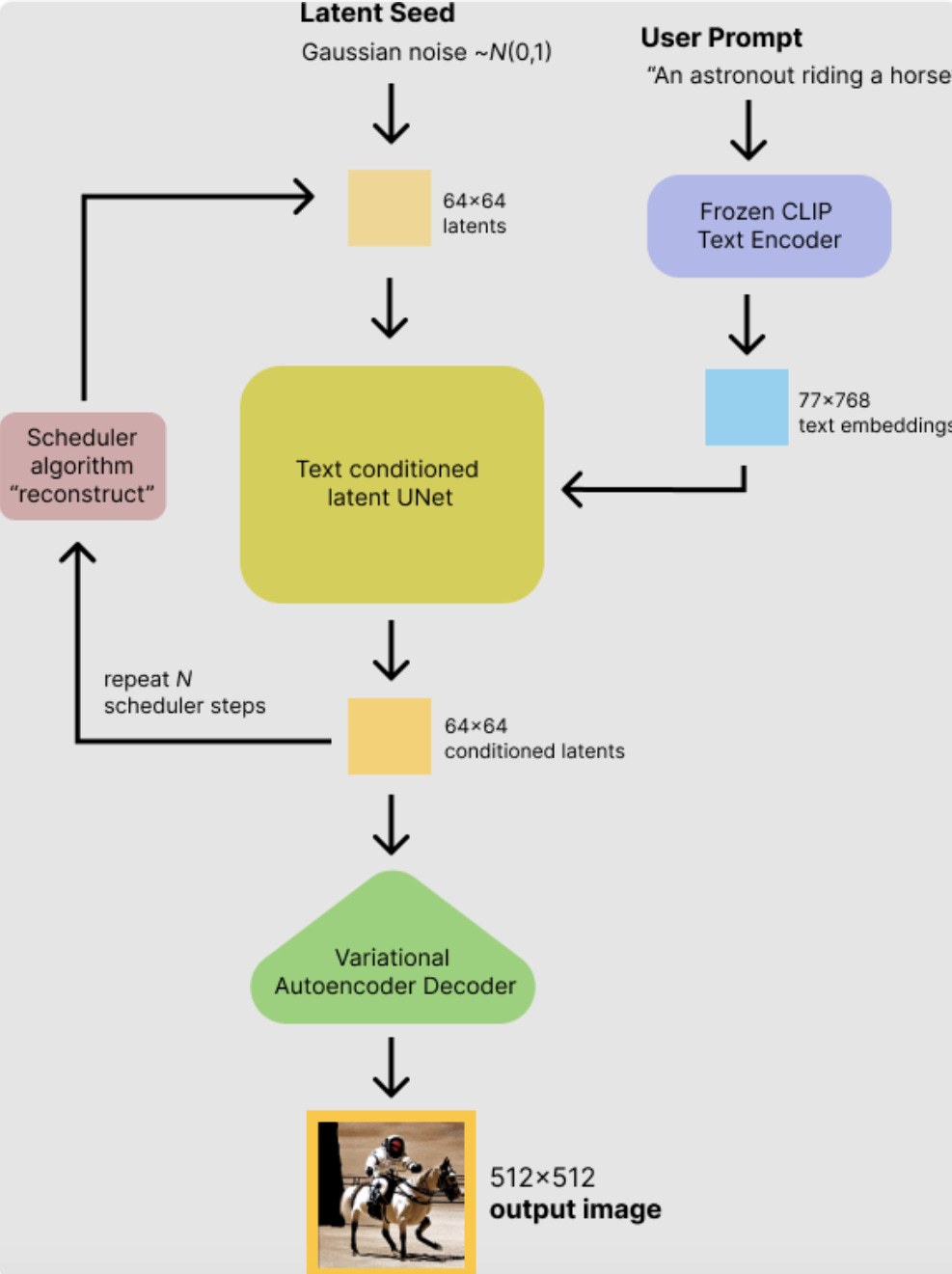

I will now introduce Stable Diffusion - the main generative AI system I work with on a daily basis. Stable Diffusion is a collection of neural networks that are woven together to produce novel images. Meaning, given a text prompt, the model's architecture generates a fresh, new image. Here is a diagram of its architecture:

There is a ton going on here, and I will not break down this entire diagram. The original LDM paper (first posted in December 2021 and revised in April 2022) does that better than I can. There is a great deal of complex mathematics that lies at the core of each piece of this system - not just linear algebra.

A graduate-level understanding of mathematics is not necessary to understand what is going on here and to work with it. At a very high level, what this diagram shows is a text-image pair being tokenized and encoded, in latent space, for the model to learn from.

You can see an example of a text-image pair below:

The text-image pair moves from pixel space into latent space, where the U-Net performs convolutions to remove noise. An image is then decoded on the other end, back into pixel space. Since the diffusion process takes place in latent space (a compressed, hidden representation), this whole operation is known as “Latent Diffusion”, making Stable Diffusion a Latent Diffusion Model (LDM).
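To make the move from pixel space to latent space concrete, here is a minimal sketch of the shape arithmetic. It assumes the commonly cited Stable Diffusion v1 defaults (a 512x512 RGB image, 8x spatial downsampling, 4 latent channels); the function names are hypothetical and other model versions may use different values.

```python
# Shape arithmetic for moving between pixel space and latent space.
# Assumed values: 8x spatial downsampling and 4 latent channels (SD v1 defaults).

def latent_shape(height, width, downsample=8, latent_channels=4):
    """Return (channels, height, width) of the latent for an RGB image."""
    if height % downsample or width % downsample:
        raise ValueError("height and width must be divisible by the downsampling factor")
    return (latent_channels, height // downsample, width // downsample)


def num_values(shape):
    """Count how many numbers a tensor of the given shape holds."""
    total = 1
    for dim in shape:
        total *= dim
    return total


pixel = (3, 512, 512)            # RGB image in pixel space
latent = latent_shape(512, 512)  # what the U-Net actually works on
ratio = num_values(pixel) / num_values(latent)

print("latent shape:", latent)
print("pixel-to-latent size ratio:", ratio)
```

Under these assumptions the U-Net works on far fewer values than the raw image holds, which is a large part of why doing diffusion in latent space is cheaper than doing it on pixels.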

2.2 Stable Diffusion

Here are the model files for Stable Diffusion, from the StabilityAI HuggingFace repo:

As you can see, we have a bunch of folders and some individual files down below. The folders are what we will focus on for now, as those are what hold the neural nets - “vae”, “unet”, and “text_encoder”. The VAE (variational autoencoder) handles the encoding and decoding of the image into and from latent space. The Text Encoder (a model known as CLIP) turns the text into embeddings, which condition the denoising of the image in latent space. Finally, the U-Net, a convolutional neural network, is responsible for removing noise in latent space. The removal of noise in latent space is what delivers a synthesized image.
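As a quick reference for the folders described above, here is a small hand-written lookup table. It is a sketch, not something read from the repo: the class names are the ones the `diffusers` and `transformers` libraries use for these components in the v1 releases, the scheduler class in particular differs between releases, and the `has_weights` flag and role descriptions are labels added for illustration.

```python
# Hand-written summary of the main repo folders (not loaded from the repo).
# Each entry: folder -> (library, class name, has trained weights?, role).
COMPONENTS = {
    "vae": ("diffusers", "AutoencoderKL", True,
            "encodes images into latents and decodes latents into images"),
    "text_encoder": ("transformers", "CLIPTextModel", True,
                     "turns tokenized prompts into text embeddings"),
    "unet": ("diffusers", "UNet2DConditionModel", True,
             "predicts the noise to remove from a latent"),
    "tokenizer": ("transformers", "CLIPTokenizer", False,
                  "splits the prompt into token ids"),
    "scheduler": ("diffusers", "PNDMScheduler", False,
                  "decides how much noise to remove at each step"),
}


def neural_nets(components):
    """Return the folder names that hold trained neural network weights."""
    return sorted(name for name, entry in components.items() if entry[2])


for folder in neural_nets(COMPONENTS):
    library, cls, _, role = COMPONENTS[folder]
    print(f"{folder}: {library}.{cls} - {role}")
```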

This is a high level overview of how the model functions.

You can even watch this video below for more details:

What I described about Stable Diffusion is for the training process. It needs to see text paired with images to develop an understanding of the relationship between text and images. This lets it figure out what exactly is in each image. The training process is the model learning a text-image pair and then guessing how much noise needs to be removed to produce that image.

This training process occurs along a Markov chain. The forward diffusion process adds noise to the image step by step, and the model learns to reverse that noise. The step-loss during training reflects how accurately the model predicts the noise that has to be removed to produce the correct image. This is validated against the test set of data.
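The noising and loss described above can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not the actual training code: it uses the linear noise schedule from the DDPM paper (Stable Diffusion's own schedule values differ), a 16-number list stands in for a latent, and there is no neural network, only the loss that a network's noise prediction would be scored with.

```python
import math
import random


def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances (DDPM-style linear schedule, assumed values)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t: the fraction of signal left."""
    out, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        out.append(running)
    return out


def add_noise(x0, noise, alpha_bar_t):
    """Forward diffusion in closed form: jump straight from x0 to the noisy x_t."""
    a = math.sqrt(alpha_bar_t)
    b = math.sqrt(1.0 - alpha_bar_t)
    return [a * x + b * n for x, n in zip(x0, noise)]


def mse(pred, target):
    """Mean squared error between predicted noise and the noise actually added."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_betas(1000))

x0 = [rng.uniform(-1, 1) for _ in range(16)]   # stand-in for a clean latent
noise = [rng.gauss(0, 1) for _ in range(16)]   # the noise we add
t = rng.randrange(1000)                        # random timestep, as in training
x_t = add_noise(x0, noise, alpha_bars[t])      # what the model would be shown

perfect_loss = mse(noise, noise)               # a model that guesses the noise exactly
zero_guess_loss = mse([0.0] * 16, noise)       # a model that always predicts "no noise"
print(perfect_loss, zero_guess_loss)
```

The closer the model's guess is to the noise that was actually added, the lower the step-loss, which is the signal training pushes on.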

2.3 Inference

You can watch this video below if you need a refresher on Markov Chains.

Inference is the process of a user inputting fresh text to get a brand new result from the model. Inference does not deal with text-image pairs like training does. Inference is the model inferring what a result should be based on probabilities derived from the dataset it was trained on.

The model does “inference” during training too, but in a different context. It is learning at the same time, and again, has a text-image pair to condition on. During inference there is no pair, only the text prompt and then the brand new generated image. A large part of the complex mathematics I referenced earlier is probability theory and, in the case of LDMs, Markov chains.

Here is a straightforward diagram of the model logic during inference:
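The same inference logic can be sketched as a toy loop: start from pure noise, repeatedly ask a noise predictor what to remove, and step toward a clean result. Everything here is an assumption for illustration. The `make_oracle` function is a hypothetical stand-in for the trained U-Net plus text conditioning (it cheats by knowing the target), the update rule is a deterministic DDIM-style step rather than whichever scheduler a given Stable Diffusion release ships with, and a 4-number list stands in for a latent. There is no text encoder or VAE decode in this sketch.

```python
import math
import random


def alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal fraction per timestep (DDPM-style linear schedule, assumed)."""
    bars, running = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        running *= 1.0 - beta
        bars.append(running)
    return bars


def make_oracle(target):
    """Hypothetical stand-in for the U-Net: returns the exact noise separating x_t from target."""
    def predict_noise(x_t, alpha_bar_t):
        a, b = math.sqrt(alpha_bar_t), math.sqrt(1.0 - alpha_bar_t)
        return [(x - a * y) / b for x, y in zip(x_t, target)]
    return predict_noise


def ddim_step(x_t, eps, ab_t, ab_prev):
    """One deterministic DDIM-style update from noise level ab_t to ab_prev."""
    x0_pred = [(x - math.sqrt(1.0 - ab_t) * e) / math.sqrt(ab_t) for x, e in zip(x_t, eps)]
    return [math.sqrt(ab_prev) * x0 + math.sqrt(1.0 - ab_prev) * e
            for x0, e in zip(x0_pred, eps)]


def generate(predict_noise, size, bars, num_inference_steps=50, seed=0):
    """Start from random noise and denoise over a reduced set of timesteps."""
    rng = random.Random(seed)
    x = [rng.gauss(0, 1) for _ in range(size)]           # pure noise in "latent space"
    stride = len(bars) // num_inference_steps
    timesteps = list(range(len(bars) - 1, -1, -stride))  # e.g. 999, 979, ..., 19
    for i, t in enumerate(timesteps):
        # After the last timestep we land on the clean sample (alpha_bar = 1).
        ab_prev = bars[timesteps[i + 1]] if i + 1 < len(timesteps) else 1.0
        eps = predict_noise(x, bars[t])
        x = ddim_step(x, eps, bars[t], ab_prev)
    return x


target = [0.5, -0.25, 0.0, 1.0]  # what the "prompt" is supposed to produce
result = generate(make_oracle(target), len(target), alpha_bars(1000))
print(result)
```

With a perfect noise predictor the loop lands on the target. A real model only approximates the noise, which is where botched details and ignored prompts come from.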

3 - Fine Tuning

Stable Diffusion is not perfect. It can make serious errors, for example:

Ignoring the user prompt

Having issues understanding parts of a prompt

Completely botching details of an image/setting

Something that can be done to overcome limitations of the model is to perform “fine-tuning”. This is a training process that can be executed with a few lines of code. If you want Stable Diffusion to be better at generating television screens, you would get a bunch of text-image pairs of television screens and fine-tune Stable Diffusion on this data so it can be better at generating TV screens.

Let’s say you write a blog on electronics and need stock photos for your site - you heard about Stable Diffusion. Instead of getting generic images from the internet, you can get Stable Diffusion to make cool photos of electronics - but you notice it can’t do TV screens that well. You could fine-tune on TV screens like I mentioned and get better, business ready results immediately for the photos you want to put on your blog.

Fine-tuning requires access to computational resources and a proprietary dataset. The fine-tuning process is quite easy to do with HuggingFace code, APIs, and all their modules/packages/libraries. They released capabilities with JavaScript and TypeScript as I was writing this post, but all this work is done with Python. There may be some capability built out for the C family of languages, but I am uncertain.

I work with Python.

For fine-tuning Stable Diffusion, it comes down to loading a unique dataset like this (there are many ways to do it, but this is a straightforward way for completing the necessary steps locally):

from datasets import load_dataset

dataset = load_dataset("imagefolder", data_dir="/path/to/folder", drop_labels=False)

Then, upload the proper training script and run a training session (in this manner) with the following hyperparameters:

export MODEL_NAME="CompVis/stable-diffusion-v1-4"
export TRAIN_DIR="path_to_your_dataset"
export OUTPUT_DIR="path_to_save_model"

accelerate launch train_text_to_image.py \
  --pretrained_model_name_or_path=$MODEL_NAME \
  --train_data_dir=$TRAIN_DIR \
  --use_ema \
  --resolution=512 --center_crop --random_flip \
  --train_batch_size=1 \
  --gradient_accumulation_steps=4 \
  --gradient_checkpointing \
  --mixed_precision="fp16" \
  --max_train_steps=15000 \
  --learning_rate=1e-05 \
  --max_grad_norm=1 \
  --lr_scheduler="constant" --lr_warmup_steps=0 \
  --output_dir=${OUTPUT_DIR}

This is a broad overview of fine-tuning. There are many other steps and configurations involved - an entire series of posts could be written on fine-tuning. But, these two pieces are the core: 1) load a dataset 2) run the fine-tune with the proper training script and hyperparameters on a SD model. HuggingFace is completely open source so all the training scripts to use are there.
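For the dataset-loading step, the "imagefolder" loader needs a way to pair each image with its caption. One common way is a `metadata.jsonl` file placed next to the images, with a `file_name` field plus a caption column. Below is a minimal sketch of writing that file. The helper name, the file names, and the captions are hypothetical, and the `text` column name is an assumption about what the training script expects by default. The image files themselves must also exist in the folder before the dataset will load.

```python
import json
import tempfile
from pathlib import Path


def write_metadata(folder, captions, caption_key="text"):
    """Write a metadata.jsonl that maps each image file name to its caption."""
    path = Path(folder) / "metadata.jsonl"
    with path.open("w", encoding="utf-8") as f:
        for file_name, caption in sorted(captions.items()):
            row = {"file_name": file_name, caption_key: caption}
            f.write(json.dumps(row) + "\n")  # one JSON object per line
    return path


# Hypothetical text-image pairs for the TV screen example.
captions = {
    "tv_001.png": "a flat-screen television on a wooden stand",
    "tv_002.png": "a wall-mounted television showing a football game",
}

# A temporary folder stands in for the real dataset directory.
with tempfile.TemporaryDirectory() as tmp:
    meta = write_metadata(tmp, captions)
    rows = [json.loads(line) for line in meta.read_text(encoding="utf-8").splitlines()]

print(rows)
```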

Fine-tuning is far less resource intensive than training a model like Stable Diffusion from scratch, which is cost-prohibitive. It cost about $600k to train Stable Diffusion and it took a while (about 150k GPU hours). The average data scientist cannot train a model the size of Stable Diffusion in this fashion, but a data scientist could fine-tune SD2 on a valuable dataset for their use case in a matter of hours.
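To put the numbers from this section side by side, here is some back-of-the-envelope arithmetic. The hyperparameters and the training cost figures come from the text above. The single-GPU setup, the 8 GPU-hour fine-tune, and the idea that the same hourly rate applies to your hardware are assumptions for illustration only.

```python
# Hyperparameters from the fine-tuning command above (single GPU assumed).
train_batch_size = 1
gradient_accumulation_steps = 4
max_train_steps = 15_000

# Gradients from 4 batches of 1 are accumulated before each optimizer update.
effective_batch = train_batch_size * gradient_accumulation_steps
samples_seen = effective_batch * max_train_steps

# Full training figures quoted in the text.
full_training_cost_usd = 600_000
full_training_gpu_hours = 150_000
implied_rate = full_training_cost_usd / full_training_gpu_hours  # USD per GPU hour


def finetune_cost(gpu_hours, usd_per_gpu_hour=implied_rate):
    """Rough cost of a fine-tune, assuming the same hourly GPU rate applies."""
    return gpu_hours * usd_per_gpu_hour


print("effective batch size:", effective_batch)           # 4
print("training samples seen:", samples_seen)              # 60000
print("implied USD per GPU hour:", implied_rate)           # 4.0
print("hypothetical 8 GPU-hour fine-tune:", finetune_cost(8))  # 32.0
```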

Those are all of the guest posts I have for now.

Will be going back to finishing up SQL, then moving onto Data Skills.

Excellent post gentlemen. Well done.