MLOps 14: Deployment on Azure, with BowTiedAzure

MS Azure & CLI, Deployment with GitHub Actions, Azure Machine Learning Service

MS Azure is popular, and if you are working for a company that is heavy on the Microsoft suite of products, the odds are you’ll be doing deployment on MS Azure. Luckily, we have BowTiedAzure here to help out.

Table of Contents

MS Azure & CLI

Deployment with GitHub Actions

Azure Machine Learning Service

1 - MS Azure & CLI

Microsoft Azure is a cloud computing service created by Microsoft for building, testing, deploying, and managing applications and services through Microsoft-managed data centers. It provides a range of cloud services, including those for computing, analytics, storage, and networking. In this section, we'll explore the initial steps to get started with Azure for deploying Machine Learning (ML) models.

1.1 Understanding MS Azure

Azure offers a wide range of cloud services, supporting various programming languages, frameworks, and tools, including both Microsoft-specific and third-party software and systems. For companies involved in MLOps, Azure provides robust, scalable cloud infrastructure, powerful data analytics, and machine learning services that can be leveraged to deploy ML models efficiently.

While Google Cloud and other services offer similar functionalities, there are several reasons why companies might prefer Azure:

Integration with Microsoft Products: For companies already using Microsoft products like Office 365 or Windows Server, Azure provides seamless integration, making it a convenient option.

Hybrid Capabilities: Azure offers strong support for hybrid cloud environments. This is beneficial for companies looking to leverage both on-premises and cloud resources.

Enterprise Focus: Azure has a strong emphasis on enterprise needs, offering a broad set of compliance offerings, integrated security tools, and industry-specific solutions.

AI and ML Services: Azure provides comprehensive tools and services for AI and machine learning, making it an attractive platform for deploying ML models.

1.2 Azure CLI

The Azure Command-Line Interface (Azure CLI) is a set of commands for managing Azure resources. It lets you perform tasks in your Azure account from the command line or from scripts, which makes it a versatile tool for automating cloud workflows.

Here’s how to create an account and get started:

Step 1: Create an Azure Account

Visit the Azure website: Go to the Azure Portal.

Sign up for an account: Click on “Create a Microsoft Azure account” and follow the prompts. You'll need to provide some basic information and may need to verify your identity with a phone number.

Choose Your Subscription: Azure offers various subscription types, including pay-as-you-go and free options. Select one that fits your needs.

Step 2: Installing Azure CLI

Azure CLI can be installed on Windows, macOS, and Linux environments. Here's how you can install it:

Windows:

Download the MSI installer from the Azure CLI installation page.

Run the installer and follow the instructions.

macOS:

The easiest way to install Azure CLI on macOS is using Homebrew:

```shell
brew update && brew install azure-cli
```

Linux:

For most Linux distributions, you can install Azure CLI using the package manager. For Ubuntu/Debian:

```shell
curl -sL https://aka.ms/InstallAzureCLIDeb | sudo bash
```

Step 3: Log in via Azure CLI

Once installed, open your command line or terminal and log in to your Azure account:

```shell
az login
```

This command opens a browser window where you can sign in with your Azure credentials.
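Because the CLI is scriptable, you can also drive it from Python. The sketch below assumes `az` is on your `PATH` and that you have already run `az login`; it calls `az account show --output json` and pulls out the active subscription. The helper names `run_az` and `summarize_account` are hypothetical; only the `az` command and the `id`, `name`, and `tenantId` fields of its JSON output come from the Azure CLI.

```python
import json
import shutil
import subprocess


def run_az(*args):
    """Run an Azure CLI command and return its parsed JSON output."""
    az = shutil.which("az")  # on Windows this also resolves az.cmd
    if az is None:
        raise RuntimeError("Azure CLI not found on PATH; install it first")
    result = subprocess.run(
        [az, *args, "--output", "json"],
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if az exits non-zero
    )
    return json.loads(result.stdout)


def summarize_account(account):
    """Pick the fields most scripts need from `az account show` output."""
    return {
        "subscription_id": account["id"],
        "subscription_name": account["name"],
        "tenant_id": account["tenantId"],
    }
```

Usage, after logging in: `print(summarize_account(run_az("account", "show")))`. Keeping the parsing separate from the subprocess call makes it easy to reuse `run_az` for other commands such as `run_az("acr", "list")`.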

2 - Deployment with GitHub Actions

2.1 GitHub Actions

In the rapidly advancing field of MLOps, automating the deployment process is crucial for efficiency and scalability. GitHub Actions, in tandem with Microsoft Azure, offers a powerful way to automate the deployment of machine learning models. This section walks through using GitHub Actions to deploy an ML model to Azure and covers each necessary component in detail.

GitHub Actions is a CI/CD platform that automates your build, test, and deployment pipelines directly within your GitHub repository. It lets you create workflows that are triggered by GitHub events such as pushes, pull requests, or issues.

2.2 Azure Container Registry (ACR)

Azure Container Registry (ACR) is a managed Docker registry service based on the open-source Docker Registry 2.0. It's used for storing and managing private Docker container images. This is especially useful in environments where deploying from a public registry isn't suitable.

2.3 Deploying an ML model on MS Azure with GitHub Actions

Creating an ML application for deployment involves several key files, each serving a specific purpose. Below, we outline these components with sample code and show how they fit together into a deployable ML application.

1 - Preparing your ML application

main.py: FastAPI Application

This is your primary application file. Let’s assume you have a simple, already trained ML model, saved as model.joblib, that makes predictions from two input features.

```python
from fastapi import FastAPI, HTTPException
from pydantic import BaseModel
import joblib

# Load your trained model once, when the app starts
model = joblib.load("model.joblib")


# Define a data model for input data validation
class InputData(BaseModel):
    feature1: float
    feature2: float


app = FastAPI()


@app.post("/predict")
def make_prediction(data: InputData):
    # A plain `def` lets FastAPI run the CPU-bound predict call in a worker
    # thread instead of blocking the event loop.
    try:
        prediction = model.predict([[data.feature1, data.feature2]])
        # .tolist() turns NumPy scalars (e.g. int64) into JSON-serializable Python types
        return {"prediction": prediction.tolist()[0]}
    except Exception as e:
        raise HTTPException(status_code=500, detail=str(e))
```

In this main.py, we define a FastAPI application with a /predict endpoint that expects data in a specific format and uses the loaded ML model to make a prediction.
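To call the endpoint from another Python program, a standard-library client is enough. This is a minimal sketch: `BASE_URL` is a hypothetical address (adjust it to wherever your container is reachable), and the JSON body simply mirrors the `InputData` model above.

```python
import json
import urllib.request

# Hypothetical base URL: point it at wherever the container is reachable.
BASE_URL = "http://localhost:80"


def build_predict_request(feature1, feature2, base_url=BASE_URL):
    """Build a POST request whose JSON body matches the InputData model."""
    body = json.dumps({"feature1": feature1, "feature2": feature2}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/predict",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(feature1, feature2, base_url=BASE_URL, timeout=10):
    """Send the request and return the "prediction" field of the response."""
    request = build_predict_request(feature1, feature2, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["prediction"]
```

With the app running locally, `predict(1.5, 2.5)` returns whatever your model predicts for those two features. Building the request separately from sending it keeps the payload format easy to check without a live server.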

requirements.txt: Python Dependencies

```text
fastapi==0.65.2
uvicorn==0.13.4
joblib==1.0.1
scikit-learn==0.24.2
```

This file lists all the dependencies required by your application, ensuring that these packages are installed in your deployment environment.
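Pinned versions only help if the environment actually matches them. A minimal sketch of a check, using the standard library's `importlib.metadata` (Python 3.8+); the helper names are hypothetical, and it only understands exact `name==version` pins, ignoring other specifiers, extras, and environment markers.

```python
from importlib.metadata import PackageNotFoundError, version


def parse_pins(text):
    """Return {package: version} for every `name==version` line; skip the rest."""
    pins = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if "==" in line:
            name, pinned = (part.strip() for part in line.split("==", 1))
            pins[name] = pinned
    return pins


def check_pins(pins):
    """Compare pins with what is installed; return a list of mismatch messages."""
    problems = []
    for name, pinned in pins.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            problems.append(f"{name}: not installed (want {pinned})")
            continue
        if installed != pinned:
            problems.append(f"{name}: installed {installed}, pinned {pinned}")
    return problems
```

For example, `check_pins(parse_pins(open("requirements.txt").read()))` run inside the container returns an empty list when everything matches.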

Dockerfile: Containerization of Your Application

```dockerfile
FROM python:3.8

WORKDIR /app

COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

COPY . .

CMD ["uvicorn", "main:app", "--host", "0.0.0.0", "--port", "80"]
```

The Dockerfile specifies how to build a Docker image for your application. It sets up the environment, installs dependencies, and determines what command to run when the container starts.

docker-compose.yml: Multi-Container Docker Setup

```yaml
version: '3.8'

services:
  web:
    build: .
    ports:
      - "80:80"
```

This file defines how your Docker containers should be built and run, including port mapping from the container to the host.
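In the short port syntax, `"80:80"` means HOST:CONTAINER: port 80 on your machine forwards to port 80 inside the container, where uvicorn listens. The hypothetical parser below spells out how Compose's short syntax splits; it is a sketch covering the common forms only, not Docker's own implementation, and it deliberately skips port ranges and IPv6 addresses.

```python
def parse_port_mapping(spec):
    """Parse a Compose short-syntax port string into its parts.

    Handles "CONTAINER", "HOST:CONTAINER" and "IP:HOST:CONTAINER",
    each with an optional "/protocol" suffix. Port ranges and IPv6
    host addresses are out of scope for this sketch.
    """
    spec, _, protocol = spec.partition("/")
    parts = spec.split(":")
    if len(parts) == 1:
        # Only a container port: Docker picks a free host port.
        host_ip, host_port, container_port = None, None, parts[0]
    elif len(parts) == 2:
        host_ip, (host_port, container_port) = None, parts
    elif len(parts) == 3:
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported port spec: {spec!r}")
    return {
        "host_ip": host_ip,
        "host_port": int(host_port) if host_port else None,
        "container_port": int(container_port),
        "protocol": protocol or "tcp",
    }
```

So `"8080:80"` would let you reach the same app at `http://localhost:8080` while it still listens on port 80 inside the container.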

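pytest.ini: Pytest Configuration

```ini
[pytest]
minversion = 7.0
addopts = -ra -q
# Make main.py (in the repository root) importable from the tests
pythonpath = .
testpaths =
    tests
```

This configuration file sets the pytest options and tells pytest to look for tests in the tests/ directory. The pythonpath option (available since pytest 7.0) adds the repository root to the import path so the tests can import main.py.

tests/test_main.py: Testing Your Application

```python
from fastapi.testclient import TestClient

from main import app

client = TestClient(app)


def test_read_predict():
    response = client.post("/predict", json={"feature1": 1.5, "feature2": 2.5})
    assert response.status_code == 200
    assert "prediction" in response.json()
```

Here, tests/test_main.py contains tests for your FastAPI application, ensuring that the /predict endpoint behaves as expected. By default pytest only collects files named test_*.py or *_test.py, which is why the file is not simply called test.py.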

start.sh: Script to Start the Application

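```sh
#!/bin/sh
# Start the FastAPI application; fall back to port 80 if PORT is not set
exec uvicorn main:app --host 0.0.0.0 --port "${PORT:-80}"
```

start.sh is a simple shell script that starts your FastAPI application. It is particularly useful in container environments where you need to configure the startup command, for example when the platform passes the port in the PORT environment variable. The exec replaces the shell with uvicorn, so uvicorn receives stop signals from the container runtime directly.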
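If you would rather keep the entrypoint in Python, the same logic fits in a few lines. This is a sketch under stated assumptions: the default port of 80 matches the Dockerfile, and the file name `start.py` and its helpers are hypothetical.

```python
import os


def resolve_port(env, default=80):
    """Read PORT from the environment, falling back to a default."""
    value = env.get("PORT", "").strip()
    if not value:
        return default
    port = int(value)
    if not 0 < port < 65536:
        raise ValueError(f"PORT out of range: {port}")
    return port


def uvicorn_argv(env):
    """Build the same uvicorn command start.sh runs, with the port resolved."""
    return ["uvicorn", "main:app", "--host", "0.0.0.0",
            "--port", str(resolve_port(env))]


def main():
    argv = uvicorn_argv(os.environ)
    # Replace this process with uvicorn so it receives container signals directly.
    os.execvp(argv[0], argv)
```

To use it, save it as `start.py`, add `if __name__ == "__main__": main()` at the bottom, and point the container's CMD at `python start.py`. Unlike the shell version, it rejects a malformed PORT with a clear error instead of passing it on to uvicorn.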

2 - Setting Up Azure Container Registry

Azure Container Registry (ACR) is an essential component in the deployment pipeline for hosting Docker container images. It's particularly useful for storing the images of your machine learning models and the corresponding application in a private, secure registry. Here's how to set it up:

Step-by-Step Guide to Setting Up ACR

Create an Azure Account: If you haven’t already, sign up for an Azure account at Azure Portal.

Navigate to the Container Registry Service:

Once logged in, go to the Azure portal dashboard.

Click on “Create a resource” and search for “Container Registry.”

Create a New Container Registry:

Click on “Create” in the Container Registry section.

Fill in the required details:

Subscription: Choose your Azure subscription.

Resource Group: Select an existing resource group or create a new one.

Registry Name: Choose a unique name for your container registry.

Location: Select the region closest to you or your users.

SKU: Choose a pricing tier (Basic, Standard, or Premium). Basic is sufficient for most small to medium applications.

Review and create the registry.

Configure the Registry:

Once the registry is created, go to its overview page.

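Enable the admin user if you want a registry username and password that Docker can authenticate with (handy for CI pipelines). For pushes from your own machine, az acr login with your Azure identity works without it.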

Note the login server name; it typically follows the pattern yourregistryname.azurecr.io.
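Because the login server is derived from the registry name, scripts can compute it instead of hardcoding it. A minimal sketch, assuming the naming rule as we understand it from the Azure documentation (5 to 50 alphanumeric characters) and the lowercase `<name>.azurecr.io` pattern noted above; check the current docs if your registry uses a different login server format.

```python
import re

# Assumed rule: registry names are 5-50 alphanumeric characters.
_ACR_NAME = re.compile(r"[A-Za-z0-9]{5,50}")


def acr_login_server(registry_name):
    """Return the usual login server for a registry name, e.g. myreg.azurecr.io."""
    if not _ACR_NAME.fullmatch(registry_name):
        raise ValueError("registry name must be 5-50 alphanumeric characters")
    return f"{registry_name.lower()}.azurecr.io"
```

For example, `acr_login_server("YourRegistryName")` gives `"yourregistryname.azurecr.io"`, while a name containing a hyphen is rejected before any Azure call is made.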

Authenticate with the Registry Locally:

To push images from your local machine, you need to authenticate with Azure CLI or Docker CLI.

Using Azure CLI:

```shell
az acr login --name yourregistryname
```

Pushing a Docker Image to the Registry:

After building your Docker image, tag it with the registry's login server name and push it:

```shell
docker tag myimage yourregistryname.azurecr.io/myimage:v1
docker push yourregistryname.azurecr.io/myimage:v1
```
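Tagging with the commit hash instead of a fixed `v1` makes every pushed image traceable to its source, which is also what the GitHub Actions workflow does with `github.sha`. A minimal sketch that wraps the same two docker commands; the helper names are hypothetical and it assumes `docker` and `git` are installed and that you are already logged in to the registry.

```python
import subprocess


def image_ref(login_server, repository, tag):
    """Compose a fully qualified image reference: <server>/<repo>:<tag>."""
    return f"{login_server}/{repository}:{tag}"


def tag_and_push_commands(local_image, login_server, repository, tag):
    """Return the docker CLI invocations for tagging and pushing one image."""
    target = image_ref(login_server, repository, tag)
    return [
        ["docker", "tag", local_image, target],
        ["docker", "push", target],
    ]


def current_git_sha():
    """Short SHA of HEAD, usable as an image tag."""
    out = subprocess.run(["git", "rev-parse", "--short", "HEAD"],
                         capture_output=True, text=True, check=True)
    return out.stdout.strip()


def tag_and_push(local_image, login_server, repository, tag):
    for cmd in tag_and_push_commands(local_image, login_server, repository, tag):
        subprocess.run(cmd, check=True)  # stop at the first failing command
```

A typical call is `tag_and_push("myimage", "yourregistryname.azurecr.io", "myimage", current_git_sha())`.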

3 - Creating GitHub Actions Workflow for MS Azure

GitHub Actions is an automation tool that enables you to set up CI/CD pipelines directly in your GitHub repository. In the context of deploying machine learning models, a GitHub Actions workflow can automate processes such as testing, building Docker images, pushing them to Azure Container Registry, and deploying to Azure App Service. Here’s how to set up a GitHub Actions workflow for your ML project.

Step-by-Step Guide to Setting Up the Workflow

Create Workflow Configuration File:

In your GitHub repository, create a directory named .github/workflows if it doesn't already exist. Create a new file in this directory, e.g., ml-deploy.yml. This file will contain the configuration for your workflow.

Define Workflow Triggers:

At the start of your ml-deploy.yml, define the events that should trigger the workflow. Common triggers include pushing to specific branches or creating a pull request. Example:

```yaml
name: ML Model CI/CD Pipeline

on:
  push:
    branches: [ main ]
  pull_request:
    branches: [ main ]
```

Define Jobs and Steps:

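Organize your workflow into jobs. Each job can have multiple steps that run commands such as testing your code, building a Docker image, or deploying to Azure. In this example, pull requests only run the tests, while pushes to main also log in to the registry, push the image, and deploy it.

Example structure:

```yaml
jobs:
  build-and-deploy:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout Repo
        uses: actions/checkout@v4

      - name: Set up Python
        uses: actions/setup-python@v5
        with:
          python-version: '3.8'

      - name: Install Dependencies
        run: |
          pip install -r requirements.txt
          # Test-only dependencies (TestClient in this FastAPI version needs requests)
          pip install pytest requests

      - name: Run Tests
        run: |
          pytest

      # The remaining steps only run on pushes to main, not on pull requests
      - name: Log in to Azure Container Registry
        if: github.event_name == 'push'
        uses: docker/login-action@v3
        with:
          registry: ${{ secrets.ACR_NAME }}
          username: ${{ secrets.ACR_USERNAME }}
          password: ${{ secrets.ACR_PASSWORD }}

      - name: Build and Push Docker image to Azure Container Registry
        if: github.event_name == 'push'
        run: |
          docker build -t ${{ secrets.ACR_NAME }}/myapp:${{ github.sha }} .
          docker push ${{ secrets.ACR_NAME }}/myapp:${{ github.sha }}

      - name: Deploy to Azure App Service
        if: github.event_name == 'push'
        uses: azure/webapps-deploy@v3
        with:
          app-name: 'my-web-app'
          slot-name: 'production'
          publish-profile: ${{ secrets.AZURE_WEBAPP_PUBLISH_PROFILE }}
          images: '${{ secrets.ACR_NAME }}/myapp:${{ github.sha }}'
```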

Configure Secrets for Azure:

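In your GitHub repository, go to Settings > Secrets and variables > Actions and add the necessary Azure credentials. These typically include the registry's login server (ACR_NAME, e.g. yourregistryname.azurecr.io, since the workflow uses it as the image prefix), registry credentials for docker login such as the admin username and password (stored, for example, as ACR_USERNAME and ACR_PASSWORD), and a publish profile for Azure App Service (AZURE_WEBAPP_PUBLISH_PROFILE). Secrets let you store this sensitive information securely while still making it available to your workflow.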

Test the Workflow:

Once you have committed the workflow file to your repository, any subsequent push or pull request (based on your defined triggers) will automatically initiate the workflow.

Monitor the workflow runs in the ‘Actions’ tab of your GitHub repository to ensure they execute successfully.
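You can also check run status programmatically through GitHub's REST API endpoint `GET /repos/{owner}/{repo}/actions/runs`. A minimal standard-library sketch; the owner and repository names in the usage line are placeholders, and a token is only needed for private repositories or higher rate limits.

```python
import json
import urllib.request

API = "https://api.github.com"


def runs_request(owner, repo, token=None, per_page=5):
    """Build a GET request for the repository's most recent workflow runs."""
    headers = {"Accept": "application/vnd.github+json"}
    if token:  # required for private repositories
        headers["Authorization"] = f"Bearer {token}"
    url = f"{API}/repos/{owner}/{repo}/actions/runs?per_page={per_page}"
    return urllib.request.Request(url, headers=headers)


def summarize_runs(payload):
    """Reduce the API response to (workflow name, branch, status, conclusion)."""
    return [
        (run["name"], run["head_branch"], run["status"], run["conclusion"])
        for run in payload.get("workflow_runs", [])
    ]


def fetch_runs(owner, repo, token=None):
    with urllib.request.urlopen(runs_request(owner, repo, token), timeout=10) as resp:
        return summarize_runs(json.loads(resp.read()))
```

For example, `fetch_runs("your-user", "your-ml-repo")` lists the latest runs; a `conclusion` of `None` means the run is still in progress.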

Congrats, you have now successfully deployed a Machine Learning Model on MS Azure.

3 - Azure Machine Learning Service

Azure Machine Learning (AML) is a fully managed, as-a-service offering on Azure for the complete MLOps lifecycle. Because it is delivered as a service, you do not have to install or manage the individual components of the machine learning lifecycle yourself. And because it covers the entire lifecycle, you can train, deploy, and manage your machine learning models without relying on additional software or services.

As an Azure service, it also integrates deeply with other Azure offerings.

How do you interact with Azure Machine Learning?

You can interact with AML in several ways:

Azure Machine Learning Studio – a web-based visual platform

Python SDK

Azure CLI

REST APIs

Which one you choose depends on your workflow preferences, the tools you are most skilled in, and in some cases what your application demands.

The studio is typically the easiest way to start working with AML. In the studio, you can run your code in Jupyter Notebooks, and there are even drag-and-drop options for deploying models without writing any code. The studio's visualizations also give you an easy way to see the outcomes and metrics of your project.

Getting Started with Azure Machine Learning

Before you can start, you need a workspace. Sign in directly to Azure Machine Learning Studio at https://ml.azure.com

If this is your first time there, you will need to enter some details to create a workspace; otherwise, you can open the workspaces that you or others have already created.

Next, you need to give yourself some compute. Click Notebooks in the left panel, then click the Create compute button. Picking the right compute for a project is a big subject, so for now just pick something cheap. You could also use serverless Spark pools here, but don’t worry about that unless you know you need Spark.

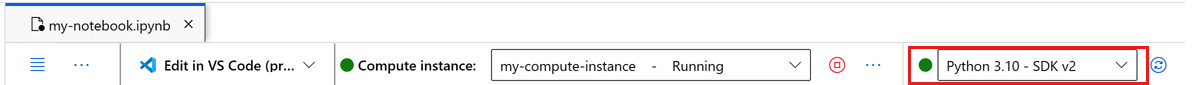

Once you have your compute, you can open a notebook to start your work. Before you begin writing code, make sure your compute is started.

Also, make sure you pick the correct kernel for your project.

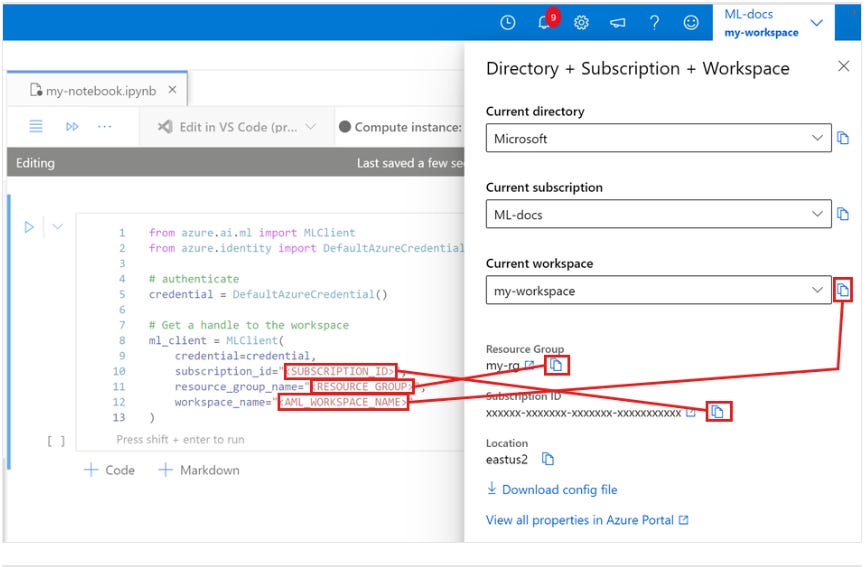

One last thing you will need to do is create a way to reference your workspace. Your workspace represents the top level of the hierarchy for AML. A quick way to do this:

```python
from azure.ai.ml import MLClient
from azure.identity import DefaultAzureCredential

# authenticate
credential = DefaultAzureCredential()

SUBSCRIPTION = "<SUBSCRIPTION_ID>"
RESOURCE_GROUP = "<RESOURCE_GROUP>"
WS_NAME = "<AML_WORKSPACE_NAME>"

# Get a handle to the workspace
ml_client = MLClient(
    credential=credential,
    subscription_id=SUBSCRIPTION,
    resource_group_name=RESOURCE_GROUP,
    workspace_name=WS_NAME,
)
```

You can get your SUBSCRIPTION_ID, RESOURCE_GROUP, and AML_WORKSPACE_NAME from here:

Test your handle like this to make sure it works:

```python
# Verify that the handle works correctly.
# If you get an error here, change your SUBSCRIPTION, RESOURCE_GROUP, and WS_NAME in the previous cell.
ws = ml_client.workspaces.get(WS_NAME)
print(ws.location, ":", ws.resource_group)
```

From here, you can start doing the machine learning you know and love in the Jupyter Notebooks in your workspace. AML supports the following languages and frameworks:

PyTorch

TensorFlow

scikit-learn

XGBoost

LightGBM

R

.NET

What else can you do with AML?

A LOT. Remember, this service is a full lifecycle management solution covering training, operations, and MLOps. Microsoft maintains extensive documentation on integrating AML into your operations, and it is well worth checking out.