MLOps 18: Monitoring with Prometheus & Grafana

Essential ML Monitoring Practices, Metrics to Monitor, Drifts in ML, Prometheus & Grafana, Using Prometheus & Grafana

At this point, we’ve covered basically every major way to deploy your ML models in the current meta. AKA, 99% of you reading this will use one of the deployment methods discussed in the previous few posts.

But, after deployment, the next thing we do is ensure that things are running smoothly. Let’s talk about it:

Table of Contents

Essential ML Monitoring Practices

Metrics to Monitor

Drifts in ML

Prometheus & Grafana

Using Prometheus & Grafana

1 - Essential ML Monitoring Practices

In Machine Learning Operations (MLOps), monitoring ensures the health and performance of ML models in production. Its value ranges from catching issues early to making sure the model's predictions stay explainable. Let's explore the fundamentals of effective ML monitoring.

1.1 Importance of Monitoring

Early Detection of Issues:

Monitoring allows for the early identification of issues, from model performance degradation to data pipeline failures. Early detection enables quicker responses, reducing the risk of significant impact on end-user experience or operational efficiency.

Resource Usage Tracking:

Keeping tabs on computational resources, such as CPU, memory, and storage utilization, is essential for cost-effective operations. Monitoring these metrics helps in optimizing resource allocation and scaling strategies.

Data Drift Detection:

Changes in data distribution over time, known as data drift, can adversely affect model performance. Monitoring for such changes is crucial to maintain the accuracy and reliability of ML models.

Explainability of Predictions:

As ML models increasingly influence decision-making, the ability to explain their predictions becomes vital, especially in sectors like healthcare and finance. Monitoring helps ensure models remain interpretable and their decisions justifiable.

1.2 Fundamentals of Good ML Monitoring

Functional Monitoring:

This involves monitoring the model's functional performance, such as accuracy, precision, recall, and F1-score. It ensures the model is performing as expected on the key metrics it was optimized for.

Operational Monitoring:

Focuses on the operational aspects of ML models in production, including monitoring system health, throughput, latency, and error rates. It's crucial for maintaining the overall health of the ML system.

Scalable Integration:

As ML systems scale, the monitoring system should seamlessly scale too. This requires a flexible and scalable integration of monitoring tools that can handle increasing loads and complexities.

Metrics Tracking and Storage:

Systematically tracking a wide array of metrics is essential. Storing these metrics in a structured manner, such as in a SQL database, enables deeper analysis and historical comparison.

Alert System:

An effective alert system is crucial for timely notifications about critical issues or anomalies detected in the ML system. This can range from automated email alerts to integration with incident management platforms.
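To make the "Metrics Tracking and Storage" point concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The table name `model_metrics`, its columns, and the helper functions are all hypothetical choices for illustration; a production setup would more likely use a managed SQL database or a time-series store.

```python
import sqlite3
import time


def open_metrics_store(path=":memory:"):
    """Open a SQLite database and create the (hypothetical) metrics table if needed."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS model_metrics (
               recorded_at   REAL NOT NULL,
               model_version TEXT NOT NULL,
               metric_name   TEXT NOT NULL,
               metric_value  REAL NOT NULL
           )"""
    )
    return conn


def record_metric(conn, model_version, name, value, recorded_at=None):
    """Insert one metric observation, timestamped with the current time by default."""
    ts = time.time() if recorded_at is None else recorded_at
    conn.execute(
        "INSERT INTO model_metrics VALUES (?, ?, ?, ?)",
        (ts, model_version, name, value),
    )
    conn.commit()


def metric_history(conn, name):
    """Return (model_version, value) pairs for one metric, oldest first."""
    rows = conn.execute(
        "SELECT model_version, metric_value FROM model_metrics "
        "WHERE metric_name = ? ORDER BY recorded_at",
        (name,),
    )
    return rows.fetchall()


# Example: compare accuracy across two model versions.
conn = open_metrics_store()
record_metric(conn, "v1", "accuracy", 0.91, recorded_at=1.0)
record_metric(conn, "v2", "accuracy", 0.93, recorded_at=2.0)
history = metric_history(conn, "accuracy")
```

Keeping the model version next to every value is what makes the historical comparison possible later on.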

2 - Metrics to Monitor

Effective monitoring in MLOps means keeping a close eye on a lot of metrics. These metrics provide important insights into the performance and health of the ML system, from the server level all the way to the model's predictions. Understanding what to monitor is key to maintaining the robustness and reliability of ML deployments. Here’s an overview of the essential metrics to monitor in MLOps.

2.1 Server Metrics

Server metrics are fundamental in ensuring that the underlying infrastructure supporting the ML model is performing optimally.

Resource Usage: This includes CPU and GPU utilization, memory usage, and disk I/O. High resource utilization might indicate a need for optimization or scaling.

Availability: Measures the uptime of the server or service. High availability is crucial for ensuring that the ML models are accessible when needed.

Latency: The time it takes for the server to process a request. In ML scenarios, this might involve the time taken for a model to make a prediction. Lower latency is generally desirable.

Throughput: The number of requests that can be processed within a given time frame. Monitoring throughput is essential for understanding how well the system is handling the load.
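As a rough illustration of the latency and throughput metrics above, the sketch below computes percentiles and requests per second from a list of recorded latencies. The sample values and the nearest-rank percentile method are assumptions made for this example; in practice these numbers usually come from your monitoring stack rather than hand-rolled code.

```python
import math


def percentile(values, q):
    """Nearest-rank percentile: the smallest value with at least q% of samples <= it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


def throughput(request_count, window_seconds):
    """Requests handled per second over an observation window."""
    return request_count / window_seconds


# Illustrative latencies (milliseconds) observed over a 5-second window.
latencies_ms = [12, 15, 11, 240, 14, 13, 16, 12, 18, 15]

p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
rps = throughput(len(latencies_ms), window_seconds=5)
```

Notice how the single slow request barely moves the median but dominates the 95th percentile. That's why tail percentiles are usually tracked alongside averages.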

2.2 Input Data Metrics

Monitoring the input data helps in ensuring that the model is receiving the right kind of data for making accurate predictions.

Model Version: Tracking which model version is currently in use can help in diagnosing issues and understanding performance changes.

Data Drift: Significant changes in data distribution compared to the training dataset can lead to reduced model accuracy. Monitoring for data drift helps in identifying when to retrain the model.

Outlier Detection: Identifying anomalous data points that differ significantly from the rest of the data. Outliers can adversely affect model performance if not handled properly.
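One simple way to flag outliers in incoming data is a z-score check against statistics from the training set. This is only a sketch: the threshold of 3 standard deviations and the sample values are assumptions, and z-scores work best for roughly bell-shaped features.

```python
import statistics


def find_outliers(training_values, incoming_values, z_threshold=3.0):
    """Return incoming values that lie more than z_threshold std devs from the training mean."""
    mean = statistics.mean(training_values)
    std = statistics.pstdev(training_values)
    if std == 0:
        # Degenerate case: the training feature was constant.
        return [x for x in incoming_values if x != mean]
    return [x for x in incoming_values if abs(x - mean) / std > z_threshold]


# Illustrative feature values seen during training vs. in production.
training_feature = [10, 12, 11, 13, 12, 11, 10, 13]
incoming_feature = [11, 12, 25, 9.5]

outliers = find_outliers(training_feature, incoming_feature)
```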

2.3 ML Model Metrics

Model-specific metrics provide insights into how well the ML model is performing its intended task.

Classification Metrics: For classification models, key metrics include accuracy, precision, recall, F1 score, and ROC-AUC. These metrics help in understanding the effectiveness of the model in classifying data.

Regression Metrics: For regression models, important metrics are mean absolute error (MAE), mean squared error (MSE), and R-squared. These metrics indicate how close the model's predictions are to the actual values.

Predictions Monitoring: Tracking the predictions being made by the model, especially for real-time inference scenarios. This can help in quickly identifying issues with model performance.

Prediction Drift: Similar to data drift, but focuses on changes in the model’s predictions over time. This can be an indicator of model degradation or shifts in the underlying data patterns.
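The classification and regression metrics listed above can be computed by hand in a few lines. The sketch below uses plain Python so the formulas are visible; the function names and sample labels are made up for illustration, and libraries like scikit-learn provide tested versions of the same metrics.

```python
def classification_report(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for a binary classification task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": correct / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def regression_report(y_true, y_pred):
    """MAE, MSE and R-squared for a regression task."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1 - (mse * n) / ss_tot if ss_tot else 0.0
    return {"mae": mae, "mse": mse, "r2": r2}


clf = classification_report([1, 0, 1, 1, 0, 1, 0, 0], [1, 0, 0, 1, 0, 1, 1, 0])
reg = regression_report([3.0, 5.0, 7.0, 9.0], [2.5, 5.0, 8.0, 9.5])
```

Keep in mind that all of these need ground-truth labels, which in production often arrive with a delay.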

3 - Drifts in ML

In MLOps, understanding and managing the different types of drift is pivotal for the long-term success and reliability of ML models in production. Drift refers to changes that occur over time in the data, the underlying concepts, or the model's outputs, and it can significantly affect the accuracy and relevance of ML models. This section explores the three main types of drift encountered in ML: Data Drift, Prediction Drift, and Concept Drift.

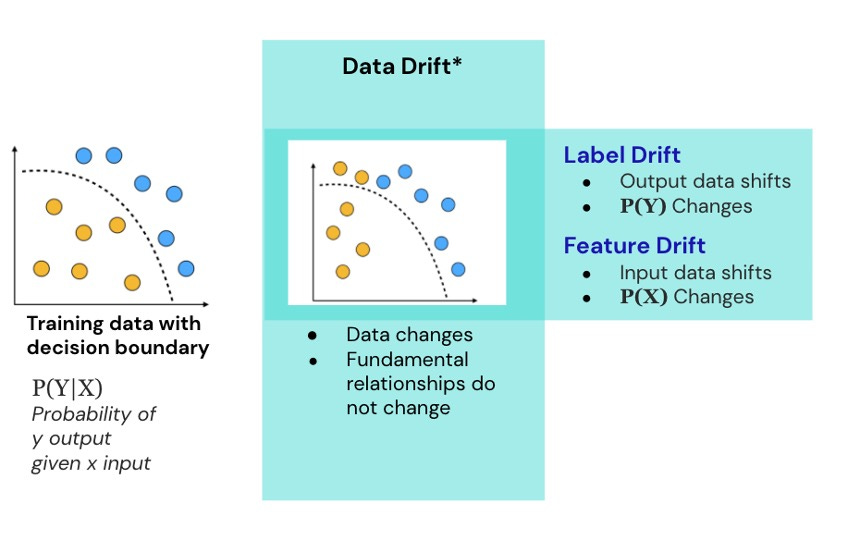

3.1 Data Drift

Data Drift occurs when the statistical properties of the model input data change over time. This can happen due to various factors like evolving trends, seasonal variations, or changes in user behavior.

Even if the model remains static, changes in input data characteristics can lead to decreased model accuracy and misaligned predictions. Regularly compare the distributions of incoming data with the distributions of the data on which the model was trained. This can be achieved through statistical tests or visualization techniques.

Continuous monitoring is key. When significant data drift is detected, consider retraining the model with more recent data to realign it with the current data trends.
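One of the statistical tests you could use for this comparison is the two-sample Kolmogorov-Smirnov (KS) test. The sketch below computes just the KS statistic (the largest gap between the two empirical CDFs) in plain Python. The drift threshold of 0.3 is an arbitrary assumption for illustration; a proper test would use a p-value, for example via `scipy.stats.ks_2samp`.

```python
from bisect import bisect_right


def ks_statistic(reference, current):
    """Largest absolute gap between the empirical CDFs of two samples (0 = identical, 1 = disjoint)."""
    ref = sorted(reference)
    cur = sorted(current)
    max_gap = 0.0
    # The maximum gap between two step functions occurs at one of the sample points.
    for x in ref + cur:
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_cur = bisect_right(cur, x) / len(cur)
        max_gap = max(max_gap, abs(cdf_ref - cdf_cur))
    return max_gap


def has_drifted(reference, current, threshold=0.3):
    """Flag drift when the KS statistic exceeds a (hypothetical) threshold."""
    return ks_statistic(reference, current) > threshold


# Illustrative feature values: training data vs. two production windows.
training_feature = [1, 2, 3, 4, 5]
same_window = [1, 2, 3, 4, 5]
shifted_window = [6, 7, 8, 9, 10]

ks_same = ks_statistic(training_feature, same_window)
ks_shifted = ks_statistic(training_feature, shifted_window)
```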

3.2 Prediction Drift

Prediction Drift refers to changes over time in the model's predictions, regardless of whether the input data has changed. This often highlights issues within the model itself or external factors influencing the predictions. It can lead to a gradual decline in model performance, as the predictions start deviating from the expected results.

Track the distribution of the model's outputs alongside key performance metrics like accuracy, precision, or recall. A shift in the output distribution or a decline in these metrics can indicate prediction drift. Then investigate the root causes. This could involve examining the model's feature weights, re-evaluating feature engineering, or updating the model with new training data.

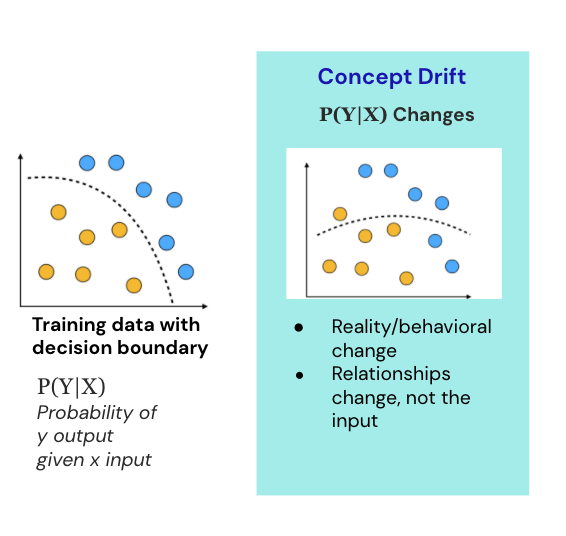
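A common way to quantify a shift in predictions is the Population Stability Index (PSI), computed here over the share of each predicted class. This is a sketch under assumptions: the class labels and samples are made up, and the usual cut-offs (below 0.1 is stable, above 0.25 is a significant shift) are rules of thumb rather than hard limits.

```python
import math
from collections import Counter


def class_shares(predictions, classes, floor=1e-6):
    """Share of each class among the predictions, floored to avoid log(0)."""
    counts = Counter(predictions)
    total = len(predictions)
    return {c: max(counts[c] / total, floor) for c in classes}


def population_stability_index(baseline, current):
    """PSI = sum over classes of (current - baseline) * ln(current / baseline)."""
    classes = sorted(set(baseline) | set(current))
    ref = class_shares(baseline, classes)
    cur = class_shares(current, classes)
    return sum((cur[c] - ref[c]) * math.log(cur[c] / ref[c]) for c in classes)


# Illustrative predicted labels from a spam classifier.
baseline_preds = ["ham"] * 50 + ["spam"] * 50
stable_preds = ["ham"] * 48 + ["spam"] * 52
shifted_preds = ["ham"] * 90 + ["spam"] * 10

psi_stable = population_stability_index(baseline_preds, stable_preds)
psi_shifted = population_stability_index(baseline_preds, shifted_preds)
```

The nice part is that this check needs no ground-truth labels, so it can run as soon as predictions are logged.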

3.3 Concept Drift

Concept Drift happens when the relationship between the input data and the target variable changes. Unlike data drift, where the input distribution changes, concept drift means the patterns the model learned no longer match reality, even if the inputs look the same. The model may become less accurate over time as its fundamental assumptions about the data relationships no longer hold true.

Detecting concept drift can be challenging, as it requires understanding the underlying data patterns and model behavior. Techniques like retraining models at regular intervals or using adaptive algorithms can help. Implement a system that can automatically trigger model retraining or adaptation when concept drift is suspected.
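As a minimal sketch of such a trigger, the class below watches the error rate over a rolling window of labelled predictions and raises a flag once it exceeds the baseline by some margin. The class name, window size, and tolerance are all hypothetical, and the approach assumes ground-truth labels eventually arrive. Dedicated drift detectors are more robust than this simple rule.

```python
from collections import deque


class RollingErrorMonitor:
    """Flags suspected concept drift when the recent error rate exceeds baseline + tolerance."""

    def __init__(self, baseline_error, window_size=50, tolerance=0.10):
        self.baseline_error = baseline_error
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window_size)  # True means the prediction was wrong

    def update(self, prediction, actual):
        """Record one labelled prediction; return True if retraining should be triggered."""
        self.outcomes.append(prediction != actual)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough evidence yet
        error_rate = sum(self.outcomes) / len(self.outcomes)
        return error_rate > self.baseline_error + self.tolerance


# Illustrative stream: 10 correct predictions, then the concept changes.
monitor = RollingErrorMonitor(baseline_error=0.1, window_size=10, tolerance=0.1)
flags = [monitor.update(prediction=1, actual=1) for _ in range(10)]
flags += [monitor.update(prediction=1, actual=0) for _ in range(5)]

# Index of the first observation at which the monitor would trigger retraining.
first_alarm = flags.index(True)
```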

4 - Prometheus & Grafana

4.1 What is Prometheus

Prometheus is an open-source monitoring and alerting toolkit, widely recognized for its efficiency in recording real-time metrics in a time-series database. It's designed for reliability and scalability, and it primarily caters to the monitoring of microservices and containers.

Key Features:

Multi-Dimensional Data Model: Prometheus stores data as time series, identified by metric name and key/value pairs, making it highly effective for time-series data monitoring.

Powerful Query Language: It provides a flexible query language, PromQL, that allows for the retrieval and manipulation of time-series data.

No Dependency on Distributed Storage: The local storage of Prometheus is sufficient for most use cases, simplifying its deployment.

Multiple Modes of Graphing and Dashboarding: Prometheus itself offers basic graphing capabilities, but it is often used in conjunction with more powerful visualization tools like Grafana.
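To give a feel for PromQL, the sketch below sends a query to the Prometheus HTTP API (`/api/v1/query`) using only the standard library. The query computes the average request duration over the last 5 minutes from the `request_processing_seconds` Summary used in section 5.1. The server URL `http://localhost:9090` is an assumption, and the helper names are made up for this example.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# PromQL: average time per request over the last 5 minutes.
AVG_LATENCY = (
    "rate(request_processing_seconds_sum[5m])"
    " / rate(request_processing_seconds_count[5m])"
)


def build_query_url(base_url, promql):
    """Build the URL for an instant query against the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def run_instant_query(base_url, promql, timeout=5):
    """Run the query and return the list of result series (needs a reachable Prometheus)."""
    with urlopen(build_query_url(base_url, promql), timeout=timeout) as response:
        payload = json.load(response)
    return payload["data"]["result"]


# Assumed address of a local Prometheus server.
url = build_query_url("http://localhost:9090", AVG_LATENCY)
# results = run_instant_query("http://localhost:9090", AVG_LATENCY)
```

The same PromQL expression can be pasted directly into the Prometheus web UI or a Grafana panel.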

4.2 What is Grafana

Grafana is an open-source platform for monitoring and observability. It provides rich options for dashboard creation and data visualization, and it complements Prometheus by interpreting and visualizing the data that Prometheus collects and stores.

Key Features:

Versatile Dashboards: Grafana offers customizable dashboards which can display a wide range of metrics, both from Prometheus and other data sources.

Advanced Visualization: It supports a variety of charts, graphs, and alerts, enabling users to create complex and informative visual representations of their data.

Alerting Functionality: Grafana can be configured to send alerts based on specific conditions in the monitored data.

Support for Multiple Data Sources: Beyond Prometheus, Grafana can integrate with a variety of data sources, making it a versatile tool for complex monitoring setups.

4.3 Why do we use them?

Here’s a quick summary of why we use them:

Real-Time Monitoring and Alerting: Prometheus and Grafana together offer the capability to monitor ML systems in real-time. They can track a wide range of metrics, from system performance (like CPU and memory usage) to more specific ML metrics (like throughput and latency of model predictions).

Customizable and In-Depth Insights: The combination allows for deep insights into the health of ML systems. Grafana’s visualization prowess, powered by Prometheus’s robust data collection, enables teams to pinpoint issues quickly and accurately.

Scalability and Flexibility: Both tools are designed to handle large-scale data, which is essential in MLOps as the data and model complexity grows. Their flexibility also means they can adapt to various monitoring requirements as ML systems evolve.

Enhanced Troubleshooting and Debugging: The detailed metrics and visualizations make it easier for MLOps teams to troubleshoot and debug issues. Teams can set up dashboards that specifically track the performance of ML models and their impact on the underlying infrastructure.

5 - Using Prometheus & Grafana

In MLOps, setting up an effective monitoring system is important for maintaining the performance and reliability of machine learning models, and for getting alerted when something goes wrong. Prometheus and Grafana offer a powerful combination for monitoring ML pipelines.

This section guides you through the process of using these tools, including the necessary Python and Docker configurations.

5.1 Setting Up Prometheus for monitoring

Instrumenting Python Code:

To monitor your ML pipeline, you first need to instrument your Python application with Prometheus. This involves exposing metrics from your application that Prometheus can scrape.

Python Code Example:

```python
from prometheus_client import start_http_server, Summary
import random
import time

# Create a metric to track time spent and requests made.
REQUEST_TIME = Summary('request_processing_seconds', 'Time spent processing request')


# Decorate function with metric.
@REQUEST_TIME.time()
def process_request(t):
    """A dummy function that takes some time."""
    time.sleep(t)


if __name__ == '__main__':
    # Start up the server to expose the metrics.
    start_http_server(8000)
    # Generate some requests.
    while True:
        process_request(random.random())
```

This script creates a simple metric to monitor the time taken by a function. Prometheus can scrape this data from the server running on port 8000.
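It can help to see what Prometheus actually reads when it scrapes that port. The endpoint returns plain text, one sample per line. The sketch below parses a small hand-written sample in that format; the values are illustrative (not real output), and the parser is deliberately simplified, assuming no timestamps on the lines.

```python
# Illustrative scrape payload for the Summary metric (values are made up).
SAMPLE_SCRAPE = """\
# HELP request_processing_seconds Time spent processing request
# TYPE request_processing_seconds summary
request_processing_seconds_count 4.0
request_processing_seconds_sum 2.0
"""


def parse_samples(text):
    """Parse 'name value' lines from a scrape payload, skipping comments and blank lines."""
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        name, value = line.rsplit(" ", 1)
        samples[name] = float(value)
    return samples


samples = parse_samples(SAMPLE_SCRAPE)

# A Summary exposes a count and a sum, so the mean duration is sum / count.
mean_seconds = (
    samples["request_processing_seconds_sum"]
    / samples["request_processing_seconds_count"]
)
```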

Configuring Prometheus:

Set up a Prometheus instance, either as a standalone server or in a container.

Prometheus Configuration:

Create a prometheus.yml file to define the scrape configurations.

Example:

```yaml
global:
  scrape_interval: 15s

scrape_configs:
  - job_name: 'python'
    static_configs:
      - targets: ['<PYTHON_SERVER_IP>:8000']
```

Replace <PYTHON_SERVER_IP> with the IP address or hostname of the server where your Python application is running.

5.2 Setting Up Grafana for Alerts

Grafana Configuration:

Deploy Grafana, which can also be run in a Docker container.

Once Grafana is up and running, access its web interface, usually at http://<GRAFANA_HOST>:3000.

Adding Prometheus as a Data Source:

In Grafana's dashboard, add Prometheus as a data source, pointing to your Prometheus server URL.
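If you'd rather script this step than click through the UI, Grafana also exposes an HTTP API for data sources. The sketch below only builds the request. The URLs, the data source name, and the `<API_TOKEN>` placeholder are assumptions you would replace with your own values, and the exact authentication setup depends on your Grafana configuration.

```python
import json
from urllib.request import Request


def build_datasource_request(grafana_url, prometheus_url, api_token):
    """Build a POST request that registers Prometheus as a Grafana data source."""
    payload = {
        "name": "Prometheus",       # display name in Grafana (arbitrary)
        "type": "prometheus",
        "url": prometheus_url,      # address Grafana uses to reach Prometheus
        "access": "proxy",          # Grafana's backend performs the queries
        "isDefault": True,
    }
    return Request(
        f"{grafana_url.rstrip('/')}/api/datasources",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_token}",
        },
        method="POST",
    )


# Assumed local addresses; "<API_TOKEN>" is a placeholder, not a real token.
request = build_datasource_request(
    "http://localhost:3000", "http://localhost:9090", "<API_TOKEN>"
)
# To actually send it: urllib.request.urlopen(request)
```

Note that when both tools run in separate Docker containers, `localhost` inside the Grafana container won't reach Prometheus, so the Prometheus URL needs to be an address that is reachable from Grafana.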

Creating Dashboards:

Create dashboards in Grafana to visualize the metrics being collected by Prometheus.

You can create panels for different metrics, such as request times, error rates, or any custom metric defined in your Python application.

Setting Up Alerts:

Grafana allows you to set up alert rules on the metrics.

For example, you can create an alert for high request latency or error rates, which can notify you via email, Slack, or other notification channels.
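Conceptually, an alert rule is a threshold plus a waiting period, so that a single spike doesn't page anyone. The sketch below mimics that logic in plain Python. It's an illustration of the idea, not how Grafana implements it, and the threshold, sample values, and function name are made up.

```python
def should_fire(values, threshold, min_consecutive):
    """Fire only if the last `min_consecutive` samples all exceed `threshold`."""
    if len(values) < min_consecutive:
        return False
    return all(v > threshold for v in values[-min_consecutive:])


# Illustrative request latencies in seconds, one sample per evaluation interval.
latency_samples = [0.2, 0.9, 0.3, 0.8, 0.9, 1.1]

# A single spike (0.9 followed by a recovery) does not fire the alert...
fired_early = should_fire(latency_samples[:3], threshold=0.5, min_consecutive=3)
# ...but three consecutive slow samples do.
fired_now = should_fire(latency_samples, threshold=0.5, min_consecutive=3)
```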

5.3 Docker Configuration Changes

Dockerizing Prometheus:

Create a Dockerfile for Prometheus or use an official Prometheus image.

Mount the prometheus.yml file into the container to provide the configuration.

Docker Run Command:

docker run -d -p 9090:9090 -v /path/to/prometheus.yml:/etc/prometheus/prometheus.yml prom/prometheus

Dockerizing Grafana:

Use the official Grafana Docker image.

Docker Run Command:

docker run -d -p 3000:3000 grafana/grafana
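Once both containers are running, a quick sanity check is to hit their health endpoints: `/-/healthy` for Prometheus and `/api/health` for Grafana. The sketch below assumes the default ports from the commands above and that both services are reachable on `localhost`; the helper names are made up.

```python
from urllib.error import URLError
from urllib.request import urlopen

# Health endpoints and default ports for each service.
HEALTH_PATHS = {"prometheus": "/-/healthy", "grafana": "/api/health"}
DEFAULT_PORTS = {"prometheus": 9090, "grafana": 3000}


def health_url(service, host="localhost", port=None):
    """Build the health-check URL for 'prometheus' or 'grafana'."""
    resolved_port = port or DEFAULT_PORTS[service]
    return f"http://{host}:{resolved_port}{HEALTH_PATHS[service]}"


def is_healthy(service, host="localhost", timeout=3):
    """Return True if the service answers its health endpoint with HTTP 200."""
    try:
        with urlopen(health_url(service, host), timeout=timeout) as response:
            return response.status == 200
    except (URLError, OSError):
        return False


# Usage (requires the containers to be running):
#   is_healthy("prometheus")
#   is_healthy("grafana")
prometheus_check = health_url("prometheus")
grafana_check = health_url("grafana")
```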