MLOps 6: Challenges in Machine Learning Deployment

ML life cycle, Types of model deployment, Challenges in deploying models, MLOps

A machine learning engineer who understands the entire machine learning lifecycle and can operate it end to end is worth their weight in gold. So, let’s talk about deploying your machine learning models.

Table of Contents

ML life cycle

Types of model deployment

Challenges in deploying models

MLOps

1 - ML Life cycle

The journey of deploying a machine learning model from conception to production requires careful planning, execution, and continuous monitoring. This lifecycle is not linear but cyclical, ensuring that models are iteratively improved. Let's delve deeper into each phase.

1.1 Business Impact

Purpose: To align machine learning projects with business goals. This ensures that models have a meaningful impact on organizational objectives.

Problem Articulation: Engage with stakeholders to understand the core business problem. Is it about improving customer retention or optimizing the supply chain? Is it about detecting fraud, or something else?

Feasibility and ROI: Assess if ML is the right solution. Calculate potential return on investment considering costs like data acquisition, infrastructure, and maintenance.

Metrics and KPIs: Establish clear evaluation metrics, whether accuracy, precision, recall, or custom metrics tailored to business goals.

1.2 Data Collection

Purpose: The foundation of any ML project. The breadth and quality of your data often predetermine the success of the model.

Data Needs Assessment: Understand the kind of data required. For a recommendation system, you'd need user behavior data, product data, historical transaction data, etc.

Data Sources: Identify where to get data. This could be internal databases or third-party data providers. It can also be public datasets, or even real-time data streams from IoT devices.

Volume and Variety: Recognize the size of the data. Are we looking at gigabytes or petabytes? Understand the variety: structured data like databases, unstructured data like images or texts, time-series data, etc.

Data Privacy and Compliance: Especially critical in sectors like finance and healthcare. Understand regulations such as GDPR or HIPAA that might affect data collection and processing.

1.3 Data Preparation

Purpose: Refine the raw collected data into a structured format suitable for feeding into ML algorithms.

Cleaning: Address missing values, outliers, and inconsistencies in the dataset.

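Normalization and Standardization: Convert numeric features to a common scale without distorting differences in the ranges of values. Normalization typically maps values into a fixed range such as [0, 1], while standardization rescales them to zero mean and unit variance.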

Data Splitting: Segment data into training, validation, and test sets, ensuring that each set is representative of the overall distribution.
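To make these three steps concrete, here is a minimal, dependency-free Python sketch. In practice you would more likely reach for scikit-learn's `train_test_split`, `SimpleImputer`, and `StandardScaler`. The sketch assumes a single numeric column with `None` marking missing values, and the `ages` data is made up. One design choice is worth copying: the split happens first, and the median, mean, and standard deviation are learned from the training split only, then reused on held-out data. That way no information from validation or test rows leaks into training.

```python
import random
import statistics

def split_rows(rows, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle rows, then cut them into train/validation/test partitions."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)  # fixed seed keeps the split reproducible
    n_test = int(len(rows) * test_frac)
    n_val = int(len(rows) * val_frac)
    test = rows[:n_test]
    val = rows[n_test:n_test + n_val]
    train = rows[n_test + n_val:]
    return train, val, test

def fit_median(column):
    """Learn the fill value for missing entries (None) from the observed values."""
    return statistics.median([v for v in column if v is not None])

def impute(column, fill_value):
    """Replace missing entries (None) with a previously learned fill value."""
    return [fill_value if v is None else v for v in column]

def fit_standardizer(column):
    """Learn mean and standard deviation; fall back to 1.0 for constant columns."""
    mean = statistics.fmean(column)
    std = statistics.pstdev(column) or 1.0
    return mean, std

def standardize(column, mean, std):
    """Rescale to zero mean and unit variance using previously fitted statistics."""
    return [(v - mean) / std for v in column]

# Usage: split first, then fit every statistic on the training split only.
ages = [34, None, 29, 41, None, 38, 25, 52, 47, 31]  # hypothetical data
train, val, test = split_rows(ages, val_frac=0.2, test_frac=0.2)
fill = fit_median(train)
mean, std = fit_standardizer(impute(train, fill))
train_z = standardize(impute(train, fill), mean, std)
test_z = standardize(impute(test, fill), mean, std)
```

A plain random shuffle only approximates "representative". For classification with rare classes, a stratified split that preserves class proportions in each set is the safer choice.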

1.4 Feature Engineering

Purpose: Enhance the data with additional variables (features) that could help improve model performance.

Domain Knowledge: Engage experts to understand which additional features might be relevant. For a real estate price prediction model, factors like proximity to amenities, public transport, or crime rates could be influential.

Dimensionality Reduction: Apply techniques like PCA when the number of features grows large enough to raise the risk of overfitting.

Feature Selection: Utilize algorithms to select the most relevant features and discard redundant ones.
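As one simple illustration of feature selection, the sketch below applies two common filter rules. First it drops features that barely vary. Then it drops any feature that is highly correlated with one already kept, which is a redundant feature.

The thresholds (`min_variance`, `max_corr`) and the toy real-estate columns are assumptions for illustration. scikit-learn offers `VarianceThreshold` and model-based selectors for real workloads. Note that PCA is a different technique: it builds new combined features rather than choosing among existing ones.

```python
import statistics

def pearson(xs, ys):
    """Pearson correlation; returns 0.0 if either input is constant."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy) if sx and sy else 0.0

def select_features(features, min_variance=1e-8, max_corr=0.95):
    """features: dict of feature name -> list of numeric values (equal lengths)."""
    # 1. Drop (near-)constant features: they carry no signal.
    kept = {name: col for name, col in features.items()
            if statistics.pvariance(col) > min_variance}
    # 2. Greedily keep a feature only if it is not highly correlated
    #    with any feature already selected, i.e. it is not redundant.
    selected = []
    for name, col in kept.items():
        if all(abs(pearson(col, kept[other])) < max_corr for other in selected):
            selected.append(name)
    return selected

# Toy example: area is recorded twice in different units, and one flag never changes.
houses = {
    "area_m2": [50, 80, 120, 200],
    "area_ft2": [538.2, 861.12, 1291.68, 2152.8],
    "rooms": [1, 3, 2, 4],
    "is_listed": [1, 1, 1, 1],
}
print(select_features(houses))  # ['area_m2', 'rooms']
```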

1.5 Build and Train the model

Purpose: Apply algorithms to learn patterns from the data.

Model Selection: Many of you will be using XGBoost, because gradient-boosted trees are a strong default for tabular data, though no single algorithm is best for every problem.

Hyperparameter Tuning: Optimize hyperparameters, the settings fixed before training such as tree depth or learning rate, for better performance.

Training: Use the training dataset to allow the model to learn, adjusting its internal parameters to minimize error.

Keep in mind that much of this step can now be automated: Google offers AutoML tools, and you can also use auto-sklearn.
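Under the hood, hyperparameter tuning is a search loop: train with one setting, score on the validation set, and keep the best. The sketch below shows plain grid search, the simplest version of that loop. AutoML tools and auto-sklearn use more sophisticated search strategies.

The toy 1-D k-nearest-neighbours model and its data are hypothetical, chosen only so the example runs without dependencies. With XGBoost you would search over parameters such as `max_depth` and `learning_rate` instead.

```python
import itertools

def grid_search(train_fn, score_fn, train_data, val_data, grid):
    """Try every combination in `grid`; return the best params and their score.

    train_fn(train_data, **params) -> model
    score_fn(model, val_data) -> float, where higher is better
    """
    best_params, best_score = None, float("-inf")
    names = list(grid)
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        model = train_fn(train_data, **params)
        score = score_fn(model, val_data)  # score on validation data, never on test data
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

# Hypothetical toy model: 1-D k-nearest neighbours on (x, label) pairs.
def train_knn(data, k):
    return {"points": data, "k": k}  # kNN "training" just stores the data

def predict_knn(model, x):
    nearest = sorted(model["points"], key=lambda p: abs(p[0] - x))[: model["k"]]
    return int(sum(label for _, label in nearest) * 2 > len(nearest))  # majority vote

def accuracy(model, data):
    return sum(predict_knn(model, x) == y for x, y in data) / len(data)

train = [(0, 0), (1, 0), (2, 0), (3, 1), (4, 1), (5, 1)]
val = [(0.5, 0), (4.5, 1)]
best_params, best_score = grid_search(train_knn, accuracy, train, val, {"k": [1, 3, 5]})
```

Grid search grows exponentially with the number of hyperparameters. That is why random search or Bayesian optimization is usually preferred once the grid gets large.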

1.6 Test & Evaluate

Purpose: Assess how the model performs on previously unseen data.

Validation: Use the validation set to tune hyperparameters and detect overfitting, keeping the test set untouched until the final evaluation.

Evaluation Metrics: Depending on the business problem, metrics like F1-score, RMSE, or AUC-ROC might be more appropriate than just accuracy.

Business KPI Alignment: Ensure that the model's technical performance translates to business value.
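To see why accuracy alone can mislead, the sketch below computes precision, recall, and F1 from raw labels, plus RMSE for regression. It is a minimal, dependency-free version for binary 0/1 labels; scikit-learn's `sklearn.metrics` module provides production-grade equivalents. In the hypothetical example, a "model" that never flags fraud scores 80% accuracy on an imbalanced toy set while catching none of the fraud cases.

```python
import math

def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    # Convention used here: report 0.0 when a ratio is undefined (zero denominator).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

def rmse(y_true, y_pred):
    """Root mean squared error for regression targets."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

labels = [0] * 8 + [1, 1]   # hypothetical: 2 fraud cases out of 10
always_negative = [0] * 10  # a "model" that never flags fraud
print(classification_metrics(labels, always_negative))
# {'accuracy': 0.8, 'precision': 0.0, 'recall': 0.0, 'f1': 0.0}
```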

1.7 Model Deployment

Purpose: Integrate the model into business processes to derive real-world value.

Deployment Strategies: Whether deploying on-premises, on cloud platforms like AWS or Azure, or on edge devices, the strategy should align with latency, scalability, and cost considerations.

Integration: Seamlessly integrate the model with existing IT infrastructure. This could involve setting up APIs, databases, or other middleware components.

Monitoring Setup: Establish tools to monitor model health and performance in real-time.

1.8 Monitoring

Purpose: Ensure the model remains relevant and efficient over time.

Performance Monitoring: As new data flows in, track how the model is performing. Is there a decline in accuracy or other metrics?

Model Drift: Stay alert for shifts in data distributions or in the environment that might render the model outdated.

Iterative Refinement: Periodically retrain or fine-tune the model, incorporating new data and feedback.
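Performance monitoring can start very simply. The sketch below tracks accuracy over a sliding window of recent predictions and raises a flag when it drops below a threshold.

It assumes ground-truth labels eventually arrive for production predictions, which is often delayed (for example, a chargeback that confirms fraud weeks later). The window size and threshold are illustrative values you would tune per use case.

```python
from collections import deque

class RollingAccuracyMonitor:
    """Accuracy over the most recent `window` labeled predictions."""

    def __init__(self, window=500, alert_below=0.9):
        self.outcomes = deque(maxlen=window)  # oldest outcomes fall off automatically
        self.alert_below = alert_below

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    @property
    def accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def should_alert(self):
        # Wait for a full window so a few early errors don't trigger an alert.
        return len(self.outcomes) == self.outcomes.maxlen and self.accuracy < self.alert_below

# Usage: record each prediction once its true label arrives.
monitor = RollingAccuracyMonitor(window=4, alert_below=0.75)
for prediction, actual in [(1, 1), (0, 0), (1, 0), (0, 1)]:
    monitor.record(prediction, actual)
if monitor.should_alert():
    print(f"Accuracy dropped to {monitor.accuracy:.2f}; investigate or retrain")
```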

2 - Types of model deployment

Deploying a machine learning model into a real-world environment is a critical phase in the model lifecycle. The approach taken during this phase can greatly influence the model's performance, efficiency, and utility. With an increasing variety of deployment options at the disposal of machine learning practitioners, understanding the intricacies of each method becomes imperative.

2.1 Batch Predictions

Batch predictions refer to the process where a model scores an entire dataset at once, either at predetermined intervals or when triggered. Consider situations where real-time feedback is not mandatory, like monthly sales forecasts or daily inventory checks. The emphasis here is on processing data in aggregate rather than responding to individual requests.

Pros & Cons:

Efficiency in Scale: Large volumes of data can be processed simultaneously.

Resource Management: As predictions are not real-time, computational resources can be optimally scheduled.

Latency: New data added after the last batch run won't be immediately processed.
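A batch job is often just a scheduled script. The sketch below reads a CSV in fixed-size chunks, scores each chunk, and writes predictions alongside the original columns. Chunking keeps memory use bounded regardless of file size.

The model here is a hypothetical callable that takes a list of rows and returns one prediction per row. It stands in for a real model loaded from disk. A scheduler such as cron or a workflow orchestrator would trigger the job nightly or monthly.

```python
import csv
import io
from itertools import islice

def run_batch_job(input_file, output_file, model, chunk_size=1000):
    """Score a CSV chunk by chunk; returns the number of rows scored."""
    reader = csv.DictReader(input_file)
    writer = None
    total = 0
    while True:
        chunk = list(islice(reader, chunk_size))  # at most chunk_size rows in memory
        if not chunk:
            break
        predictions = model(chunk)
        if len(predictions) != len(chunk):
            raise ValueError("model must return exactly one prediction per row")
        if writer is None:  # write the header once, reusing the input columns
            writer = csv.DictWriter(output_file, fieldnames=[*chunk[0], "prediction"])
            writer.writeheader()
        for row, pred in zip(chunk, predictions):
            writer.writerow({**row, "prediction": pred})
        total += len(chunk)
    return total

# Usage with in-memory files and a hypothetical rule-based "model".
def flag_large(rows):
    return [int(float(r["amount"]) > 100) for r in rows]

src = io.StringIO("id,amount\n1,50\n2,150\n3,300\n")
dst = io.StringIO()
run_batch_job(src, dst, flag_large, chunk_size=2)
```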

2.2 REST API

By wrapping a model within a web service, it becomes accessible via the internet, facilitating communication with web applications, mobile apps, or other servers. This is ideal for situations where diverse platforms or applications require model access, like customer recommendations across web and mobile platforms.

Pros & Cons:

Flexibility: Easily integrates with various platforms and languages.

Scalability Concerns: Popular models might get overwhelmed by numerous concurrent requests.

Latency: Typically low, but network issues can introduce delays.

For scalability, consider containerizing your service with Docker and orchestrating the containers with Kubernetes. Security should be paramount: always include an authentication layer.
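Here is a minimal sketch of wrapping a model in a web service. It uses only Python's standard-library `http.server`, so it runs without installs; a production service would more likely use a framework such as FastAPI or Flask behind a proper application server.

The request logic lives in a pure `handle` function, which keeps it easy to test, and it rejects requests that lack the right API key. The `/predict` path, the `X-API-Key` header, the hard-coded key, and the threshold-based `predict` are all placeholders for illustration.

```python
import hmac
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

API_KEY = "change-me"  # placeholder: load from a secret store in practice

def predict(amount):
    """Placeholder model: swap in a real model loaded once at startup."""
    return {"score": 0.8 if amount > 100 else 0.1}

def handle(path, api_key, body):
    """Pure request logic: (path, key header, raw body) -> (status, payload)."""
    if path != "/predict":
        return 404, {"error": "not found"}
    # Constant-time comparison avoids leaking the key through response timing.
    if not hmac.compare_digest((api_key or "").encode(), API_KEY.encode()):
        return 401, {"error": "unauthorized"}
    try:
        amount = float(json.loads(body)["amount"])
    except (ValueError, KeyError, TypeError):
        return 400, {"error": 'expected JSON like {"amount": 123.4}'}
    return 200, predict(amount)

class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle(self.path, self.headers.get("X-API-Key"),
                                 self.rfile.read(length))
        body = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

# To serve locally (blocks forever):
# ThreadingHTTPServer(("127.0.0.1", 8000), PredictHandler).serve_forever()
```

With the server running, a client would POST `{"amount": 150}` to `/predict` with the key in the `X-API-Key` header. The same `handle` function works unchanged if you later move the HTTP plumbing to a framework.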

2.3 Mobile Devices

What is it? Deploying models directly onto devices, eliminating the constant server pings. This approach is the cornerstone of edge AI.

When to use it? Crucial for applications where data privacy is paramount or constant server communication is not feasible—think face recognition on phones or anomaly detection in offline factory equipment.

Pros & Cons:

Low Latency: Instant on-device predictions.

Data Privacy: Raw data doesn't have to leave the device, which reduces privacy and security exposure.

Resource Constraints: Devices often lack the computational prowess of dedicated servers.

Considerations: The model's size and complexity should align with the device's capabilities. Regular updates become essential to keep the device's AI components relevant and efficient.
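One common way to shrink a model for a device is quantization: storing weights as 8-bit integers instead of 32-bit floats. That cuts weight storage roughly 4x, at some cost in precision.

The sketch below shows symmetric linear int8 quantization of a list of weights in plain Python, to make the arithmetic visible. Real toolchains such as TensorFlow Lite offer post-training quantization that handles whole models, so treat this as an illustration of the idea rather than a replacement. The weight values are made up.

```python
def quantize_int8(weights):
    """Symmetric linear quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid dividing by zero
    scale = max_abs / 127
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate floats; rounding error per weight is at most scale / 2."""
    return [v * scale for v in q]

weights = [0.5, -1.27, 0.0, 0.333, 1.0]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each quantized value fits in one byte, versus four bytes for a float32 weight. Only the single `scale` float per tensor needs to be stored alongside them.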

2.4 Real Time

As data streams in, it's instantly processed, with the model serving immediate predictions. This is perfect for scenarios demanding immediate decisions, like fraud detection during credit card transactions or real-time ad bidding.

Pros & Cons:

Immediate Feedback: Provides timely insights or actions.

Infrastructure Intensity: Requires robust backend infrastructure to manage and process data streams effectively.

Sensitivity to Failures: Any system hiccup can disrupt the immediacy.
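A real-time consumer has to keep going even when an individual prediction fails. The sketch below scores events one at a time from any iterable; in production this might be a Kafka or Kinesis consumer, which is an assumption here. It falls back to a default score when the model raises, and it flags calls that exceed a latency budget.

The sketch can only flag slow calls after the fact. Actually cutting off a slow call would need a timeout mechanism, such as running the model in a separate worker. The `toy_fraud_model`, the fallback score, and the 50 ms budget are illustrative.

```python
import time

def score_stream(events, model, fallback_score=0.0, latency_budget_s=0.05):
    """Score events as they arrive, yielding one result per event."""
    for event in events:
        start = time.perf_counter()
        try:
            score, source = model(event), "model"
        except Exception:
            # Degrade to a default score rather than crashing the whole consumer.
            score, source = fallback_score, "fallback"
        elapsed = time.perf_counter() - start
        # Slow calls are only flagged after the fact, not interrupted.
        yield {"id": event["id"], "score": score, "source": source,
               "over_budget": elapsed > latency_budget_s}

# Usage with a hypothetical fraud model that fails on malformed input.
def toy_fraud_model(event):
    if event["amount"] < 0:
        raise ValueError("negative amount")
    return min(1.0, event["amount"] / 1000)

for result in score_stream([{"id": 1, "amount": 500}, {"id": 2, "amount": -5}],
                           toy_fraud_model):
    print(result)
```

What counts as a sensible fallback is domain-specific. For fraud detection, routing the transaction to manual review may be safer than a fixed low score.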

3 - Challenges in deploying models

Transitioning a machine learning model from a research or development phase to a production environment is a monumental step. The model, which might have performed admirably in a controlled setting, is now exposed to the wild, unpredictable world of real-time data and varying user interactions. Here, we explore the intricate challenges that arise when deploying models in production and provide actionable insights to navigate these murky waters.

3.1 Data related challenges

Drifting Distributions: Unlike the static datasets used for training, real-world data might evolve over time. Economic, societal, or environmental changes can alter data distributions, affecting model performance.

Note: Use drift detection mechanisms to alert teams to significant data changes. Retraining the model on fresh data is an effective countermeasure. You can also leverage techniques like online learning, where the model is updated incrementally as new data arrives.
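One widely used drift check is the Population Stability Index (PSI). It compares how a feature's values fall into bins at training time versus in production: PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the expected (training) and actual (production) fractions in bin i.

The sketch below uses equal-width bins over the training range and a small floor (`eps`) to avoid log(0). Both are simplifying assumptions; quantile bins are also common. A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, but these cutoffs are conventions, not guarantees. The data is simulated.

```python
import math
import random

def psi(expected, actual, bins=10, eps=1e-4):
    """Population Stability Index between a training sample and a production sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # equal-width bins over the training range

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clip out-of-range values into edge bins
        # Floor at eps so empty bins don't produce log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Usage: simulate a feature whose mean shifts by one standard deviation in production.
rng = random.Random(0)
training = [rng.gauss(0, 1) for _ in range(5000)]
production = [rng.gauss(1, 1) for _ in range(5000)]
if psi(training, production) > 0.25:
    print("Significant drift detected: consider retraining")
```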

Mismatched Data Quality: Noise, missing values, or even new categories that were absent during training can appear in production data.

Note: Data validation pipelines can be established to check incoming data for anomalies or unexpected values, ensuring the model is only exposed to data of acceptable quality.
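A data validation step can be as simple as checking every incoming record against a schema derived from the training data. The sketch below checks presence, type, numeric range, and allowed categories. It returns a list of problems so that invalid records can be quarantined instead of sent to the model.

The schema fields and limits are hypothetical. Dedicated tools such as Great Expectations or TensorFlow Data Validation cover the same ground at scale.

```python
# Hypothetical schema, e.g. derived from the training data.
SCHEMA = {
    "age": {"type": (int, float), "min": 0, "max": 120, "required": True},
    "country": {"type": str, "allowed": {"US", "DE", "FR"}, "required": True},
    "income": {"type": (int, float), "min": 0, "required": False},
}

def validate(record, schema=SCHEMA):
    """Return a list of human-readable problems; an empty list means the record is valid."""
    problems = []
    for field, rules in schema.items():
        value = record.get(field)
        if value is None:
            if rules.get("required"):
                problems.append(f"{field}: missing")
            continue
        # bool is a subclass of int in Python, so reject it explicitly.
        if not isinstance(value, rules["type"]) or isinstance(value, bool):
            problems.append(f"{field}: unexpected type {type(value).__name__}")
            continue
        if "min" in rules and value < rules["min"]:
            problems.append(f"{field}: {value} < {rules['min']}")
        if "max" in rules and value > rules["max"]:
            problems.append(f"{field}: {value} > {rules['max']}")
        if "allowed" in rules and value not in rules["allowed"]:
            problems.append(f"{field}: unseen category {value!r}")
    return problems

# Usage: quarantine bad records instead of passing them to the model.
incoming = [{"age": 34, "country": "DE"}, {"age": 150, "country": "BR"}]
valid, quarantined = [], []
for record in incoming:
    problems = validate(record)
    if problems:
        quarantined.append((record, problems))
    else:
        valid.append(record)
```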

3.2 Other challenges

Portability: Models developed in one environment may not seamlessly operate in another due to library dependencies, hardware constraints, or even differing software versions.

Containerization tools like Docker encapsulate models along with their dependencies, ensuring consistent behavior across different deployment environments.

Scalability: A model might be initially deployed to cater to a few thousand requests but can later face millions as the user base grows. Adopt a microservices architecture, allowing models to run as independent services that can be easily scaled. Cloud platforms like AWS and Google Cloud offer auto-scaling features that adjust resources based on traffic demand.

Security: Models, especially in sensitive domains like finance or healthcare, become prime targets for malicious attacks. Encrypt data in transit and at rest. Employ API gateways with throttling to guard against Denial of Service (DoS) attacks. Regularly review and update security protocols.
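API gateways typically implement throttling with a token-bucket scheme. Each client gets a bucket that refills at a steady rate, and each request spends one token, so short bursts are allowed but sustained floods are rejected.

The sketch below is a minimal single-process version; the per-key limits (5 requests per second, bursts of 10) are illustrative. It is not thread-safe, and a multi-instance service would keep the counters in shared storage instead.

```python
import time

class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock  # injectable so the logic can be tested without sleeping
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # caller should answer with HTTP 429 Too Many Requests

# Usage: one bucket per API key.
buckets = {}

def is_allowed(api_key):
    if api_key not in buckets:
        buckets[api_key] = TokenBucket(rate=5, capacity=10)
    return buckets[api_key].allow()
```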

Robustness: Real-world scenarios can introduce unexpected inputs leading to model failures or, worse, inaccurate predictions. Implement comprehensive logging and monitoring to detect and address anomalies swiftly. Unit tests, integration tests, and load tests should be routinely performed, simulating real-world conditions.

4 - MLOps

4.1 Decoding MLOps

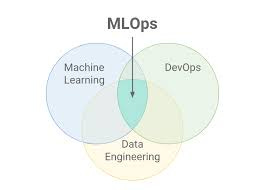

MLOps = Machine Learning + DevOps + Data Engineering

MLOps is more than a buzzword; it's a necessary paradigm for efficient machine learning workflows. It integrates principles from:

Machine Learning: Here, we're not just developing algorithms. We're ensuring that these algorithms can perform optimally in varied environments. Practical challenges like model generalization, data drift, and overfitting come into play, and MLOps provides strategies to tackle these.

DevOps: The software development lifecycle has evolved with DevOps, emphasizing continuous integration, continuous delivery, and continuous deployment. By borrowing from this field, MLOps ensures our models are production-ready, always updated, and seamlessly integrated into applications.

Data Engineering: In the age of Big Data, handling vast and diverse datasets becomes crucial. Efficient storage, preprocessing, ETL processes, and ensuring data quality fall under this umbrella. With MLOps, we aim to give our models consistent, high-quality data to learn from.

4.2 Continuous Training, Integration, and Deployment

In a changing world, models that aren't updated can become obsolete quickly. MLOps champions the cause of Continuous Training by:

Monitoring data for distribution changes.

Periodically retraining the model with new data.

Using automated pipelines to integrate these newly trained models with minimal manual intervention.

Continuous Integration (CI) ensures any new changes, like model tweaks or feature additions, are tested and validated, preventing regressions. Automated testing of models is vital to ensure that new data or code changes do not degrade their performance.
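One way to automate the "does not degrade performance" check is a gate in the CI pipeline. It compares the candidate model's metrics with those of the model currently in production and fails the build on a meaningful regression.

The sketch below assumes both models were evaluated on the same held-out test set and that their metrics are available as dictionaries. The metric names and the absolute tolerance of 0.01 are illustrative choices.

```python
# Hypothetical metric set: True means higher is better.
HIGHER_IS_BETTER = {"auc": True, "f1": True, "rmse": False}

def regression_check(candidate, baseline, tolerance=0.01):
    """List metrics where the candidate is worse than the baseline by more than `tolerance`."""
    failures = []
    for metric, higher_better in HIGHER_IS_BETTER.items():
        if metric not in baseline:
            continue  # nothing to compare against
        if metric not in candidate:
            failures.append(f"{metric}: missing from candidate")
            continue
        change = candidate[metric] - baseline[metric]
        improvement = change if higher_better else -change
        if improvement < -tolerance:
            failures.append(f"{metric}: {baseline[metric]:.4f} -> {candidate[metric]:.4f}")
    return failures

# Usage: block the deployment if any metric regressed.
baseline = {"auc": 0.90, "rmse": 2.0}
candidate = {"auc": 0.85, "rmse": 1.9}
failures = regression_check(candidate, baseline)
if failures:
    print("Blocking deployment:", failures)
```

In a CI job, the script would exit with a non-zero status whenever `failures` is non-empty, which stops the pipeline before the deployment step runs.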

Continuous Deployment (CD) ensures validated changes are automatically reflected in the production system, allowing for quick iterations.

Tools like Jenkins, Travis CI, or GitHub Actions can help set up these CI/CD pipelines. They can run unit tests, validate models, and deploy them automatically.

4.3 Automation

Manually handling data preprocessing, model training, validation, and deployment isn't scalable. Automation in MLOps covers:

ML pipelines for data preprocessing and model training.

Automated hyperparameter tuning.

Automatic deployment of validated models.

Now that you know most of the terminology behind MLOps, we’ll delve into each of these components in more detail, one post at a time.