MLOps 8: Packaging Your ML Models Part 2

From notebooks to production, Modular programming, Typical structure in most organizations

Be sure to go over Section 3 several times. This is the file/folder structure that you will be working with on a daily basis.

Let’s get to it.

Table of Contents

From notebooks to production

Modular programming

Typical Structure in most organizations (complex & important)

1 - From notebooks to production

Jupyter Notebooks are powerful and popular for interactive data analysis. Yet, there's a stark difference between an exploratory environment and a production environment. Putting machine learning models into production is a key component of MLOps. The process of Python packaging and robust dependency management takes center stage.

But why can't we transfer our notebook logic directly into a production system? Here’s why…

1.1 Why we don’t use Jupyter notebooks in prod

Difficult to Debug:

Notebooks, by design, allow for non-linear execution. This means you can run cell 5 before cell 2, or run cell 3 ten times before moving forward. In a production environment, this non-linearity can introduce unexpected behaviors. This can make debugging a nightmare.

Moreover, error messages in notebooks can sometimes be less informative. This is especially true when wrapped inside notebook-specific constructs.
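To make the out-of-order problem concrete, here is a minimal sketch that mimics notebook cells as plain functions sharing one namespace. The cell names and the `state` dictionary are hypothetical stand-ins for a kernel's global variables; nothing here is a real notebook API.

```python
# Each "cell" reads and writes a shared namespace, like a notebook kernel.
state = {}

def cell_1_load():
    state["prices"] = [10.0, 20.0, 30.0]

def cell_2_scale():
    # Scales in place, so every extra run changes the data again.
    state["prices"] = [p / 10 for p in state["prices"]]

def cell_3_mean():
    return sum(state["prices"]) / len(state["prices"])

def run(cells):
    """Run the cells in the given order, starting from an empty namespace."""
    state.clear()
    result = None
    for cell in cells:
        result = cell()
    return result

in_order = run([cell_1_load, cell_2_scale, cell_3_mean])
scaled_twice = run([cell_1_load, cell_2_scale, cell_2_scale, cell_3_mean])
print(in_order, scaled_twice)  # same cells, different run history, different answers
```

Running `cell_2_scale` before `cell_1_load` fails with a `KeyError`, which is the scripted equivalent of running cell 5 before cell 2.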

Require Changes at Multiple Locations:

As projects grow, so does the complexity of notebooks. It's not uncommon to find notebooks that span hundreds of cells. A single change, such as modifying a data preprocessing step, might then require edits in many locations throughout the notebook.

DRY Principle Violation: Duplicate Code Snippets:

DRY stands for "Don't Repeat Yourself." It's a software development principle aiming to reduce redundancy. In notebooks, it's commonplace to copy and paste cells, leading to repeated code. This not only increases the notebook's size but also means that an error discovered in one snippet may be present in every place it was pasted.

No Modularity in Code:

Notebooks encourage a linear flow. This might lead to large chunks of code handling many functionalities. In software engineering, it's a best practice to modularize code. Break it down into smaller, reusable functions or classes. This modularity is often missing in notebooks. This makes the code harder to understand, test, and maintain.
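As a rough illustration of the DRY and modularity points, the sketch below pulls a cleaning step that might otherwise be copy-pasted across cells into one small function. The function name `clean_column` and the sample values are made up for this example.

```python
def clean_column(values, fill=0.0):
    """Replace missing entries (None) with `fill` and cast the rest to float."""
    return [fill if v is None else float(v) for v in values]

# Instead of pasting the same cleaning logic into two cells, both datasets
# go through the one function, so a bug fix lands everywhere at once.
train_ages = clean_column([34, None, 51])
test_ages = clean_column([None, 29])
print(train_ages, test_ages)
```

A function like this can also be imported and unit tested on its own, which is hard to do with logic that only lives inside a notebook cell.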


1.2 Development to prod

To ensure that machine learning models and their associated data pipelines are production-ready, a more structured approach than notebooks is necessary:

Python Packaging: Convert your code into reusable modules or packages. This allows for better structuring, testing, and versioning of your code. Tools like setuptools can help in packaging your Python code. Platforms like PyPI enable sharing and distribution.

Dependency Management: When moving to production, it's essential that all dependencies are correctly specified. This ensures that the model and its data processing steps run consistently across all environments. Tools like pip, virtualenv, and pipenv can be invaluable. Always maintain an updated requirements.txt file.

Refactor and Modularize: Transition away from the linear structure of notebooks. Break down the code into smaller functions, classes, or modules. This not only makes the code more readable but also more maintainable and testable.

Continuous Integration (CI): Incorporate CI tools that automatically test your code whenever changes are made. This ensures that the production code is always in a deployable state.
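To give the dependency management point something tangible, here is a small sketch that reads `name==version` pins, in the style of a requirements.txt file, and compares them with what is installed. It uses the standard library's `importlib.metadata`; the package names in the example are hypothetical, and the parser deliberately ignores everything except exact `==` pins.

```python
from importlib import metadata

def parse_pins(requirements_text):
    """Return {package: version} for lines of the form `name==version`."""
    pins = {}
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if "==" in line:
            name, version = line.split("==", 1)
            pins[name.strip().lower()] = version.strip()
    return pins

def find_mismatches(pins):
    """Compare pinned versions with what is installed in this environment."""
    mismatches = {}
    for name, wanted in pins.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None  # package is not installed at all
        if installed != wanted:
            mismatches[name] = (wanted, installed)
    return mismatches

example = """
# hypothetical pins, for illustration only
example-ml-lib==1.2.3
another-lib==0.4.0  # inline comment
"""
pins = parse_pins(example)
print(pins)
print(find_mismatches(pins))
```

A check along these lines could run as one step of a CI job, so that a drifting environment is caught before deployment rather than after.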

Now, let’s focus more on the modularity aspect.

2 - Modular programming

In MLOps, where the lifecycle of an ML model spans from data extraction to real-time predictions, the need for organized and maintainable code can't be overstated. Enter modular programming, a paradigm that serves as the backbone of scalable, efficient, and collaborative MLOps. But what is modular programming, and why is Python, a go-to language for many ML practitioners, hailed as a modular language?

2.1 What is modular programming?

Modular programming is a software design technique in which the functionality of a program is divided into independent, interchangeable modules. These modules are then integrated into a single system, which improves reusability, scalability, and maintainability.

Key benefits include:

Reusability: Functions or classes, once written, can be reused across different parts of the project or even in other projects.

Maintainability: With functionality compartmentalized, it becomes easier to manage, debug, and update individual components without affecting others.

Collaboration: Multiple developers can work on separate modules simultaneously, speeding up the development process.


2.2 What is a module?

In Python, a module is a single file containing Python definitions (functions, classes) and statements. It provides an avenue to logically organize your Python code. Grouping related code into a module makes the code easier to understand and use.

For instance, you might have a file data_processing.py with functions to preprocess data for your ML pipeline:

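    # data_processing.py

    def normalize(data):
        # Min-max scale to [0, 1]; expects an object with .min() and .max(),
        # such as a NumPy array or pandas Series.
        return (data - data.min()) / (data.max() - data.min())

    def tokenize(text):
        # Split a string on whitespace.
        return text.split()

You can then use these functions in another file by importing the module:

    from data_processing import normalize

2.3 What is a package?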

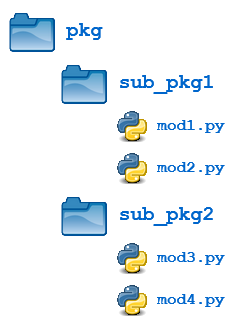

While a module is a single file, a package is a way of organizing related modules into a single directory hierarchy. Essentially, it's a directory that contains multiple module files and a special __init__.py file, which is often empty but tells Python to treat the directory as a package.

For example, if you have an ML project with several modules related to data processing, model training, and evaluation, you might structure it as:

    my_ml_package/
    |-- __init__.py
    |-- data_processing.py
    |-- model_training.py
    |-- evaluation.py

You can then import specific modules or functions from this package:

    from my_ml_package import data_processing

The picture at the top of this section shows the relationship between packages and modules.
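If you want to see the module/package relationship in action without touching your own project, the following sketch builds a throwaway copy of my_ml_package in a temporary directory and imports a module from it. The temporary location and the one-function module body are assumptions made for the demo.

```python
import importlib
import sys
import tempfile
from pathlib import Path

with tempfile.TemporaryDirectory() as tmp:
    pkg = Path(tmp) / "my_ml_package"
    pkg.mkdir()
    (pkg / "__init__.py").write_text("")  # empty marker file
    (pkg / "data_processing.py").write_text(
        "def tokenize(text):\n"
        "    return text.split()\n"
    )

    sys.path.insert(0, tmp)  # make the package's parent directory importable
    importlib.invalidate_caches()
    try:
        module = importlib.import_module("my_ml_package.data_processing")
        tokens = module.tokenize("packages group modules")
    finally:
        sys.path.remove(tmp)

print(module.__name__, tokens)
```

The dotted name `my_ml_package.data_processing` mirrors the folder layout: the directory is the package, and the file inside it is the module.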

3 - Typical Structure in most organizations

Now that we’ve gotten the definitions out of the way, let’s talk about the file/folder structure that exists in most companies (as of today).

3.1 Essential Files: Building blocks

__init__.py: Typically empty, this file enables Python to recognize a directory as a Python package, making relative imports possible.

MANIFEST.in: Ensures non-code files, like datasets or configuration templates, are packaged with the source distribution. It provides directives to include or exclude these additional files.

config.py: A centralized hub for configuration. It holds constant variables, directory paths, and initial settings, thus boosting maintainability.

requirements.txt: An essential for reproducibility, this file lists all the package dependencies and their precise versions.

setup.py: For those aiming to distribute the package, this file contains package metadata and dependencies, streamlining package distribution and installation.
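For a feel of what a config.py might hold, here is a minimal sketch. Every name and value in it (directory names, file names, feature names) is hypothetical; real projects will differ.

```python
# config.py (sketch; all names and values are illustrative assumptions)
from pathlib import Path

try:
    PACKAGE_ROOT = Path(__file__).resolve().parent
except NameError:  # e.g. pasted into an interactive session
    PACKAGE_ROOT = Path.cwd()

# Directory paths are derived from one root, so moving the package
# does not require editing every module.
DATA_DIR = PACKAGE_ROOT / "datasets"
TRAINED_MODEL_DIR = PACKAGE_ROOT / "trained_models"

TRAIN_FILE = "train.csv"
TEST_FILE = "test.csv"
MODEL_NAME = "model.pkl"

TARGET = "target"
FEATURES = ["feature_a", "feature_b"]
```

Other modules would then import these constants rather than hard-coding paths, which keeps a change such as renaming the model file in one place.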

3.2 Data Management: the heart

Modules and scripts dedicated to data sourcing, processing, and model I/O operations:

Data Collectors:

retrievers.py: Manages data retrieval from external sources, such as APIs, subsequently storing this data in a SQL server.

preprocessors.py: Responsible for data cleaning, normalization, or other transformations. The result? Data that’s ready for training or prediction.

data_management.py: Handles data and model I/O functions. Key for loading cleaned data and managing serialized ML models, often with joblib.
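Here is a rough sketch of the kind of helpers a data_management.py might expose. To keep it runnable with only the standard library, it uses `pickle` and `csv` as stand-ins; in a real project, `joblib.dump` and `joblib.load` would typically take the place of the pickle calls. The function names and the dictionary "model" are assumptions for illustration.

```python
import csv
import pickle
import tempfile
from pathlib import Path

def load_dataset(path):
    """Read a CSV file into a list of row dictionaries."""
    with open(path, newline="") as f:
        return list(csv.DictReader(f))

def save_model(model, path):
    """Serialize a fitted model object to disk (pickle stands in for joblib)."""
    with open(path, "wb") as f:
        pickle.dump(model, f)

def load_model(path):
    """Load a previously saved model object. Only load files you trust."""
    with open(path, "rb") as f:
        return pickle.load(f)

# Round trip with a stand-in "model" (a dict of learned parameters).
with tempfile.TemporaryDirectory() as tmp:
    csv_path = Path(tmp) / "train.csv"
    csv_path.write_text("feature_a,target\n1.0,2.0\n3.0,6.0\n")
    rows = load_dataset(csv_path)

    model_path = Path(tmp) / "model.pkl"
    save_model({"slope": 2.0}, model_path)
    restored = load_model(model_path)

print(rows, restored)
```

Keeping all reads and writes behind a few functions like these means the training and prediction scripts never need to know where or how files are stored.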

3.3 Model Lifecycle: Training & predictions

The lifeline of any ML package, ensuring models are trained, evaluated, and utilized for predictions:

train_pipeline.py: Initiates the model training. It handles everything from fetching training data, running it through the pipeline, to model serialization post satisfactory performance.

predict.py: Takes over once models are trained, orchestrating predictions using new data.

pipeline.py: A consistent data processing series, often crafted using sklearn's Pipeline. It ensures data transformations and model training processes remain consistent.
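To show why a shared pipeline keeps training and prediction consistent, here is a plain-Python stand-in. It is not sklearn's Pipeline class, only a simplified imitation of the same idea with hypothetical step classes, and it covers the transformation steps without a final model.

```python
class MeanImputer:
    """Fill missing values (None) with the mean seen during fit."""
    def fit(self, xs):
        known = [x for x in xs if x is not None]
        self.mean_ = sum(known) / len(known)
        return self

    def transform(self, xs):
        return [self.mean_ if x is None else x for x in xs]

class RangeScaler:
    """Scale values to [0, 1] using the range seen during fit."""
    def fit(self, xs):
        self.lo_, self.hi_ = min(xs), max(xs)
        return self

    def transform(self, xs):
        span = (self.hi_ - self.lo_) or 1.0  # avoid dividing by zero
        return [(x - self.lo_) / span for x in xs]

class SimplePipeline:
    """Apply the same ordered steps at training and prediction time."""
    def __init__(self, steps):
        self.steps = steps

    def fit_transform(self, xs):
        for step in self.steps:
            xs = step.fit(xs).transform(xs)
        return xs

    def transform(self, xs):
        for step in self.steps:
            xs = step.transform(xs)
        return xs

pipe = SimplePipeline([MeanImputer(), RangeScaler()])
train = pipe.fit_transform([10.0, None, 30.0])  # the train_pipeline.py side
new = pipe.transform([None, 20.0])              # the predict.py side
print(train, new)
```

Because the statistics are learned once during training and reused at prediction time, new data is transformed exactly the way the training data was.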

3.4 Testing: Ensuring robustness & stability

Quality assurance for the ML package, ensuring models and pipelines function as expected:

test_predict.py: Validates the prediction process. By pulling samples and running predictions, it ascertains the model and pipeline’s functionality.

pytest.ini: A configuration file for pytest. It can dictate paths or set default command line arguments.
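A test_predict.py could look roughly like the sketch below. The `make_prediction` function is a hypothetical stand-in defined inline so the example runs on its own; in a real package it would be imported from predict.py. pytest collects functions whose names start with `test_` and treats a failed `assert` as a failed test.

```python
# test_predict.py (sketch)

def make_prediction(rows):
    """Hypothetical stand-in for the package's real predict function."""
    return [2.0 * row["feature_a"] for row in rows]

def test_prediction_count_matches_input():
    rows = [{"feature_a": 1.0}, {"feature_a": 2.5}]
    assert len(make_prediction(rows)) == len(rows)

def test_predictions_are_floats():
    preds = make_prediction([{"feature_a": 1.0}])
    assert all(isinstance(p, float) for p in preds)
    assert preds[0] == 2.0
```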

To execute the tests, typically, the following command is used:

    pytest -v

There might be some slight deviations to this depending on the organization, but pretty much all of them follow this overall format.

With the basic structure out of the way, we’ll next go into detail on one of the popular tools that companies use today to manage and track the ML lifecycle and the files discussed above.
