MLOps Part 3: Apache Airflow

Data Pipelines, Workflow Orchestration Tools, Where Airflow Outshines Step Functions, When Not to Use Airflow, Airflow Main Components, Sample Code

Credit for this article goes to BowTiedCelt. You can see his substack which covers data engineering in more detail.

Note: All Machine Learning Engineers have used, or will use Apache Airflow at least once in their lifetime.

Let’s talk about why you NEED to be at least familiar with Apache Airflow. What do AirBnB, Adobe, Coinbase, Dropbox, GitLab, LinkedIn, Shopify, Stripe, and Tesla all have in common?

They use Apache Airflow. Airflow is being adopted at BIG tech companies because of its power, ease of use, and gentle learning curve. Not learning Airflow puts you behind others who want to be data engineers at tech companies.

Table of Contents

Data Pipelines

Workflow Orchestration Tools

Azkaban

Conductor

AWS Step Functions

Apache Airflow

Where Airflow Outshines Step Functions

When Not to Use Airflow

Airflow Main Components & Flow

Sample Code

YouTube Playlist

DAGs

UI

1 - Data Pipelines

In a world where the most profitable companies are data-driven, the importance of data and data pipelines grows along with the exponential growth of data. This makes sense because data is the raw resource that drives insights and profits. It also means that we must clean, wrangle, and prepare the data for consumption.

Data pipelines are this process.

Generally, data pipelines follow this rough blueprint:

Receive or fetch data

Clean, wrangle, massage the data.

Validate data

Export so desired persona or system can consume
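The four steps above can be sketched in plain Python. This is only an illustration: the function names, the sample rows, and the JSON export are assumptions made for the example, not part of any particular tool.

```python
import json


def fetch():
    # Step 1: receive or fetch raw data (hard-coded here; normally an API, file, or queue).
    return [
        {"user": " Alice ", "amount": "10.5"},
        {"user": "bob", "amount": "oops"},
        {"user": "Carol", "amount": "3"},
    ]


def clean(rows):
    # Step 2: normalize names, parse amounts, and drop rows that cannot be parsed.
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue
        cleaned.append({"user": row["user"].strip().lower(), "amount": amount})
    return cleaned


def validate(rows):
    # Step 3: fail loudly if a row breaks a basic expectation.
    for row in rows:
        if not row["user"] or row["amount"] < 0:
            raise ValueError(f"invalid row: {row}")
    return rows


def export(rows):
    # Step 4: serialize so a downstream person or system can consume the result.
    return json.dumps(rows)


def run_pipeline():
    # Each step consumes the output of the step before it.
    return export(validate(clean(fetch())))
```

Each function only needs the output of the previous one, which is exactly the kind of dependency chain an orchestrator keeps track of.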

Of course this is an oversimplification, but most pipelines follow this blueprint. Another way to look at data pipelines is through a graph data structure: the Directed Acyclic Graph (DAG), which is a fancy way to say a graph whose edges have a direction (the flow goes one way) and that contains no cycles. This is helpful because flow control in data pipelines can become complicated.

Here is a vid on DAGs:

The algorithm for executing a DAG pipeline is roughly as follows:

If a task is not complete, check its upstream dependencies

If the upstream tasks are complete, execute the task

Else keep looking for the most upstream uncompleted task.

This dependency model makes sense because data pipelines rely on an earlier step.

For example: you cannot clean data until you retrieve or receive the data.
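The dependency check described above can be sketched as a small recursive function. This is a toy illustration, not how Airflow itself is implemented; the `UPSTREAM` mapping and the task names are made up for the example, and the code assumes the graph really is acyclic (a cycle would recurse forever).

```python
def run_task(task, upstream, completed, order):
    """Run `task`, first running any upstream task that is not complete."""
    if task in completed:
        return
    # Walk up to the most upstream uncompleted task before doing anything else.
    for dependency in upstream.get(task, []):
        run_task(dependency, upstream, completed, order)
    # All upstream dependencies are complete, so the task can execute.
    order.append(task)
    completed.add(task)


def run_dag(upstream):
    """Return the order in which tasks execute for a task -> upstream-tasks mapping."""
    completed, order = set(), []
    for task in upstream:
        run_task(task, upstream, completed, order)
    return order


# Hypothetical pipeline: each task lists the tasks it depends on.
UPSTREAM = {
    "export": ["validate"],
    "validate": ["clean"],
    "clean": ["fetch"],
    "fetch": [],
}
```

Calling `run_dag(UPSTREAM)` starts at `export`, walks up to `fetch`, and then executes the tasks back down the chain. Python's standard library also ships `graphlib.TopologicalSorter` (3.9+), which solves the same ordering problem.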

2 - Workflow Orchestration Tools

There are a few tools in the workflow/pipeline space that should be considered alongside Airflow. Here are some of the pipeline scheduling tools:

2.1 Azkaban

Azkaban, out of LinkedIn, is used for Hadoop job scheduling. The jobs are defined in a Domain Specific Language (avoid). The project is written in Java. This project has been around for 7 years.

The 4 big issues with Azkaban from my perspective are:

Cannot trigger by external event

No native task waiting support

Native web auth method is xml password

Weak observability and monitoring capabilities

This makes sense, as Azkaban was designed for Hadoop job scheduling. It was not designed as a general-purpose data pipeline/orchestration tool.

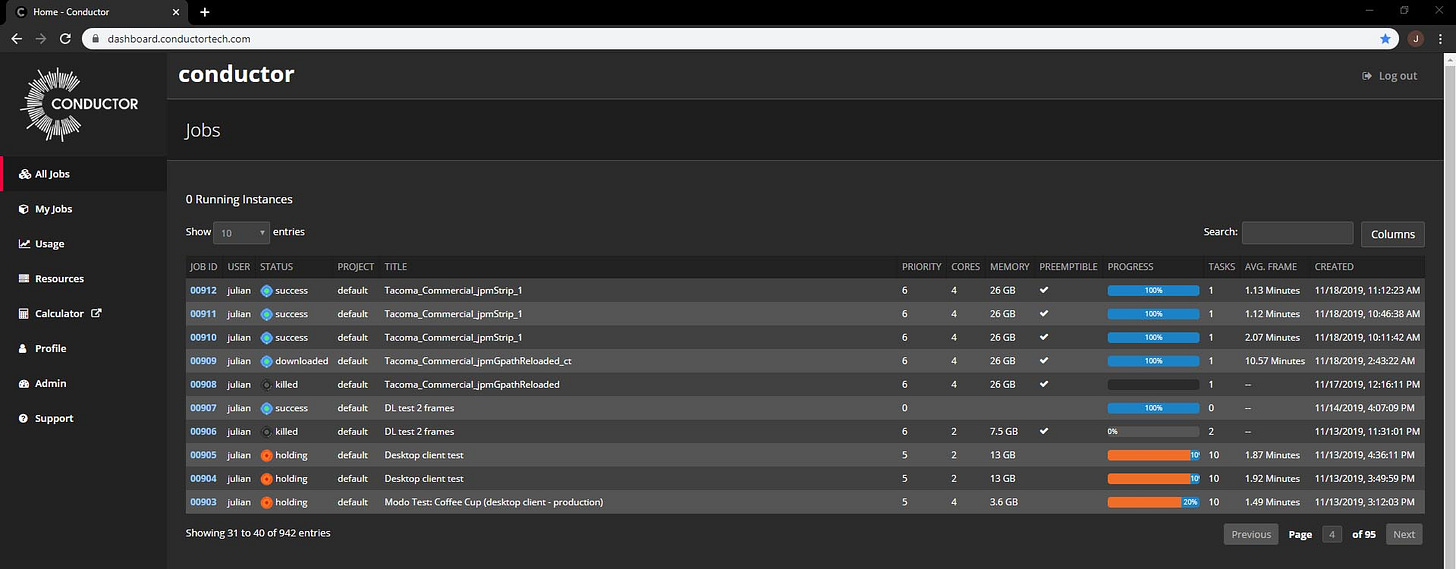

2.2 Conductor

A young project out of Netflix which is coming up on the 2 year mark. Conductor is for microservice orchestration, but some people use it for general workflow orchestration. Again written in Java. I could see Conductor maturing into a decent project for workflow orchestration.

But the 4 biggest problems I see right now are:

Lack of cron job support

No native web auth

Cannot trigger by external event

Weak observability and monitoring capabilities

Generally the UI on Conductor is weak. Would love to circle back to this project in a couple years to see where it is.

2.3 AWS Step Functions

If you are an AWS shop you may already have some Step Functions running, but there are some limitations with Step Functions that vex me.

Some of the main problems we have had with Step Functions are:

Limited observability and monitoring capabilities

No UI

Only can be used by AWS users (the future is multi-cloud)

Lack of retry capability

Step Functions can be a simple tool to leverage for AWS-native workflows. But the limitations around no UI and no retry capability are holding this service back.

2.4 Apache Airflow

Now for the focus of this post: Airflow, a project out of AirBnB which was open source from the very first commit. Task definitions and the project itself are written in Python, which is a huge plus. Airflow has only been out around 4 years but is maturing.

The community is very active and helpful. Being written in Python, Airflow is flexible and extensible, which makes many things possible. Enterprises love flexibility in this sense, meaning we can customize it with existing resources.

The UI is solid for a tool in this space. Airflow also has good monitoring and observability. Airflow is also offered as a managed service by Google Cloud in the form of Cloud Composer and by AWS in the form of Amazon Managed Workflows for Apache Airflow (MWAA).

3 - Where Airflow Outshines Step Functions

When the use case involves services outside of AWS-native services, Airflow has advantages over Step Functions. In multi-cloud workloads Airflow is preferred over Step Functions.

Secondly, if your workflow requires custom behavior or custom connectors, Airflow has an edge over Step Functions. Even a workload with a requirement as simple as a retry makes Airflow a stronger fit.
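To make the retry requirement concrete, here is a minimal sketch of retry behavior in plain Python. Airflow operators accept `retries` and `retry_delay` arguments; the helper below borrows those names but is not Airflow code. `run_with_retries` and `FlakyTask` are hypothetical, and `retry_delay` is a plain number of seconds here rather than a `timedelta`.

```python
import time


def run_with_retries(fn, retries=3, retry_delay=0.0, sleep=time.sleep):
    """Call fn(); if it raises, retry up to `retries` more times."""
    attempt = 0
    while True:
        try:
            return fn()
        except Exception:
            if attempt >= retries:
                # Out of retries: surface the last error to the caller.
                raise
            attempt += 1
            sleep(retry_delay)


class FlakyTask:
    """Hypothetical task that fails a fixed number of times, then succeeds."""

    def __init__(self, failures_before_success):
        self.remaining_failures = failures_before_success
        self.calls = 0

    def __call__(self):
        self.calls += 1
        if self.remaining_failures > 0:
            self.remaining_failures -= 1
            raise RuntimeError("transient failure")
        return "ok"
```

A task that fails twice and then succeeds completes on the third call when `retries=3`; with `retries=1` the same task would give up after two calls and re-raise the error.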

The last thing to consider is that Step Functions (Standard Workflows) bills by the number of state transitions. This can be problematic for bulk transaction processing, where state transitions will run up a bill.
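A quick back-of-the-envelope calculation shows how transition-based billing scales with volume. The price used in the usage note is a placeholder, not a quoted AWS rate, so check the current pricing page before relying on any number; `transition_bill` is a hypothetical helper.

```python
from decimal import Decimal


def transition_bill(records, transitions_per_record, price_per_transition):
    """Return (total state transitions, total cost) for a bulk workload.

    `price_per_transition` is passed as a string so Decimal keeps it exact.
    """
    total_transitions = records * transitions_per_record
    return total_transitions, Decimal(price_per_transition) * total_transitions
```

For example, one million records that each pass through six states produce six million transitions, so `transition_bill(1_000_000, 6, "0.00001")` works out to 60 units of currency at that made-up rate. Doubling the number of states per record doubles the bill.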

Airflow has some general success stories documented here

4 - When Not To Use Airflow

Airflow is not a streaming service. From the Airflow Docs:

Airflow was built for finite batch workflows. While the CLI and REST API do allow triggering workflows, Airflow was not built for infinitely-running event-based workflows. Airflow is not a streaming solution.

However, a streaming system such as Apache Kafka is often seen working together with Apache Airflow. Kafka can be used for ingestion and processing in real-time, event data is written to a storage location, and Airflow periodically starts a workflow processing a batch of data.

The above is from Apache Airflow’s official documentation.
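The pattern in the quote, where a streaming system lands events in storage and a scheduled job processes a finite batch, can be sketched as follows. The event shape (`ts`, `key`, `value`) and the function names are assumptions for illustration; no Kafka or Airflow APIs are used.

```python
from datetime import datetime, timedelta


def select_batch(events, window_start, window_end):
    """Pick the events that landed in storage during [window_start, window_end)."""
    return [e for e in events if window_start <= e["ts"] < window_end]


def process_batch(batch):
    """Aggregate one finite batch: sum the values per key."""
    totals = {}
    for event in batch:
        totals[event["key"]] = totals.get(event["key"], 0) + event["value"]
    return totals


def run_daily_batch(events, day_start):
    """What a once-a-day workflow run might do for the day starting at `day_start`."""
    window_end = day_start + timedelta(days=1)
    return process_batch(select_batch(events, day_start, window_end))
```

Each run handles a bounded window of data and then finishes, which is the finite batch shape Airflow was built for. The never-ending part of the job stays in the streaming system.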

Airflow is designed so that DAGs are written in Python. You also get the advantages of version control, automation, CI/CD, and several others. Airflow was not designed for people to create workflows in a UI like some other tools in this space.

Some AWS services offer a visual workflow designer; Airflow does not.

5 - Airflow Main Components & Flow

Airflow has four main components. First is the Scheduler, which queues tasks for workers to execute. Second are the workers, which pull tasks from the queue and run them. Third is the Airflow database, which acts as a metadata store. Last is the Webserver, which provides a rich UI to inspect DAGs and statuses.

The Scheduler is really the core engine of Airflow. It interprets the DAGs, manages their dependencies, and schedules them for execution, all in a continuous loop. The Scheduler is also what writes tasks to the job queue for workers.

The workers are where the computation is actually done. It is popular to run workers as containers in Kubernetes pods for easy scaling. The workers write status updates to the database.

The Webserver is then querying the database to display statuses and other relevant information in the UI.
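The flow between these four components can be mimicked with a toy, single-process model. This is a sketch under heavy simplification: the class names, state strings, and the `run_until_idle` driver are invented for illustration and do not correspond to Airflow's actual classes.

```python
from collections import deque


class MetadataDB:
    """Stand-in for the Airflow database: stores the state of each task."""

    def __init__(self):
        self.task_states = {}

    def set_state(self, task_id, state):
        self.task_states[task_id] = state

    def get_state(self, task_id):
        return self.task_states.get(task_id)


class Scheduler:
    """Queues every task that has not started and whose upstream tasks succeeded."""

    def __init__(self, upstream, db, job_queue):
        self.upstream = upstream
        self.db = db
        self.job_queue = job_queue

    def tick(self):
        # One pass of the scheduler loop.
        for task_id, deps in self.upstream.items():
            not_started = self.db.get_state(task_id) is None
            deps_done = all(self.db.get_state(d) == "success" for d in deps)
            if not_started and deps_done:
                self.db.set_state(task_id, "queued")
                self.job_queue.append(task_id)


class Worker:
    """Pulls a task from the queue, runs it, and writes status updates to the DB."""

    def __init__(self, callables, db, job_queue):
        self.callables = callables
        self.db = db
        self.job_queue = job_queue

    def run_one(self):
        if not self.job_queue:
            return False
        task_id = self.job_queue.popleft()
        self.db.set_state(task_id, "running")
        self.callables[task_id]()
        self.db.set_state(task_id, "success")
        return True


def webserver_view(db):
    """Stand-in for the Webserver: it only reads statuses from the database."""
    return dict(db.task_states)


def run_until_idle(scheduler, worker):
    # Alternate scheduler passes and worker runs until no work is left.
    while True:
        scheduler.tick()
        if not worker.run_one():
            break
```

Note that the Scheduler and the Worker never call each other directly. They communicate only through the queue and the database, which is what lets a real deployment scale workers independently.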

6 - Sample Code

6.1 YouTube Playlist

If you want a YouTube playlist to follow along with and try some code out:

6.2 DAG

Looking at the Airflow docs we can see a good example of a simple DAG and what the UI looks like.

```python
from datetime import datetime

from airflow import DAG
from airflow.decorators import task
from airflow.operators.bash import BashOperator

# A DAG represents a workflow, a collection of tasks
with DAG(dag_id="demo", start_date=datetime(2022, 1, 1), schedule="0 0 * * *") as dag:

    # Tasks are represented as operators
    hello = BashOperator(task_id="hello", bash_command="echo hello_world")

    @task()
    def airflow():
        print("r sucks")

    # Set dependencies between tasks
    hello >> airflow()
```

This is a DAG that will run once per day starting Jan 1 2022. The DAG contains two tasks: the first is `hello`, a BashOperator that runs a bash command, and the second is a Python function defined with the `@task` decorator. The `>>` creates a dependency to control the order of execution.
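If the `>>` syntax looks like magic, it is ordinary Python operator overloading. Here is a toy sketch of the idea, assuming a made-up `Task` class; it shows the mechanism only and is not Airflow's actual implementation.

```python
class Task:
    """Toy task that records its upstream and downstream neighbors."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.upstream = []
        self.downstream = []

    def __rshift__(self, other):
        # `self >> other` means: run `self` first, then `other`.
        self.downstream.append(other)
        other.upstream.append(self)
        # Returning the right-hand task lets dependencies chain: a >> b >> c.
        return other


fetch = Task("fetch")
clean = Task("clean")
export = Task("export")

# Declare the order of execution, left to right.
fetch >> clean >> export
```

After the last line, `clean.upstream` contains `fetch` and `clean.downstream` contains `export`, which is all the information a scheduler needs to order the tasks.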

Note: If the code is a bit of a nuisance to read in Python, you can grab the Code Highlighter from Hal.

6.3 UI

Here is what the UI would look like for this DAG:

Notice our tasks and dependencies have been visualized as a flow that makes sense.

This is what a collection of runs would look like. You can see the total tasks, what type of operator they were using, and more metadata about the runs, all in one place.

Views like this are not found in some of the other tools we discussed today.