Probability Fundamentals & The American Income

Stats Analysis 2: an introduction to probability, expected value, and variance (standard deviation). The next post will cover probability distributions in more depth, along with conditional probability.

Required Readings

Table of Contents:

Where you will use this

What is Probability

Expected Value

Variance

1 - Where you will use this

At their core, ML models are advanced statistical models. If you understand the core statistical concepts, you will understand the ideas that make these models run.

Different ML models suit different types of data. The key is to identify what type of data you have early on, so you can choose an appropriate model for the job.

Note: If you want to follow along, you can download the dataset here. If you don't already have a Kaggle account, make one: Kaggle is a central hub for data science competitions, and we will come back to it often. I'd also recommend holding onto this dataset, as we'll use it again later.

2 - What is Probability

In a nutshell, probability measures how likely a specific outcome is to happen. It is a number between 0 and 1: 0 means the outcome will never happen, and 1 means it is guaranteed to happen. Values in between express degrees of likelihood, so 0.5 means the outcome is as likely to happen as not.

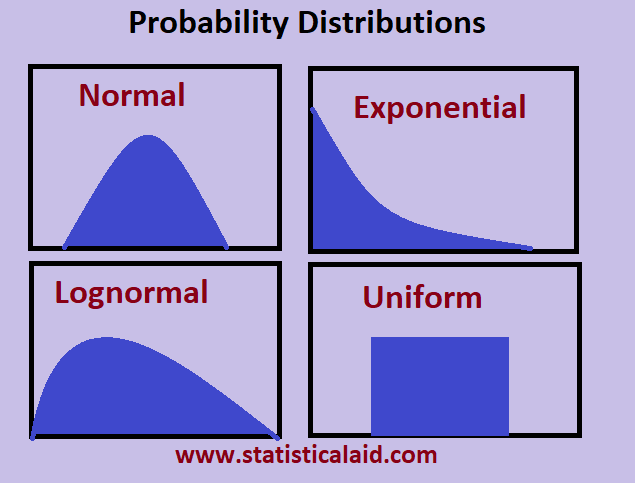
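To make the 0-to-1 scale concrete, here is a minimal sketch in plain Python, assuming a fair six-sided die as the example. It computes a probability two ways: exactly, as favorable outcomes divided by equally likely outcomes, and empirically, as the fraction of simulated rolls that hit the target. The helper names `exact_probability` and `simulated_probability` are hypothetical, chosen only for illustration.

```python
import random
from fractions import Fraction

def exact_probability(favorable: int, total: int) -> Fraction:
    """Classical probability: favorable outcomes / equally likely outcomes."""
    if total <= 0 or not 0 <= favorable <= total:
        raise ValueError("need total > 0 and 0 <= favorable <= total")
    return Fraction(favorable, total)

def simulated_probability(target: int, trials: int, seed: int = 0) -> float:
    """Estimate P(die shows `target`) as the fraction of simulated rolls that hit it."""
    rng = random.Random(seed)  # seeded so the estimate is reproducible
    hits = sum(1 for _ in range(trials) if rng.randint(1, 6) == target)
    return hits / trials

p_exact = exact_probability(1, 6)          # one favorable face out of six
p_sim = simulated_probability(6, 100_000)  # long-run frequency of rolling a 6
print(p_exact, float(p_exact), p_sim)

# The endpoints of the scale: an impossible outcome and a guaranteed one.
print(exact_probability(0, 6), exact_probability(6, 6))
```

With enough trials the simulated frequency settles close to the exact value of 1/6, and neither number can leave the [0, 1] range.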

In probability, different models assume different probability distributions. For example, a uniform distribution is flat: every outcome in its range is equally likely (think of a fair die, where each face has a 1/6 chance). An exponential distribution is not flat: its probability density is highest at 0 and decays as values grow, so smaller values are more likely than larger ones. It is often used to model waiting times. Here is a simple visual reference guide:
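The difference between the two shapes can also be checked numerically. This is a minimal sketch, assuming the standard textbook parameterizations (uniform on the interval [a, b], exponential with a `rate` parameter); `uniform_pdf` and `exponential_pdf` are hypothetical helper names, not functions from any particular library.

```python
import math

def uniform_pdf(x: float, a: float = 0.0, b: float = 1.0) -> float:
    """Density of Uniform(a, b): constant 1 / (b - a) inside [a, b], zero outside."""
    return 1.0 / (b - a) if a <= x <= b else 0.0

def exponential_pdf(x: float, rate: float = 1.0) -> float:
    """Density of Exponential(rate): rate * exp(-rate * x) for x >= 0, zero for x < 0."""
    return rate * math.exp(-rate * x) if x >= 0 else 0.0

xs = [0.0, 0.25, 0.5, 0.75, 1.0]
for x in xs:
    # The uniform column stays flat; the exponential column shrinks as x grows.
    print(f"x={x:.2f}  uniform={uniform_pdf(x):.3f}  exponential={exponential_pdf(x):.3f}")
```

Evaluating both densities on the same grid makes the contrast visible: the uniform values are identical at every point, while the exponential values fall steadily, which is why small values dominate exponential data.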


Subscribe to Data Science & Machine Learning 101 to keep reading this post and get 7 days of free access to the full post archives.