Our previous examples assumed that we already had a labeled dataset and could start training a model right away. In the real world, you don’t start from a dataset; you start from a problem. A few examples:

Flagging spam and offensive text content among the posts in a chat app

Building a music recommendation system for users of an online Spotify clone

Detecting credit card fraud for an e-commerce website

Using satellite images to predict the location of as-yet unknown archaeological sites

You can’t just import the data from Keras, throw in scikit-learn or a deep neural net, and call it a day. A real project usually follows a three-step process.

Define the task - Understand the problem domain and the business logic underlying what the customer asked for. Collect a dataset, understand what the data represents, and choose how you will measure success on the task.

Develop a model - Prepare your data so that it can be processed by a machine learning model, select a model evaluation protocol and a simple baseline to beat, train a first model that has generalization power and that can overfit, and then regularize and tune your model until you achieve the best generalization performance you can.

Deploy the model - Present your work to the stakeholders and ship it to a web server, an app, or wherever it needs to live. Then start collecting the data you’ll need to improve the model later, if needed.

Let’s dig deeper

1 - Define the task

You can’t do any real work until you figure out the context of the problem. Why is the person trying to solve this specific problem? What value will they get from the solution? How will your model be used? How will it fit into their business process? What kind of data is already available? How can additional data be collected?

1.1 Frame the problem

Here are some things you’ll want to consider when looking at the problem:

What will your input data be? What are you trying to predict?

What type of ML task are you facing? Is it binary classification? Multiclass or multilabel classification? Scalar or vector regression? Ranking? Or something else?

What do existing solutions look like? Maybe your customer already has an algorithm that handles spam filtering or credit card fraud detection.

Are there any specific constraints you’ll need to deal with? For example, you could find out that the app you’re building a spam detection system for is strictly end-to-end encrypted, so your model will have to live on the user’s phone, where far fewer resources are available.

1.2 Dataset Collection

Once you understand the nature of the task, and you know what your inputs and targets will be, it’s time to collect some data. Just a heads up: this is the most time-consuming part of most ML projects.

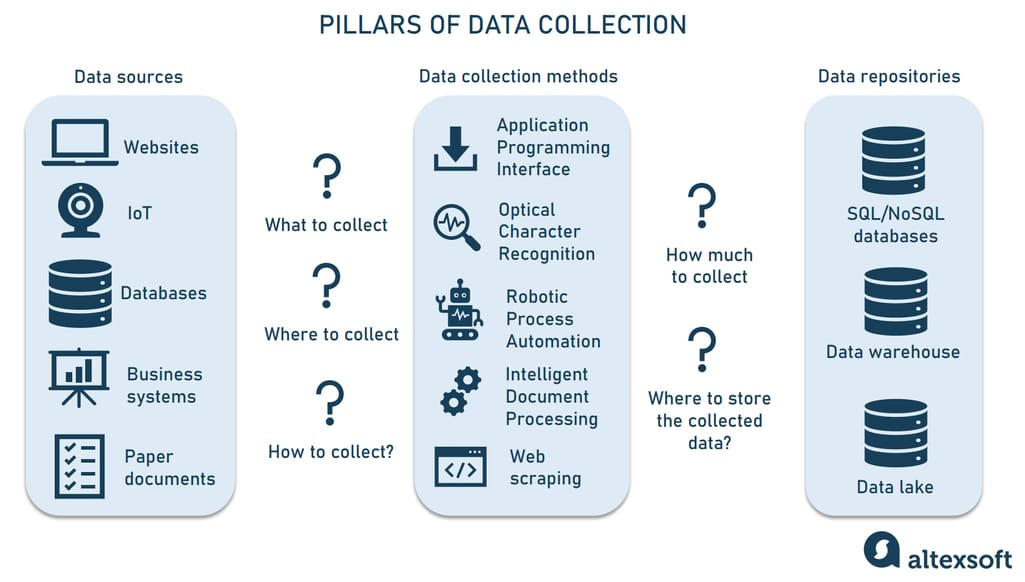

I can’t really give you a specific guide here, as there are so many different ways to tackle this. Maybe you’ll need to scrape the data, maybe buy it, maybe pay for an API, or something else.

Sometimes, even once you have the data, you’ll find that the inputs come without labels, so the targets have to be annotated before you can train a supervised model.

1.3 Beware of non-representative data

ML models can only make sense of inputs that are similar to what they’ve seen before. As such, it’s critical that the data used for training be representative of the production data. This concern should be the foundation of ALL your data collection work.

Let’s say you’re building an app where users can take a picture of a plate of food to find out the name of the dish. You train a model using pictures from an image-sharing Facebook group. When you deploy the model and look at the feedback, you find a bunch of angry users complaining that your app gets the answer wrong 9 times out of 10. What just happened?

Well, when you look at the photos from the Facebook group, you quickly see that most of them are professional shots taken at really expensive restaurants. Your users, on the other hand, are snapping basic cellphone photos. The two don’t match, and your model’s accuracy falls off a cliff.

This is what happens when your training data isn’t representative of the production data.
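
One rough way to catch this kind of mismatch early is to compare a simple summary statistic between the training set and a sample of production inputs. The sketch below is only an illustration: it assumes you have already reduced each photo to a single number (mean brightness here), the helper name `standardized_mean_shift` is made up for this post, and the data is randomly generated rather than real.

```python
import numpy as np

def standardized_mean_shift(train_values, prod_values):
    """Difference between the two means, in units of the training standard deviation."""
    train_values = np.asarray(train_values, dtype=np.float64)
    prod_values = np.asarray(prod_values, dtype=np.float64)
    train_std = train_values.std()
    if train_std == 0:
        raise ValueError("training values have zero variance")
    return (prod_values.mean() - train_values.mean()) / train_std

# Made-up numbers: mean pixel brightness per photo, on a 0 to 1 scale.
rng = np.random.default_rng(0)
train_brightness = rng.normal(loc=0.65, scale=0.05, size=1000)  # bright studio shots
prod_brightness = rng.normal(loc=0.40, scale=0.05, size=1000)   # dim phone photos

shift = standardized_mean_shift(train_brightness, prod_brightness)
print(f"shift = {shift:.1f} training standard deviations")
```

A shift of several standard deviations on something as basic as brightness is a strong hint that the two sets of photos come from different worlds. A small shift proves nothing by itself, since the mismatch may sit in a feature you didn’t measure.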

2 - Develop a model

Once you know how you will measure your progress, you can get started with model development. Luckily this is the easy part.

2.1 Preparing the data

As you’ve already seen, deep learning models typically don’t ingest raw data. Data preprocessing aims at making the raw data at hand more amenable to neural networks. This includes vectorization, normalization, and handling missing values.

Vectorization: All inputs and targets in a neural network must be tensors, typically of floating-point (decimal) data, or in some cases integers. Whatever data you need to process (sound, images, text), you first need to turn it into tensors. We call this step data vectorization.
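
To make this concrete, here is a minimal sketch of one simple way to vectorize text: multi-hot encoding with plain NumPy. The helper names `build_vocabulary` and `multi_hot_encode` and the two sample posts are made up for illustration, and splitting on whitespace is a simplifying assumption. Real projects usually lean on a library tokenizer instead.

```python
import numpy as np

def build_vocabulary(texts):
    """Map each distinct lowercase word to an integer index, in order of first appearance."""
    vocabulary = {}
    for text in texts:
        for word in text.lower().split():
            if word not in vocabulary:
                vocabulary[word] = len(vocabulary)
    return vocabulary

def multi_hot_encode(texts, vocabulary):
    """Return a float32 array of shape (len(texts), len(vocabulary))."""
    encoded = np.zeros((len(texts), len(vocabulary)), dtype=np.float32)
    for row, text in enumerate(texts):
        for word in text.lower().split():
            index = vocabulary.get(word)
            if index is not None:  # words outside the vocabulary are ignored
                encoded[row, index] = 1.0
    return encoded

posts = ["win free money now", "free lunch at noon"]
vocabulary = build_vocabulary(posts)
x = multi_hot_encode(posts, vocabulary)
print(x.shape, x.dtype)
```

Each row of `x` is one post, and each column answers "does this word appear in the post?" with a 1.0 or a 0.0.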

Value Normalization: Recall the digit classification example from a while back. Each photo of a digit was encoded as integers from 0 to 255, so before passing the data to our model we had to rescale it to the 0 to 1 range. In general, it isn’t safe to feed a neural network data that takes relatively large values, or data that is heterogeneous (for example, one feature ranging from 0 to 1 and another from 100 to 200).
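
Here is a minimal NumPy sketch of the two usual cases. The arrays are randomly generated or made-up stand-ins, not the actual digits dataset, and the two-column `features` table is purely hypothetical.

```python
import numpy as np

# Stand-in for a batch of 8-bit grayscale images: integers in [0, 255].
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(4, 28, 28), dtype=np.uint8)

# Case 1: a known, fixed range. Cast to float and divide by the maximum value.
images_scaled = images.astype("float32") / 255.0

# Case 2: heterogeneous features. Standardize each column separately
# so that it ends up with mean 0 and standard deviation 1.
features = np.array([[1200.0, 3.0],
                     [ 800.0, 1.0],
                     [1000.0, 2.0]])
mean = features.mean(axis=0)
std = features.std(axis=0)
features_standardized = (features - mean) / std

print(images_scaled.min(), images_scaled.max())
print(features_standardized.mean(axis=0), features_standardized.std(axis=0))
```

One design note: compute `mean` and `std` on the training data only, then reuse those same numbers for validation, test, and production data.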

Handling Missing Data: There are a bajillion data skills posts on this Substack that cover this. You pretty much know how to take care of it.

2.2 Beat a baseline

As you start working on the model itself, your first goal should be to achieve statistical power. That means figuring out a sensible baseline and making sure your ML model does a better job than it.

Example: if you’re predicting between 2 classes, does your ML model do a better job than a simple coin flip? And what if one of the classes has lots of samples and the other does not? Then always predicting the bigger class becomes the baseline to beat.
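
With imbalanced classes, a coin flip is too easy a target. A tougher sanity check is the accuracy you would get by always predicting the most common class. Here is a small sketch with made-up labels; the helper name `majority_class_accuracy` is just for illustration.

```python
import numpy as np

def majority_class_accuracy(labels):
    """Accuracy of a model that always predicts the most frequent label."""
    labels = np.asarray(labels)
    _, counts = np.unique(labels, return_counts=True)
    return counts.max() / labels.size

# Made-up imbalanced labels: 950 legitimate transactions (0), 50 fraudulent (1).
labels = np.array([0] * 950 + [1] * 50)
baseline = majority_class_accuracy(labels)
print(f"baseline accuracy: {baseline:.2f}")
```

If your model’s accuracy isn’t clearly above this number, it hasn’t learned anything useful yet. For data this skewed, accuracy may not be the right metric in the first place.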

3 - Deploy your model

You can read this post on deployment if you just want the code:

MLOps 16: Deployment on AWS

But deployment has a non-code side too:

3.1 Setting expectations with stakeholders

Stakeholder trust is about consistently meeting or exceeding their expectations. The actual system you deliver is only half of that picture; the other half is setting appropriate expectations before your ML model is deployed.

Once you work in the real world, you’ll find that the expectations of non-technical people towards AI systems are often unrealistic.

Can’t tell you how often I’ve had stakeholders say “oh the AI can figure it out, I’m sure.”

3.2 Ship/Deploy the model

Just be sure you know how it will be deployed:

REST API?

On a web app?

On a mobile phone?

Within another service/app?

Then start writing the code for it.

3.3 Monitoring

Once you’ve successfully deployed a model, you’re still not done. The next thing you’ll need to do is monitor its behavior, its performance on new data, its interaction with the rest of the application, and most important: its impact on the actual business.

Here are a few things to consider:

Has user engagement in your app gone up or down after deployment?

Has the overall accuracy of the model gone up or down?

Is there any specific user feedback?

If it’s possible, you should try to do a regular manual audit of the model’s predictions on production data.

3.4 Maintenance

No model rules forever. You already know what concept drift is: over time, the characteristics of your production data will change, and this will slowly reduce the performance and relevance of your model. Here are a few things to keep an eye on:

Watch out for changes in the production data. Are new features becoming available? Should you expand or otherwise edit the label set?

You should keep collecting new data and keep improving your pipeline over time. More specifically, you should pay special attention to collecting samples that your ML model is having issues classifying. These samples are the most likely to help improve performance.
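
One simple way to find those difficult samples, assuming your classifier outputs class probabilities, is to pull out the predictions where the model was least sure of itself. The sketch below uses made-up probabilities, and the helper name `least_confident_indices` is hypothetical. Low confidence is only a proxy for "hard", since a model can also be confidently wrong.

```python
import numpy as np

def least_confident_indices(probabilities, k):
    """Indices of the k samples whose top predicted probability is lowest."""
    probabilities = np.asarray(probabilities, dtype=np.float64)
    confidence = probabilities.max(axis=1)  # probability of the predicted class
    order = np.argsort(confidence)          # ascending: least confident first
    return order[:k]

# Made-up softmax outputs for four production samples and three classes.
probabilities = np.array([
    [0.98, 0.01, 0.01],
    [0.40, 0.35, 0.25],
    [0.10, 0.85, 0.05],
    [0.34, 0.33, 0.33],
])
to_review = least_confident_indices(probabilities, k=2)
print(to_review)
```

The samples at these indices are good candidates to send for manual labeling and add to the next training run.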