Machine Learning Operations (MLOps) Part 2

You have about 7 to 10 years to fully know this stuff by heart. This is a deep dive into what teams at the cutting edge of tech are dealing with, and if you want to become an MLE, you 100% need this.

This is going to be a bit complex, so take your time reading it. Click on the links to see the official documentation when available. Everyone reading this has a good 7 to 10 years to prep for MLOps before every data team starts using this stuff.

If you have questions, you can leave them in the comments. The bear is friendly.

Oh, but you will need to learn this at some point. If you think you don’t need to learn MLOps, this is going to be you:

Click here to see MLOps Part 1

Table of Contents:

Finding Your Way

Deep Dive

Solutions

Wrap Up

5 - Finding your way

I encourage you to analyze your challenges and then decide how far you want to take it for each of them. Do not fall for the shiniest option out there; it may be complete overkill for your situation.

The more sophisticated solutions will typically require a larger upfront investment and you need to assess if the benefits justify it.

There are a few typical challenges that drive the need for more sophisticated solutions:

Fast-paced environments:

Any situation that does not give you a lot of time to react usually requires robust automation. Think about algorithmic trading, for example: you certainly would not want to make bad decisions for hours.

Here’s a costly algorithmic trading mistake ($440 million lost)

Legal requirements:

For many applications you need to be able to prove how your ML solution made a decision.

Complex architecture:

Whenever errors in your models may cause widespread issues in downstream applications.

6 - Deep Dive

Let us take a closer look at one of the dimensions and show you different options for model performance management. The goal here is to make sure that your model makes the right decisions as time moves on.

Note: Any Data Scientist/MLE who wants to work with time series analysis should pay attention. (This includes Quants.)

Basic Data Architecture

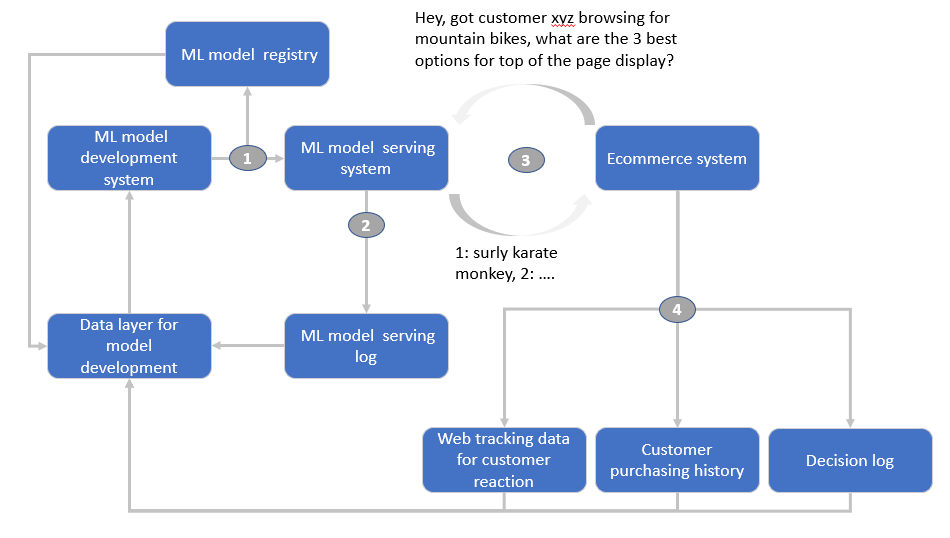

Let us use a simple ecommerce case as an illustration:

With such a fairly common architecture, none of the involved systems has the full data, so you need to make sure that you bring it together. I will describe my recommended functional building blocks for model performance management:

The Model Registry: keeps track of each model you deploy for usage.

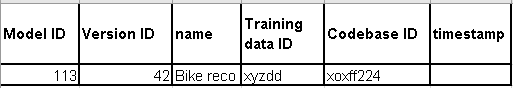

The most basic version of this could be a database table like this:

The idea here is that you get a Unique Identifier (Primary Key in SQL) for each model and can get back to the exact code base and data you used to build it.
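As a rough illustration of such a registry table, here is a minimal sketch using Python's built-in `sqlite3`. The table name, the column names, and the `register_model` helper are all assumptions made for this example; your own registry may track different fields.

```python
import sqlite3

# Hypothetical schema: every table and column name here is an illustrative assumption.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE model_registry (
        model_id      INTEGER PRIMARY KEY,  -- unique identifier per deployed model
        model_name    TEXT NOT NULL,
        code_version  TEXT NOT NULL,        -- e.g. a git commit hash
        training_data TEXT NOT NULL,        -- pointer to the exact data snapshot
        deployed_at   TEXT NOT NULL
    )
    """
)


def register_model(conn, model_name, code_version, training_data, deployed_at):
    """Insert one deployed model and return its generated model_id."""
    cur = conn.execute(
        "INSERT INTO model_registry (model_name, code_version, training_data, deployed_at) "
        "VALUES (?, ?, ?, ?)",
        (model_name, code_version, training_data, deployed_at),
    )
    conn.commit()
    return cur.lastrowid


def lookup_model(conn, model_id):
    """Return (code_version, training_data) for a model_id, or None if unknown."""
    return conn.execute(
        "SELECT code_version, training_data FROM model_registry WHERE model_id = ?",
        (model_id,),
    ).fetchone()
```

With this in place, any `model_id` found in a log can be traced back to the code version and data snapshot that produced the model.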

Keep a log for each time the model has been called:

At the most basic level you can simply store request and response data. Better solutions are possible, but this is the minimum. Note that we are storing the unique combination of model and model ID here; this allows us to pinpoint exactly which version was used for the decision if needed.

The unique case ID will be handed over to the downstream systems together with the decision.
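The call log described above could look roughly like the sketch below. It assumes a hypothetical `model_call_log` table and a `log_call` helper, stores request and response as JSON text, and uses a UUID as the case ID. None of these choices come from a specific product; they are just one simple way to do it.

```python
import json
import sqlite3
import uuid
from datetime import datetime, timezone

# Hypothetical log table: names and columns are assumptions for illustration.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE model_call_log (
        case_id       TEXT PRIMARY KEY,  -- handed to downstream systems with the decision
        model_name    TEXT NOT NULL,
        model_id      INTEGER NOT NULL,  -- points back to the model registry
        request_json  TEXT NOT NULL,
        response_json TEXT NOT NULL,
        called_at     TEXT NOT NULL
    )
    """
)


def log_call(conn, model_name, model_id, request, response):
    """Store one model call and return the case ID to pass downstream."""
    case_id = str(uuid.uuid4())
    conn.execute(
        "INSERT INTO model_call_log VALUES (?, ?, ?, ?, ?, ?)",
        (
            case_id,
            model_name,
            model_id,
            json.dumps(request),
            json.dumps(response),
            datetime.now(timezone.utc).isoformat(),
        ),
    )
    conn.commit()
    return case_id
```

The returned `case_id` is what the ecommerce system would keep alongside the decision, so later outcomes can be joined back to the exact model call.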

You would typically also implement a feature to test alternative models or random assignment with a certain percentage of the cases.
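A minimal sketch of such a random assignment, assuming a hypothetical `assign_model` function and a "champion vs. challenger" naming that is not from the original text:

```python
import random


def assign_model(champion_id, challenger_id, challenger_share, rng=random):
    """Pick the model ID for one case.

    challenger_share is the fraction of cases (0.0 to 1.0) routed to the
    alternative model; everything else goes to the current model.
    """
    if rng.random() < challenger_share:
        return challenger_id
    return champion_id
```

Whatever ID comes back should be written to the call log, otherwise you cannot tell afterwards which model handled which case.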

Our recommendation model is called from the ecommerce system and responds. You would often fetch additional data for the customer, but we keep it simple here. Here’s an ecommerce business’ SQL database example:

In our example the model is supposed to deliver the best 3 options to display at the top of the page for this customer. Usually you would calculate something like a customer-specific affinity score for a set of possible products, rank them by score, and pick the top 3.
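The rank-and-pick step can be sketched in a few lines. The `top_n` function and the score dictionary are assumptions for illustration; how the affinity scores are produced is up to your model.

```python
def top_n(scores, n=3):
    """Return the n product IDs with the highest affinity score, best first.

    scores maps product ID -> affinity score for one customer.
    """
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [product for product, _ in ranked[:n]]


# Example with made-up scores for four products.
example_scores = {"A": 0.2, "B": 0.9, "C": 0.5, "D": 0.7}
best_three = top_n(example_scores)
```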

You need to account for the possibility that the best option for this customer is currently out of stock and therefore should not be recommended. This effectively means that you implement a business rule that discards out-of-stock products.

The way I sketched it here, our ML serving system does not have stock level information. That is why we hand over three options, under the assumption that one will always be available. The ecommerce system picks the highest-ranking available product and renders the page with it. Because the ecommerce system now has options, you need to record the final decision here.
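On the ecommerce side, picking the highest-ranking available option and recording the final decision might look like this sketch. The function names, the record fields, and the in-memory `final_decisions` list (standing in for a real table) are all assumptions.

```python
def pick_available(ranked_options, in_stock):
    """Return the first ranked product that is in stock, with its rank, or None."""
    for rank, product in enumerate(ranked_options, start=1):
        if product in in_stock:
            return {"product": product, "rank": rank}
    return None


# Stand-in for a database table in the ecommerce system.
final_decisions = []


def record_final_decision(case_id, ranked_options, in_stock):
    """Pick the product to show and store the decision under the case ID."""
    choice = pick_available(ranked_options, in_stock)
    record = {
        "case_id": case_id,
        "shown_product": choice["product"] if choice else None,
        "rank_shown": choice["rank"] if choice else None,
    }
    final_decisions.append(record)
    return record
```

Storing `rank_shown` makes it easy to see later how often the model's first choice was actually displayed.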

Note: You now get to see why Data Wrangling skills are important. Most of your time will be spent trying to figure out how to code the above tables, not on running ML algorithms.

Imagine the business rule set as a decision tree where each leaf (tree endpoint) has a unique ID. If you can pull up the exact version of the rule set and know the leaf ID, you can understand how this decision was made when you need to.
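To make the leaf ID idea concrete, here is a small sketch of a rule set written as nested conditions, where every endpoint returns its own ID. The version string, the leaf IDs, and the "discontinued" rule are hypothetical; only the out-of-stock rule comes from the example above.

```python
RULESET_VERSION = "v1"  # hypothetical version label, stored with every decision


def apply_rules(stock_level, is_discontinued=False):
    """Return (decision, leaf_id) for one candidate product.

    Each return statement is one leaf of the decision tree.
    """
    if is_discontinued:          # assumed extra rule, for illustration only
        return "discard", "L1"
    if stock_level <= 0:         # the out-of-stock rule
        return "discard", "L2"
    return "keep", "L3"


def explain(leaf_id):
    """Translate a logged leaf ID back into a human-readable reason."""
    reasons = {
        "L1": "product is discontinued",
        "L2": "product is out of stock",
        "L3": "product passed all rules",
    }
    return f"ruleset {RULESET_VERSION}, leaf {leaf_id}: {reasons[leaf_id]}"
```

Logging `RULESET_VERSION` and the leaf ID next to the case ID is enough to reconstruct why a given product was kept or dropped.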

A Word of Warning: the attribution of revenue to certain customer interactions is not trivial and will often cause extensive arguments - there is money on the line after all. The data collection described here should allow you to track model success for most common attribution scenarios.

If you honestly want to test if your model adds to the bottom line, I would compare performance against a random or some form of baseline recommendation.
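A very simple version of that comparison is sketched below. It assumes each logged case carries a `group` ("model" or "baseline") and a `converted` flag; both field names are made up for this example. A real evaluation would also need enough cases and a significance test before drawing conclusions.

```python
def conversion_rate(cases):
    """Share of cases that converted; 0.0 for an empty list."""
    if not cases:
        return 0.0
    return sum(1 for c in cases if c["converted"]) / len(cases)


def uplift_vs_baseline(cases):
    """Difference in conversion rate between model and baseline cases."""
    model_cases = [c for c in cases if c["group"] == "model"]
    baseline_cases = [c for c in cases if c["group"] == "baseline"]
    return conversion_rate(model_cases) - conversion_rate(baseline_cases)
```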

Solutions

All the features I have described above can be achieved with some fairly simple software components, a database and some operations procedures. You could simply leave it at that and write a few Python scripts to check your model's performance at regular intervals.
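One such script could be as small as the sketch below: it compares the average of the most recent days against the period before and flags a large relative drop. The function name, the 7-day window, and the 20% threshold are arbitrary assumptions; pick values that fit your own data.

```python
def performance_dropped(daily_rates, window=7, max_drop=0.2):
    """Return True if the recent average fell more than max_drop (relative)
    below the average of the preceding window.

    daily_rates is a list of one metric value per day, oldest first.
    """
    if len(daily_rates) < 2 * window:
        return False  # not enough history to compare
    reference = sum(daily_rates[-2 * window:-window]) / window
    recent = sum(daily_rates[-window:]) / window
    if reference == 0:
        return False  # avoid dividing by zero; nothing to compare against
    return (reference - recent) / reference > max_drop
```

Run on a schedule, a check like this can feed a simple alert when the function returns `True`.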

You can now decide how far you need to go to match your situation.

Now let us have a look at some commercial options:

I encourage you to take some time and understand what those solutions really bring to the table. I will leave it at a short list of typical product features here:

Pre-built data schemas for most of what I described above and usually much more

Ready to use software components to capture the tracking data

Out-of-the-box deployment options such as REST API model endpoint creation at the push of a button

Simple way to create monitoring dashboards

Alerting

Some capability to automate workflows such as re-training

Good model management will most likely be a continuous effort for your team with any toolset.

Wrap Up & More Content

I hope that I gave you a good overview of the things you should consider for a machine learning solution. There are of course many more details to discuss, but this should be a good starting point.

We’ll be continuing the MLOps section with a Python-based workflow orchestration platform called Apache Airflow. Apache Airflow is used to create and maintain data pipelines by letting you define and schedule tasks.

Cloud Engineering with BowTiedCelt will be handling the introduction for it.

Apache Airflow is kind of like Windows Task Scheduler.