Python Data Skills 1: Fetching CSV Data

If you think this will just be me teaching you how to use the pd.read_csv() method, then you haven't spent enough time on this substack yet ^_^

When working with tabular data in Python, we can use the pandas library. From there, we can use the pandas.read_csv(), or more commonly used: pd.read_csv() to fetch csv data, and turn this into a pandas dataframe.

Great, awesome. Sounds simple enough right?

Now, let’s talk about the massive list of things that can go wrong, and the solutions to solve those problems.

By the way, this post is only meant for those going the route of a:

Quant

Machine Learning Engineer

Data Scientist

If you are not trying to interview for one of the roles above (or those like it). Then, you’re wasting your time reading this series. Go read the SQL series first to get the SQL skills to land a Data Analyst role first. Then, you can learn about Data Skills in Python.

Table of Contents

What are Data Skills?

CSV Files

Pandas Data Types

Header On 2nd Row

Handling Low Memory

Handling Dates

1 - What are Data Skills?

1.1 What are Data Skills

Data skills refer to the ability to collect, manipulate, and wrangle data. This encompasses a wide range of technical skills and knowledge areas. We will use programming languages like Python, R, or SQL for this a lot.

2 - CSV Files

A Comma Separated Values (CSV) file is a plain text file that contains a list of data. These files are used for exchanging data between different applications, as many systems support CSV format. This makes it a standard way for doing data exports/imports. Each line in a CSV file corresponds to a single data record, and each field in that record is separated by a comma. Though other separators (like semicolons or tabs) can be used as well. The term "CSV" implies the use of the comma.

The first line of the CSV file acts as a header row, listing out the names of each of the fields.

3 - Pandas Data Types

When you are working with Pandas (most commonly used library for wrangling data), there are 3 main data types, we’ll look at:

Series: This is a 1-D labeled array. It's capable of holding any data type (integers, strings, floating point numbers, Python objects, etc.). It is a column in an Excel spreadsheet.

DataFrame: This is a 2-D labeled array with columns of different data types. You can think of it like a spreadsheet or SQL table.

Panel: It's a three-dimensional data structure, which can be imagined as a dictionary of DataFrames.

3.1 Loading a CSV File

You can use the pd.read_csv() function to load up a CSV file as a pandas DataFrame into your memory. Click here for the documentation.

90% of the time, this will get the job done.

However, you don’t get your big bucks for these 90% of the time. You get your real income for the other 10% when things don’t go as intended.

Now, let’s talk about a brief list of things that can go wrong, and how to address them.

These are perfectly valid questions I can ask you during the interview, and I expect you to get them right.

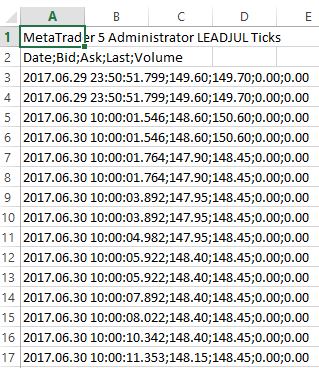

4 - Header On 2nd Row

One of the most frustrating things you’ll deal with is fetching data from those outside of IT roles. A lot of times, their csv file will have awful formatting. One of the most annoying things is when they don’t have the column names on the first row.

See image below:

If we just tried the default method:

pd.read_csv('my_csv.csv')This will result in Pandas giving us basically junk as the column names.

One method (not recommended) is to do:

Get a list of all elements in the 2nd row

Get Pandas to drop the 2nd row

Get Pandas to rename the columns to the above list

Drop the list (as a variable)

Or, if you got solid data skills, you just use the skiprows parameter. Here is the documentation for it:

Here is what it would look like in code format

pd.read_csv('my_csv.csv', skiprows=1)5 - Handling Low Memory

Another common problem you’ll run into (especially if you are pulling daily pricing data) is that you’ll get too many rows within your csv. This will result in your running out of memory when you pull data.

Let’s talk about how to handle it.

5.1 Low_Memory

The first solution is to use the low_memory parameter. Here is the documentation of low_memory:

When low_memory is set to True, the CSV file is processed in chunks (internally), and this uses less memory while parsing. Keep in mind that using this method will lead to longer wait times. It could also lead to pandas mis-interpreting the data types in the columns in different data chunks.

5.2 Chunksize

Here is the documentation for chunksize.

This parameter is used to read a file in chunks of a specific size. This is useful for large files that don't fit in the memory. When you specify the chunksize parameter, read_csv returns an iterable object. You can iterate over this object (with a for loop) to get chunks of the dataframe of the specified size.

This allows you to process large files in manageable chunks, rather than having to load the whole file into memory at once. Here is a code example that reads a lot of rows using chunksize:

for chunk in pd.read_csv(my_file, chunksize=100):

# chunk is a DataFrame of 100 rows. The code below is a for loop that prints out each section of 100 rows.

for index, row in chunk.iterrows():

print(row)Now, we can work on our csv small chunks at a time. Here is the major difference between the two:

low_memory affects how the file is internally processed to optimize memory usage. While, chunksize lets you load and work with small sections of large files at a time.

6 - Handling Dates

Another headache when trying to load data from a CSV is when we are dealing with Dates. In R, life is easy, you just use the lubridate library, and then use as.Date(), and call it a day. Unfortunately, Python isn’t that simple. Here is a list of different date types that exist within Python:

datetime.datetime

datetime.date

numpy.datetime64

pandas.Timestamp

pandas.DatetimeIndex

datetime64[ns]

object (yes really…)

When you try to load up a csv in pandas, sometimes Pandas will have issues when dealing with date type columns. If it gets issues, you’ll now get a data type called “object”.

To handle this problem, when are are loading up a csv in pandas, and we have a date column, we can use the parse_dates parameter to get Pandas to treat them as a datetime64[ns] column.

Here is the documentation for parse_dates:

Here is what it would look like in the code format:

pd.read_csv('my_csv.csv', parse_dates = ['date'])This would give us the following:

![dtype as datetime64[ns] is great for handling time issues dtype as datetime64[ns] is great for handling time issues](https://substackcdn.com/image/fetch/$s_!nNZ9!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F849073b2-bdb3-43db-ba3b-f9f0a006bc57_534x116.png)